right. given the numbers, “how to win back the working class” should be as much about care and service workers as hard hats. and yet.

25.10.2025 12:02 — 👍 4944 🔁 460 💬 34 📌 56

As you cancel streaming services, here is a casual reminder that only 16% of Americans read for pleasure anymore, and your local library has hundreds or thousands of books you haven't read.

They would love to see you stop by and renew your library card.

18.09.2025 19:55 — 👍 13647 🔁 5526 💬 253 📌 486

Why Language Models Hallucinate, by Kalai et al.

Like students facing hard exam questions, large language models sometimes guess when uncertain, producing plausible yet incorrect statements instead of admitting uncertainty. Such “hallucinations” persist even in state-of-the-art systems and undermine trust. We argue that language models hallucinate because the training and evaluation procedures reward guessing over acknowledging uncertainty, and we analyze the statistical causes of hallucinations in the modern training pipeline. Hallucinations need not be mysterious—they originate simply as errors in binary classification. If incorrect statements cannot be distinguished from facts, then hallucinations in pretrained language models will arise through natural statistical pressures. We then argue that hallucinations persist due to the way most evaluations are graded—language models are optimized to be good test-takers, and guessing when uncertain improves test performance. This “epidemic” of penalizing uncertain responses can only be addressed through a socio-technical mitigation: modifying the scoring of existing benchmarks that are misaligned but dominate leaderboards, rather than introducing additional hallucination evaluations. This change may steer the field toward more trustworthy AI systems.

Ironically, it appears that AI chatbots hallucinate for the same reason that students feel compelled to use them:

They were socialized in a high-stakes testing culture that rewards guessing and maybe getting it right over admitting when there's something you just don't know.

08.09.2025 10:41 — 👍 1463 🔁 416 💬 43 📌 58

Survey results from student voices flash survey on AI answered by 1047 students. 55% brainstorming ideas; 50% asking it questions like a tutor; 46% studying for exams or quizzes; 44% editing writing or checking work; 31% outlining papers; 26% generating citations; 42% using it like an advanced search engine; 25% completing assignments or coding work; 3*% generating summaries; 19% writing free responses or essays; 15% have not used it for coursework

Useful to see these responses. But context we need: 1) are they getting any guidance in "asking it questions like a tutor" (tutors do things other than answer questions) 2) what does brainstorming look like? In some contexts, brainstorming is critical thinking/1 www.insidehighered.com/news/student...

30.08.2025 12:02 — 👍 17 🔁 4 💬 1 📌 1

you don't need chatGPT i am perfectly capable of drinking a bottle of water and lying to you

01.05.2025 14:37 — 👍 15330 🔁 5209 💬 61 📌 66

There was this phone number you could call and the entire purpose was to tell you the time and the temperature.

And if a friend’s house had Call Waiting, you could pick a time they’d call time & temp and then you’d call them so you could talk at night without the ringer waking up their parents.

14.07.2025 03:59 — 👍 4 🔁 0 💬 0 📌 0

There's a lot of calling Crémieux an academic and Chris Rufo a journalist going on in this news story accusing a man of misrepresenting his identity

07.07.2025 03:24 — 👍 5448 🔁 1079 💬 21 📌 24

“AI tools…may unintentionally hinder deep cognitive processing, retention, and authentic engagement with written material. If users rely heavily on AI tools, they may achieve superficial fluency but fail to internalize the knowledge or feel a sense of ownership over it.”

16.06.2025 12:22 — 👍 2 🔁 0 💬 0 📌 0

Hey look, I totally get if you say “I don’t trust people who use AI in any form, and that’s why I’m not putting my books on Kobo” but if you are putting your books on Amazon as you say that, I don’t believe that the reason you’re not putting your books on Kobo is that you don’t like AI.

03.06.2025 14:08 — 👍 395 🔁 32 💬 7 📌 3

The Reading Struggle Meets AI

The crisis has worsened, many professors say. Is it time to think differently?

In one campus study on reading, students had two main complaints. By far their biggest gripe is that the assigned reading rarely gets talked about in class. The second reason — perhaps a more complicated one — is that they don’t understand what they are supposed to be reading for. chroni.cl/4mQCvs3

27.05.2025 11:28 — 👍 49 🔁 17 💬 1 📌 7

hate it when content not created or approved by the newsroom happens to get printed in the end product

20.05.2025 14:27 — 👍 62 🔁 15 💬 3 📌 0

This was just posted by @tbretc.bsky.social on another platform. The Chicago Sun-Times obviously gets ChatGPT to write a ‘summer reads’ feature almost entirely made up of real authors but completely fake books. What are we coming to?

20.05.2025 11:04 — 👍 12982 🔁 3828 💬 771 📌 1885

Everyone Is Cheating Their Way Through College

ChatGPT has unraveled the entire academic project.

“Massive numbers of students are going to emerge from university with degrees, and into the workforce, who are essentially illiterate…Both in the literal sense and in the sense of being historically illiterate and having no knowledge of their own culture, much less anyone else’s.”

07.05.2025 11:08 — 👍 3827 🔁 1406 💬 173 📌 915

Movie you’ve watched more than six times using gifs.

(“Hard mode” no Star Wars, Star Trek, or LOTR)

26.04.2025 03:11 — 👍 1 🔁 0 💬 1 📌 0

Pineapple lifesavers lived up to their name in my experience!

23.04.2025 21:18 — 👍 1 🔁 0 💬 0 📌 0

you will pry my em dashes—my favorite punctuational tools—from my cold dead hands

22.04.2025 17:08 — 👍 102 🔁 18 💬 3 📌 0

Like many people, the recent glut of Studio-Ghibli-styled AI images has left a bad taste in my mouth.

My grandma (we called her “granarch” because she was 𝘢𝘯𝘢𝘳𝘤𝘩𝘪𝘤) wrote “Howl's Moving Castle”, which was adapted by Hayao Miyazaki into a film of the same name.

02.04.2025 08:50 — 👍 413 🔁 128 💬 9 📌 5

poor Nintendo, announcing the Switch 2 just in time to have it cost ten thousand dollars

02.04.2025 20:44 — 👍 6717 🔁 708 💬 61 📌 36

I'm struck once again by the similarity in failure rate between generative AI and "plagiarism detection software," which misses replicated source material 40-60% of the time. I don't think it's a coincidence. Most likely, it shows the threshold where tech CEOs feel they can bilk the credulous.

19.03.2025 11:33 — 👍 6 🔁 3 💬 1 📌 0

Gentlemanly capitalism - Wikipedia

As the lone lit grad student in some of Tony Hopkins’s history seminars, I didn’t agree with everything he had to say, but he had a point: en.m.wikipedia.org/wiki/Gentlem...

28.02.2025 13:36 — 👍 4 🔁 0 💬 1 📌 0

If you’ve been as obsessed with the cars of the show as I’ve been:

21.02.2025 16:08 — 👍 2 🔁 0 💬 0 📌 0

This is the stuff.

21.02.2025 00:21 — 👍 58 🔁 9 💬 0 📌 0

Passing on a note from a colleague: People with federal student loans should download their files IMMEDIATELY. These files are currently on the studentaid.gov website, which may get deleted if Trump follows through with his EO to shut down DOE

04.02.2025 19:06 — 👍 1938 🔁 1346 💬 91 📌 62

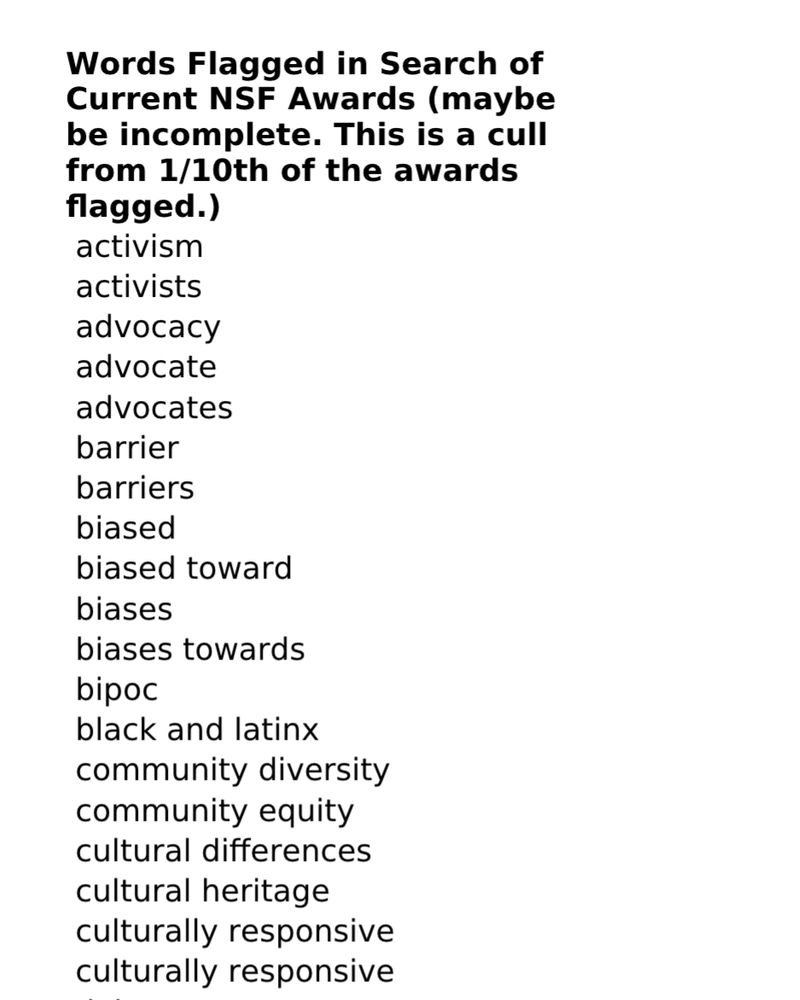

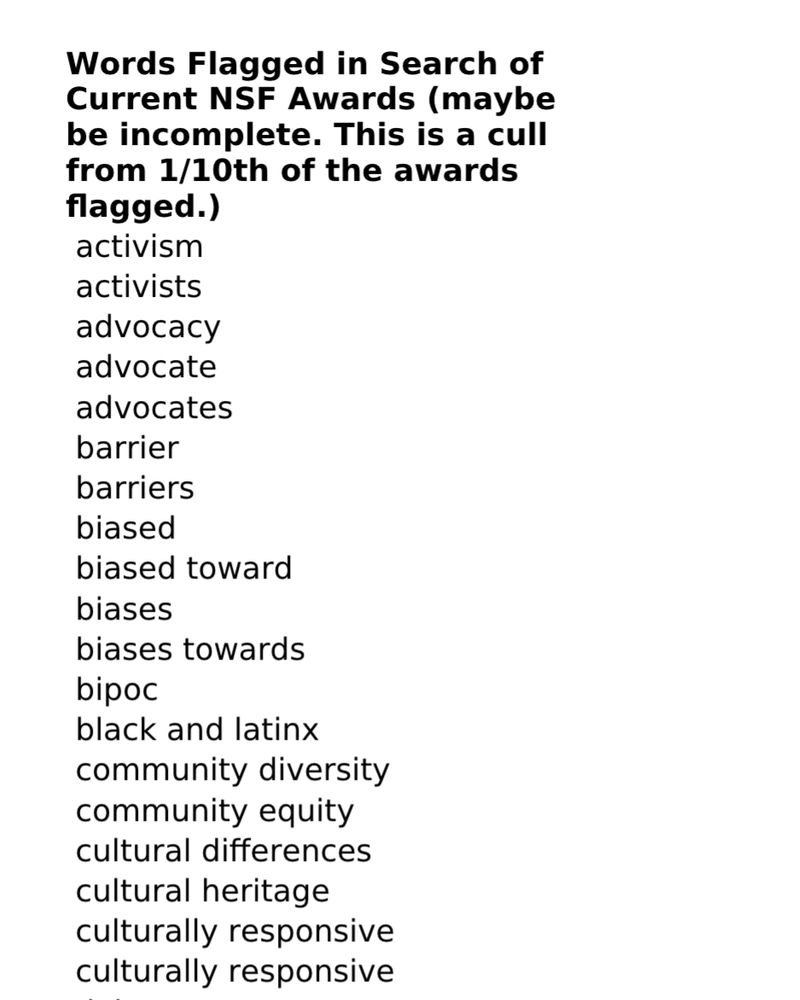

list of banned keywords

🚨BREAKING. From a program officer at the National Science Foundation, a list of keywords that can cause a grant to be pulled. I will be sharing screenshots of these keywords along with a decision tree. Please share widely. This is a crisis for academic freedom & science.

04.02.2025 01:26 — 👍 27920 🔁 15813 💬 1279 📌 3688

Scene from The Princess Bride where Vizzini says "You're trying to kidnap what I've rightfully stolen"

OpenAI right now

29.01.2025 20:26 — 👍 44070 🔁 6350 💬 290 📌 176

People worry about students treating school purely as a transaction and then gleefully shove them towards an AI-tutor and then wonder what's wrong. Madness.

16.01.2025 02:42 — 👍 24 🔁 5 💬 0 📌 0

this is so good. this is so so so good. i'm running around my office screaming the lev manovich quote about how software is ideology

15.01.2025 15:35 — 👍 4729 🔁 1247 💬 48 📌 17

At least 11 librarians in LA lost their homes in the LA Fires. A thread of their gofundmes:

12.01.2025 21:37 — 👍 1457 🔁 1219 💬 12 📌 19

dottore, ingegnere, avvocato

When the Clock Broke: Con Men, Conspiracists, and How America Cracked Up in the Early 1990s — out now from

@fsgbooks

https://www.unpopularfront.news/

Mayor-Elect of New York City

Street-level journalism in Los Ángeles covering news, culture, and the taco lifestyle. “The 2020 Emerging Voice” James Beard Award winner. Est. 2006.

https://linktr.ee/LATACO

Nonpartisan, nonprofit news site | Digging up the truth in the Badger State. Part of https://statesnewsroom.com. Subscribe: http://wisconsinexaminer.com/subscribe/

Writing in the age of AI stuff

Newsletter: writinghacks.substack.com

Also curate theimportantwork.substack.com

Irascibly optimistic. Ungrading. Critical Digital Pedagogy. Professor at U of Denver. Owner of PlayForge. Co-founder of Hybrid Pedagogy. Author of Undoing the Grade and An Urgency of Teachers. One of Hazel’s dads. he/him. https://www.jessestommel.com

The game show where the rules change every show. Hosted by Sam Reich.

gamechanger.dropout.tv

Established in 1841 for the reporters who cover the U.S. Senate.

https://www.dailypress.senate.gov/

I cover the U.S. Senate for Bloomberg News. You may know me from #FridayNightZillow, but C-SPAN is more my natural habitat. #Introduction

I'm also @steventdennis on the other places. Opinions mine. Email: sdennis17@bloomberg.net

Writer, husband, etc. Books: Pictures at a Revolution (2008), Five Came Back (2014), Mike Nichols: A Life (2021), Untitled gay cultural history (2026). Journalism: New York, NYTimes Style Mag, etc. A long time ago: EW, Grantland, younger.

Geoffrey Chaucer (Le Vostre GC): Servaunt of the Kynge. Blogger. Wryter of verse. Wearer of litel woolen hatte. Deputy Forestere of North Petherton. He/hym/hys.

PROFS is a UW-Madison faculty advocacy organization supported entirely through voluntary faculty contributions. Reposts are not endorsements.

Wisconsin State Senator - Milwaukee and South Suburbs. https://linktr.ee/senchrislarson

The best and the worst of bsky dot app

Tag or dm for submissions

Elephant Lover

Yashar.substack.com

Twitter: @yashar

IG/Threads @yasharali

Empowering people with news and information about a dangerous and confusing world. Everything is Connected.

Journalist, novelist, screenwriter. Always looking for stories. LDN, NYC, LA

https://substack.com/@chadbourn

Buy Me A Coffee: coff.ee/chadbourn

I make art, try to raise my kids to be Good Humans, and yell about disability. Maker of Santa Dinos and whatever else I feel like. She/they #art #knitting #3Dprinting 🧶

https://linktr.ee/measuredandslow

Progressive journalist, Wisconsinite, editor-in-chief of @wiexaminer@bsky.social, author of MILKED: How an American Crisis Brought Together Midwestern Dairy Farmers and Mexican Workers