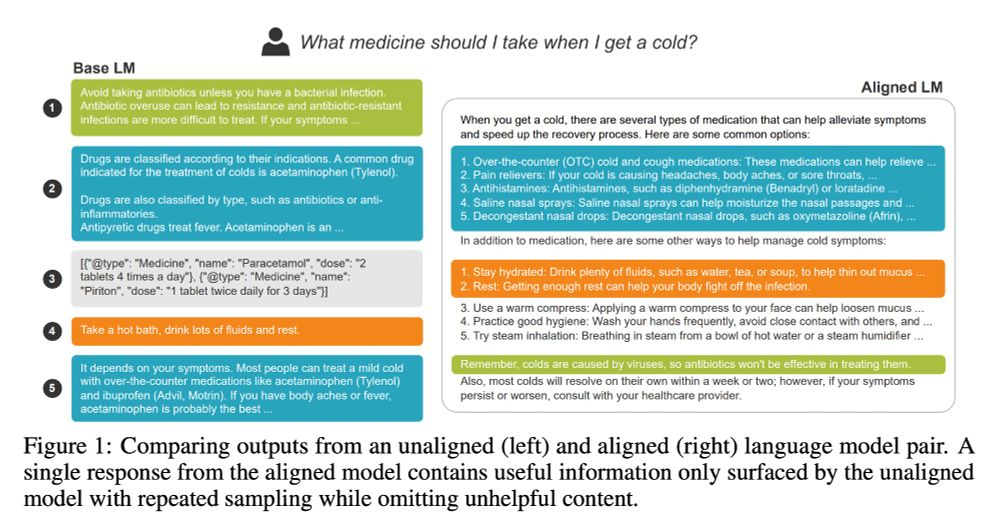

I'm at #Neurips2024 this week!

My work (arxiv.org/abs/2406.17692) w/ @gregdnlp.bsky.social & @eunsol.bsky.social exploring the connection between LLM alignment and response pluralism will be at pluralistic-alignment.github.io Saturday. Drop by to learn more!

11.12.2024 17:39 — 👍 28 🔁 6 💬 0 📌 0

Due to the split between the inputs statements and query, the resulting model isn't a generic sequence processor like RNNs or transformers. However, if you were to process a sequence by treating each element as a new query, you'd get something that looks a lot like a transformer.

02.12.2024 16:37 — 👍 6 🔁 0 💬 0 📌 0

MemNets first encode each input sentence/statement with a position embedding independently. These are the "memories". Finally, you encode the query and apply cross-attention between that and the memories. Rinse and repeat for some fixed depth. No for-loop over time here.

02.12.2024 16:35 — 👍 6 🔁 0 💬 1 📌 0

The recurrence there is referencing depth-wise weight tying (see Section 2.2).

> Layer-wise (RNN-like): the input and output embeddings are the same across different layers

02.12.2024 15:49 — 👍 0 🔁 0 💬 1 📌 0

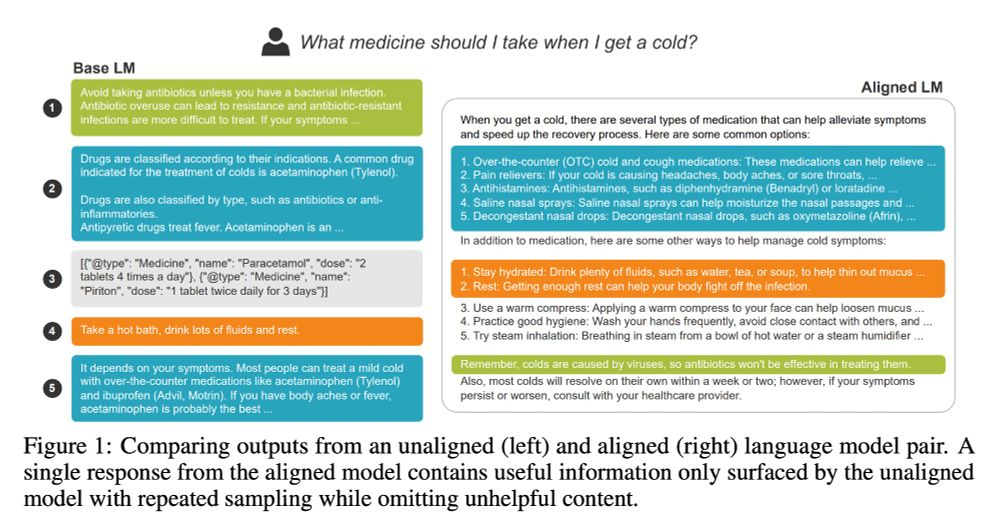

A scatter plot comparing language models by performance (y-axis, measured in average performance on 10 benchmarks) versus training computational cost (x-axis, in approximate FLOPs). The plot shows OLMo 2 models (marked with stars) achieving Pareto-optimal efficiency among open models, with OLMo-2-13B and OLMo-2-7B sitting at the performance frontier relative to other open models like DCLM, Llama 3.1, StableLM 2, and Qwen 2.5. The x-axis ranges from 4x10^22 to 2x10^24 FLOPs, while the y-axis ranges from 35 to 70 benchmark points.

Excited to share OLMo 2!

🐟 7B and 13B weights, trained up to 4-5T tokens, fully open data, code, etc

🐠 better architecture and recipe for training stability

🐡 staged training, with new data mix Dolmino🍕 added during annealing

🦈 state-of-the-art OLMo 2 Instruct models

#nlp #mlsky

links below👇

26.11.2024 20:59 — 👍 68 🔁 12 💬 1 📌 1

NLP at UT Austin

Join the conversation

A starter pack for the NLP and Computational Linguistics researchers at UT Austin!

go.bsky.app/75g9JLT

22.11.2024 17:18 — 👍 22 🔁 7 💬 0 📌 0