Since the renderer is used as a Hydra render delegate, this is the concern of the host application (usdview). I just pass it some CPU memory.

25.01.2026 12:09 — 👍 0 🔁 0 💬 0 📌 0

In general, it was nice getting to know Metal a bit - and I can't wait to write some userland code on my MacBook Air! Btw: the Vulkan backend is ~3.8k LOC and the Metal backend ~2.2k LOC.

18.01.2026 15:52 — 👍 2 🔁 0 💬 0 📌 0

As these functions don't have access to intrinsics, we need to pass them along as part of their signature, which the function tables are parameterized with. Here's an example of how the ray generation shader signature ends up looking with two ray payloads:

18.01.2026 15:52 — 👍 0 🔁 0 💬 1 📌 0

Closest-hit shaders and miss shaders need to be emulated. They are compiled with their entry points being "visible" and are invoked from visible function tables (VFTs) based on the traversal result. For each payload type we have 1x IFT and 2x VFTs.

18.01.2026 15:52 — 👍 0 🔁 0 💬 1 📌 0
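The emulation described in the post above can be pictured with a small sketch. It is written in plain C++ rather than Metal Shading Language, and it is not Gatling's actual code: the function pointer arrays only stand in for visible function tables, and every name (`Intrinsics`, `ShadePayload`, `TraversalResult`, `FunctionTables`, `shade`) is hypothetical. It shows the two ideas from the thread: values that would otherwise come from intrinsics are passed through the function signature, and the traversal result decides whether a closest-hit or a miss function is invoked.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for values a shader would normally read from
// intrinsics; here they are passed explicitly through the signature.
struct Intrinsics {
    std::uint32_t launchIndex;
    float hitDistance;
};

// Hypothetical ray payload; real payloads are defined by the shaders.
struct ShadePayload {
    float radiance;
};

// Hypothetical result of an acceleration structure traversal.
struct TraversalResult {
    bool hit;                    // false selects a miss function
    std::uint32_t hitGroupIndex; // selects the closest-hit function
    float distance;
};

// All functions in one table share a signature, so the table can be
// parameterized with it.
using ShaderFn = void (*)(const Intrinsics&, ShadePayload&);

void closestHitDiffuse(const Intrinsics& in, ShadePayload& p) {
    p.radiance = 1.0f / (1.0f + in.hitDistance);
}
void closestHitEmissive(const Intrinsics&, ShadePayload& p) { p.radiance = 5.0f; }
void missSky(const Intrinsics&, ShadePayload& p) { p.radiance = 0.25f; }

// Two tables for one payload type: closest-hit and miss.
struct FunctionTables {
    std::array<ShaderFn, 2> closestHit;
    std::array<ShaderFn, 1> miss;
};

FunctionTables makeTables() {
    FunctionTables t{};
    t.closestHit = {closestHitDiffuse, closestHitEmissive};
    t.miss = {missSky};
    return t;
}

// Emulated dispatch: pick the table and entry based on the traversal result.
float shade(const FunctionTables& t, const TraversalResult& r, std::uint32_t launchIndex) {
    Intrinsics in{launchIndex, r.distance};
    ShadePayload payload{0.0f};
    if (r.hit) {
        assert(r.hitGroupIndex < t.closestHit.size());
        t.closestHit[r.hitGroupIndex](in, payload);
    } else {
        t.miss[0](in, payload);
    }
    return payload.radiance;
}
```

Calling `shade(makeTables(), TraversalResult{false, 0, 0.0f}, 0)` takes the miss path, while a result with `hit == true` indexes into the closest-hit table instead.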

An important difference between the graphics APIs is that Metal only has 'intersection' shaders that are invoked for non-opaque geometry. This is what I map any-hit shaders to. Their function addresses are stored in an intersection function table (IFT), similar to an SBT.

18.01.2026 15:52 — 👍 0 🔁 0 💬 1 📌 0

On the API side I use metal-cpp, and for shaders I partially implement the GLSL_EXT_ray_tracing extension in SPIRV-Cross. It's a bit hacky and only supports the features I need, but overall it is content-agnostic. Ideally, it's a transitional solution until Slang or KosmicKrisp's compiler can be used.

18.01.2026 15:52 — 👍 3 🔁 0 💬 1 📌 0

Finally finished Gatling's Metal backend! Here's a teaser of NVIDIA's USD / MDL sample scene 'Attic' running on macOS. What's special about the backend is probably that it uses the same GLSL code as the Vulkan backend, complete with hardware ray tracing. How does that work?

18.01.2026 15:52 — 👍 15 🔁 2 💬 2 📌 0

Notes On Motion Blur Rendering

Introduction

Blog post on motion blur rendering (which is impressively thorough) by Alex Gauggel

gaukler.github.io/2025/12/09/n...

10.12.2025 22:34 — 👍 13 🔁 6 💬 0 📌 0

This scene from WireWheelsClub looks like this out of the box - no editing was required.

Performance is not a priority right now, but it is good enough with this simple lighting on an RTX 2060 @ WQHD.

07.12.2025 22:47 — 👍 0 🔁 0 💬 0 📌 0

There are still some minor issues to resolve, including getting rid of static state (for multiple viewports) and implementing support for orthographic cameras.

07.12.2025 22:47 — 👍 0 🔁 0 💬 1 📌 0

Spent some time this weekend updating Gatling’s Blender integration to the latest 5.0 release.

07.12.2025 22:47 — 👍 1 🔁 0 💬 1 📌 0

A cloud rendered using jackknife transmittance estimation and the formula used to do so.

Ray marching is a common approach to GPU-accelerated volume rendering, but gives biased transmittance estimates. My new #SIGGRAPHAsia paper (+code) proposes an amazingly simple formula to eliminate this bias almost completely without using more samples.

momentsingraphics.de/SiggraphAsia...

30.10.2025 13:23 — 👍 139 🔁 28 💬 4 📌 0

What I’m working on right now is a Metal backend for Gatling. The idea is to use metal-cpp with GLSL shaders, by forking SPIRV-Cross to support ray tracing pipelines :) (very WIP)

12.10.2025 13:50 — 👍 1 🔁 0 💬 0 📌 0

Btw, a few weeks ago I released a small plugin for usdview. It allows inspection of UsdShade networks using a custom Qt node graph. It’s open source, lightweight and simple to install.

12.10.2025 13:36 — 👍 3 🔁 0 💬 1 📌 0

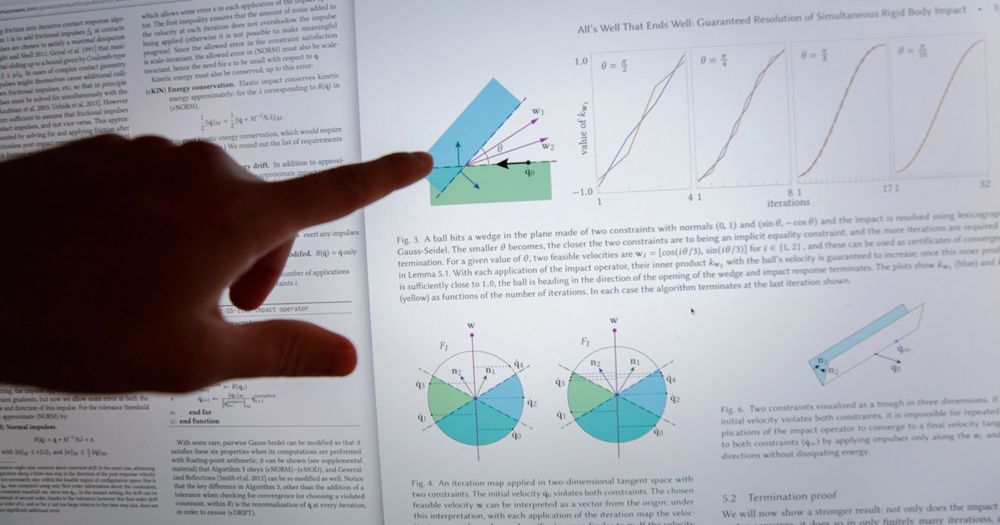

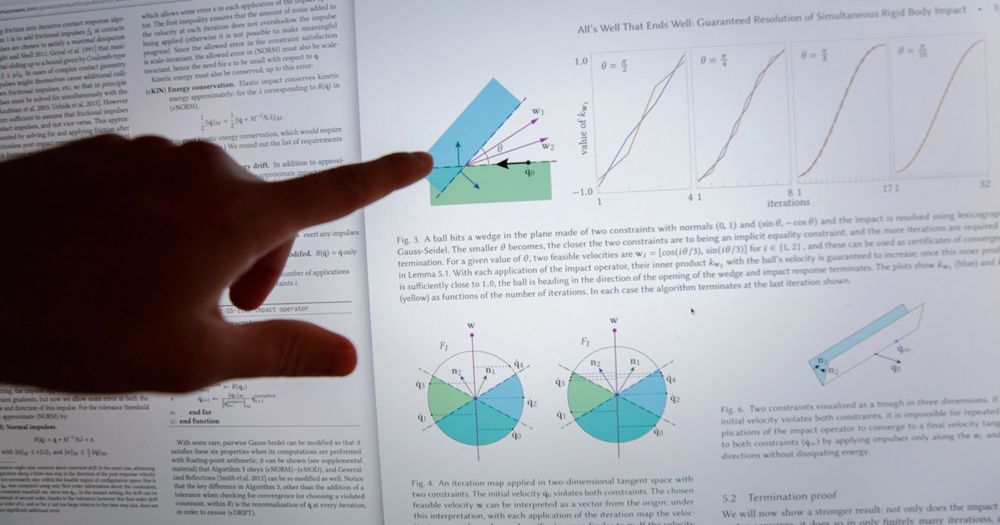

The Pixar RenderMan team has a paper out at HPG 2025 this week about the architecture of RenderMan XPU. There are a lot of interesting details in the paper; it's definitely a worthwhile read!

diglib.eg.org/bitstreams/d...

21.06.2025 23:24 — 👍 28 🔁 5 💬 1 📌 0

Source code on GitHub: github.com/pablode/cg-a...

28.06.2025 16:49 — 👍 0 🔁 0 💬 0 📌 0

Small weekend experiment: ported an LCD shader from Blender to #MaterialX (originally authored by PixlFX)

28.06.2025 16:49 — 👍 1 🔁 0 💬 1 📌 0

Walt Disney Animation Studios - Publications

We engage with the global research community through collaborations with universities around the world, publications in academic journals and participation in top-tier conferences.

We just posted a recording of the presentation I gave at DigiPro last year on Hyperion's many-lights sampling system. It's on the long side (30 min) but hopefully interesting if you like light transport! Check it out on Disney Animation's website:

www.disneyanimation.com/publications...

19.03.2025 05:42 — 👍 48 🔁 10 💬 1 📌 0

Would be nice if 2DGS were to win the race. Much simpler to render 😛

11.03.2025 17:55 — 👍 1 🔁 0 💬 0 📌 0

YouTube video by Oliver Markowski

2D Gaussian Ray-Tracing is the future!

www.youtube.com/watch?v=BUpD...

11.03.2025 17:55 — 👍 1 🔁 0 💬 1 📌 0

Implemented USD point instancer primvar support in my toy renderer over the last few days. It can now render this 2D Gaussian Splatting (2DGS) scene with a single mesh & MaterialX material.

(Created by Oliver Markowski with Houdini, see link below ⬇️)

11.03.2025 17:55 — 👍 5 🔁 1 💬 1 📌 0

Three different examples of the Chiang Hair BSDF in MaterialX v1.39.2, rendered in NVIDIA RTX.

Highlighting one of the key contributions in MaterialX v1.39.2, Masuo Suzuki at NVIDIA contributed the Chiang Hair BSDF, seen below in NVIDIA RTX, which opens the door to the authoring of cross-platform, customizable hair shading models in MaterialX and OpenUSD.

github.com/AcademySoftw...

21.01.2025 19:22 — 👍 20 🔁 4 💬 1 📌 0

Tools for GPU Codec Development – Ignacio Castaño

Wrote a blog post about my development process and the tools I’ve built to develop the Spark codecs:

ludicon.com/castano/blog...

04.01.2025 08:46 — 👍 33 🔁 10 💬 2 📌 0

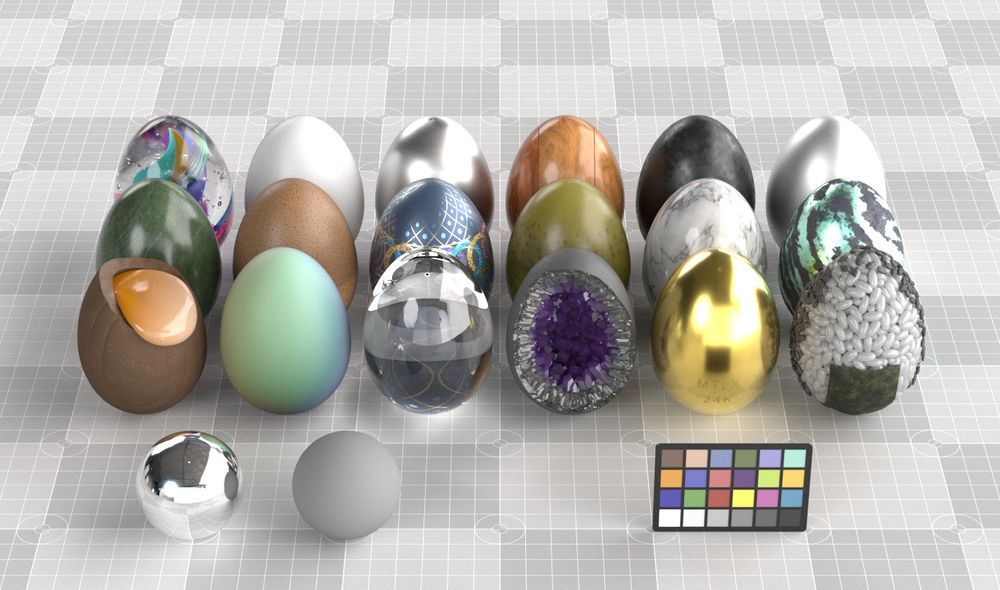

Lastly, implemented proper support for AOVs including those used for picking (primId, instanceId, elementId). (asset: standard shader ball) (4/4)

03.01.2025 11:15 — 👍 0 🔁 0 💬 0 📌 0

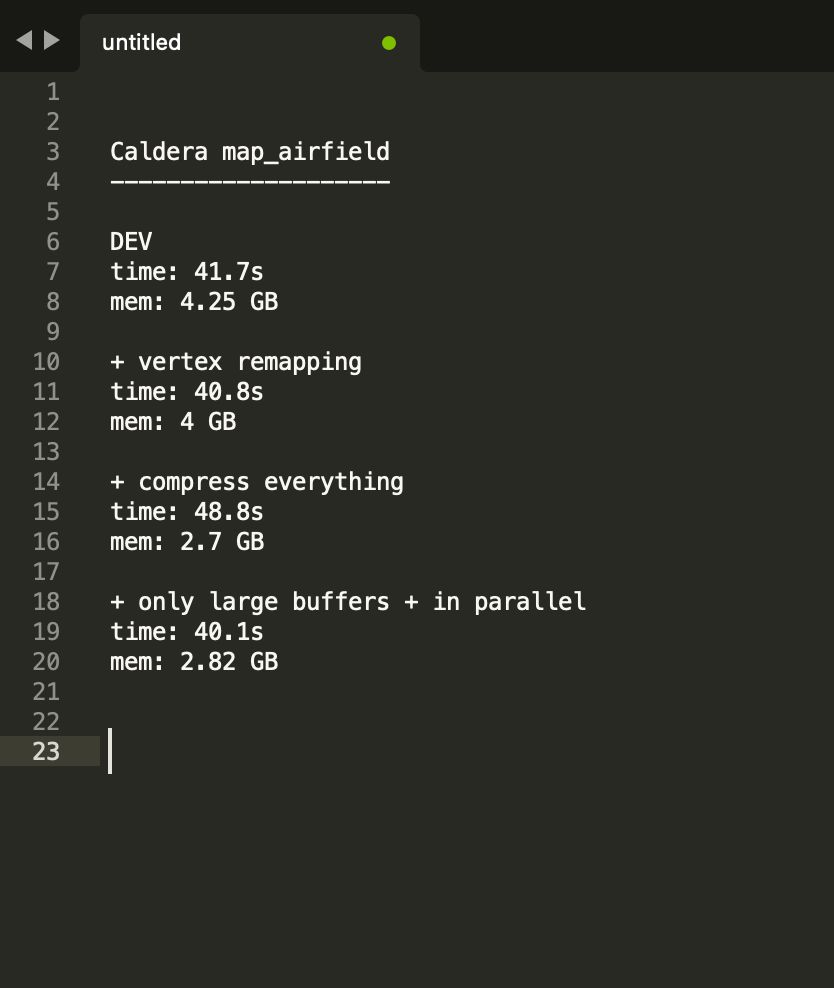

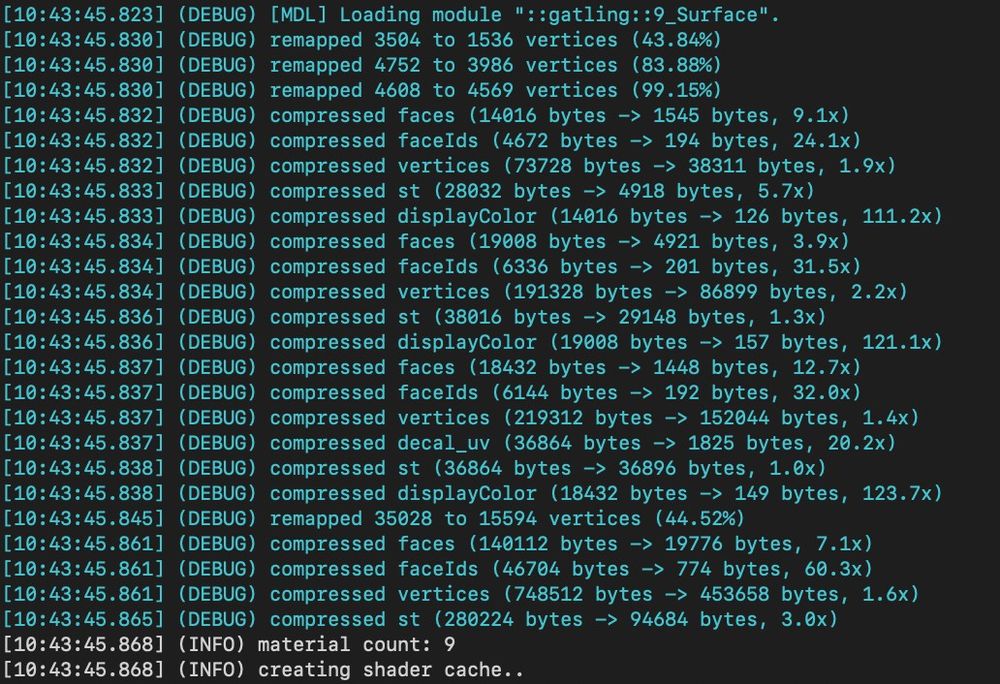

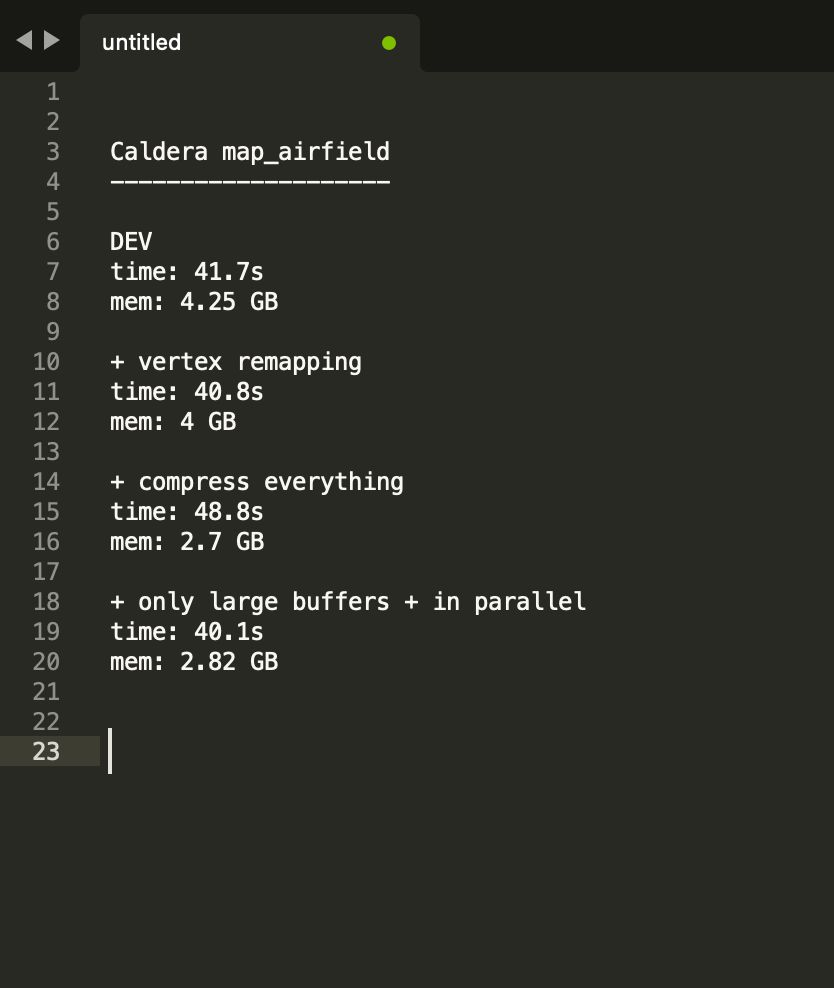

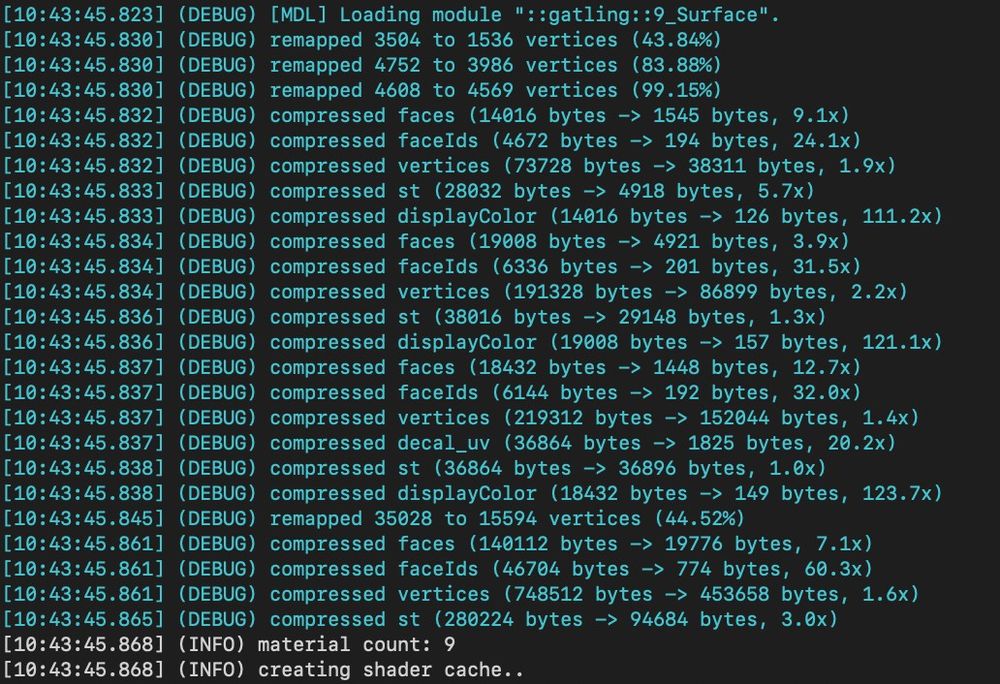

To reduce the memory footprint, I first run the geometry through meshoptimizer which culls and deduplicates vertices. Next, the data is compressed with blosc. The results are quite good. Decompression only happens when a BLAS is rebuilt. (3/4)

03.01.2025 11:15 — 👍 0 🔁 0 💬 1 📌 0
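As a rough illustration of the deduplication step mentioned in the post above, here is a minimal C++ sketch. It is not meshoptimizer's implementation or API and does not cover culling of unused vertices or the blosc compression; the names `Vertex`, `IndexedMesh` and `deduplicate` are hypothetical. It turns a triangle soup into a list of unique vertices plus an index buffer, which is where the memory saving comes from when vertices are shared between triangles.

```cpp
#include <array>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical vertex layout: position only.
using Vertex = std::array<float, 3>;

struct IndexedMesh {
    std::vector<Vertex> vertices;        // unique vertices only
    std::vector<std::uint32_t> indices;  // one entry per input vertex
};

// Replace repeated vertices with indices into a list of unique vertices.
// Vertices are compared exactly; no tolerance-based welding is attempted.
IndexedMesh deduplicate(const std::vector<Vertex>& input) {
    IndexedMesh mesh;
    std::map<Vertex, std::uint32_t> seen;
    for (const Vertex& v : input) {
        const auto nextIndex = static_cast<std::uint32_t>(mesh.vertices.size());
        auto [it, inserted] = seen.try_emplace(v, nextIndex);
        if (inserted) {
            mesh.vertices.push_back(v);
        }
        mesh.indices.push_back(it->second);
    }
    return mesh;
}
```

For two triangles that share an edge, six input vertices collapse to four unique ones, with the shared vertices referenced twice in the index buffer.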

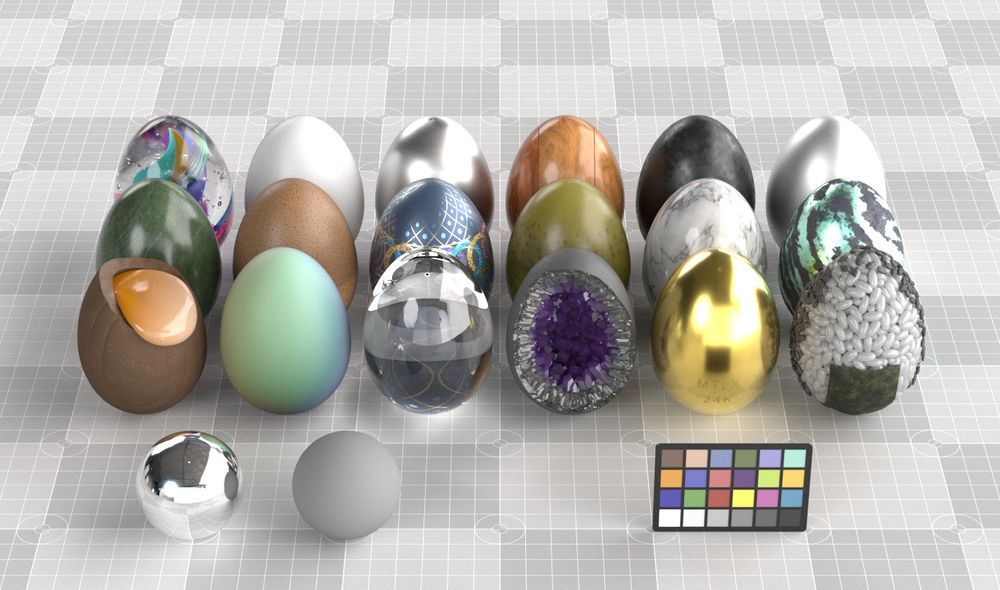

Furthermore, I implemented #MaterialX geompropvalues (MDL scene data) and GeomSubsets. This required quite a bit of code rewriting, as I now store a copy of the CPU geometry data in the renderer itself. Here’s a render of stehrani3d’s MaterialEggs, which make use of these features (2/4)

03.01.2025 11:15 — 👍 1 🔁 0 💬 1 📌 0
