LLMs' sycophancy issues are a predictable result of optimizing for user feedback. Even if the obvious sycophantic behaviors get fixed, AIs' exploitation of our cognitive biases may only become more subtle.

Grateful our research on this was featured in @washingtonpost.com by @nitasha.bsky.social!

01.06.2025 18:25

First page of the paper Influencing Humans to Conform to Preference Models for RLHF, by Hatgis-Kessell et al.

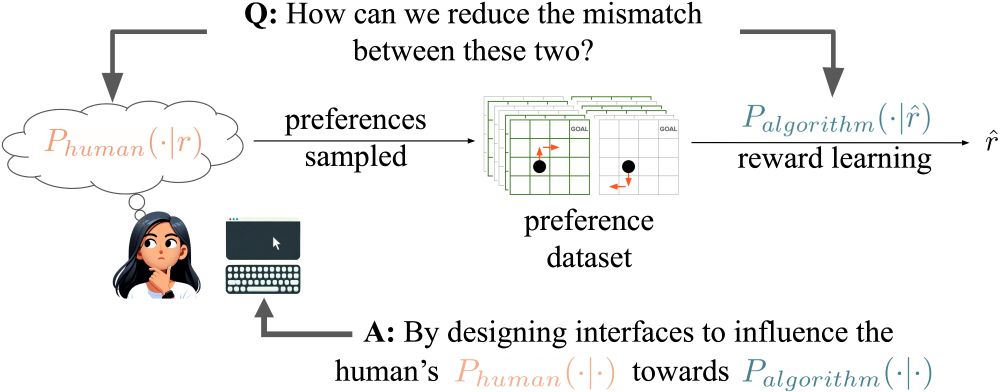

Our proposed method of influencing human preferences.

RLHF algorithms assume humans generate preferences according to normative models. We propose a new method for model alignment: influence humans to conform to these assumptions through interface design. Good news: it works!

#AI #MachineLearning #RLHF #Alignment (1/n)

14.01.2025 23:51