Causal inference for psychologists who think that causal inference is not for them

Correlation does not imply causation and psychologists' causal inference training often focuses on the conclusion that therefore experiments are needed—without much consideration for the causal infer...

You need to bring in the same toolkit as in studies that try to establish causality without randomization.

I know it sounds unfair, but I don’t make the rules. These situations are instances of post-treatment bias, if you want to read up on it as a psychologist:
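To make post-treatment bias concrete, here is a minimal sketch with a made-up toy population (the variables `t`, `u`, `m`, `y` and all numbers are assumptions for illustration, not taken from any study). Treatment is randomized and has no effect on the outcome, yet conditioning on a variable that is affected by treatment produces a non-zero "effect".

```python
from itertools import product

# Hypothetical toy population: every (treatment, unobserved trait) cell is
# equally common, which is what randomizing the treatment gives in expectation.
population = []
for t, u in product([0, 1], [0, 1]):
    m = 1 if (t == 1 or u == 1) else 0  # post-treatment variable, affected by T and U
    y = 2 * u                           # outcome depends on U only: true effect of T is 0
    population.append({"t": t, "u": u, "m": m, "y": y})


def mean_difference(rows):
    """Mean outcome of treated units minus mean outcome of control units."""
    treated = [r["y"] for r in rows if r["t"] == 1]
    control = [r["y"] for r in rows if r["t"] == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)


# Comparison in the full randomized sample: unbiased.
effect_all = mean_difference(population)

# Comparison after selecting on the post-treatment variable: biased.
effect_given_m = mean_difference([r for r in population if r["m"] == 1])

print(effect_all)      # 0.0
print(effect_given_m)  # -1.0
```

Within the `m == 1` stratum, treated and control units differ on the unobserved trait `u`, so randomization no longer protects the comparison.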

25.02.2026 17:32 —


Speaking truth to power

21.02.2026 04:43 —


A more user-friendly t-test, regression, variable description, frequency plots, and more.

datacolada.org/132

16.02.2026 14:45 —


🚨SOLUTIONS🚨

Desk reject more stuff with actionable feedback.

Don’t request second reviews

Build larger editorial boards of volunteers

Wait to submit your work until it’s ready, a.k.a. don’t send in your half-baked trash hoping for feedback

6/7

06.02.2026 12:59 —


I’ve thanked people for spending the time to give me comments

06.02.2026 02:29 —


After years in academia, I’m exploring data science and research roles in industry.

I'm a quant. social scientist (PhD Yale ’24, NYU) focused on causal inference, experiments, and large-scale data.

Feel free to get in touch or share; all leads appreciated. dwstommes@gmail.com

27.01.2026 18:45 —


We believe that to improve practices, some fundamental rethinking of what we consider a publishable scientific contribution may be necessary. Currently, researchers may feel pressured to do “everything” in a single article—summarize and synthesize the existing literature, suggest a new theory or at least modify an existing one, hypothesize moderation and/or mediation, and provide (preferably positive) empirical evidence through statistical analyses that they run themselves, maybe even across multiple studies they conducted themselves. It is perhaps unsurprising that they end up cutting corners when it comes to causal inference—a hard topic, for which psychologists often receive little training—and rely on out-of-the-box statistical models.

This quote also reminds me of something that we wrote in our paper on path analysis (journals.sagepub.com/doi/10.1177/...). People are just expecting *way* too much of a single study, literally new discoveries exceeding Gregor Mendel's.

28.01.2026 12:00 —


How about Don Campbell and his collaborators, who invented regression discontinuity among other things?

30.01.2026 02:39 —


RegCheck

RegCheck is an AI tool to compare preregistrations with papers instantly.

Comparing registrations to published papers is essential to research integrity - and almost no one does it routinely because it's slow, messy, and time-demanding.

RegCheck was built to help make this process easier.

Today, we launch RegCheck V2.

🧵

regcheck.app

22.01.2026 11:05 —


Back in 2017-18, a friend told me that Yale SOM banned laptops in MBA classes

19.01.2026 17:13 —


For better learning in college lectures, lay down the laptop and pick up a pen | Brookings

Susan Dynarski examines the evidence that students learn better if they aren't using their laptops during lectures.

My syllabi have a footnote recommending the same 2017 @dynarski.bsky.social review that @gregsasso.bsky.social shared. This semester I also looked at Nicholas Decker's recent blog post

www.brookings.edu/articles/for...

nicholasdecker.substack.com/p/should-we-...

19.01.2026 17:12 —


Rosenbaum, Observation and Experiment

16.01.2026 02:58 —


Some people bring up (1) the cost of criticism and (2) that a lot of criticism has already been voiced but ignored. Both points are valid, so here are some suggestions for (1) reducing backlash and (2) increasing impact (from this talk of mine: juliarohrer.com/wp-content/u...)

08.01.2026 07:28 —


Citations always needed checking! Just as one example, I used to see my sole-authored 2013 paper cited as “Lin et al” coz Google Scholar’s bib had an error :)

27.12.2025 16:12 —


Will you incorporate LLMs and AI prompting into the course in the future?

No.

Why won’t you incorporate LLMs and AI prompting into the course?

These tools are useful for coding (see this for my personal take on this).

However, they’re only useful if you know what you’re doing first. If you skip the learning-the-process-of-writing-code step and just copy/paste output from ChatGPT, you will not learn. You cannot learn. You cannot improve. You will not understand the code.

That post warns that you cannot use it as a beginner:

…to use Databot effectively and safely, you still need the skills of a data scientist: background and domain knowledge, data analysis expertise, and coding ability.

There is no LLM-based shortcut to those skills. You cannot LLM your way into domain knowledge, data analysis expertise, or coding ability.

The only way to gain domain knowledge, data analysis expertise, and coding ability is to struggle. To get errors. To google those errors. To look over the documentation. To copy/paste your own code and adapt it for different purposes. To explore messy datasets. To struggle to clean those datasets. To spend an hour looking for a missing comma.

This isn’t a form of programming hazing, like “I had to walk to school uphill both ways in the snow and now you must too.” It’s the actual process of learning and growing and developing and improving. You’ve gotta struggle.

This Tumblr post puts it well (it’s about art specifically, but it applies to coding and data analysis too):

Contrary to popular belief the biggest beginner’s roadblock to art isn’t even technical skill it’s frustration tolerance, especially in the age of social media. It hurts and the frustration is endless but you must build the frustration tolerance equivalent to a roach’s capacity to survive a nuclear explosion. That’s how you build on the technical skill. Throw that “won’t even start because I’m afraid it won’t be perfect” shit out the window. Just do it. Just start. Good luck. (The original post has disappeared, but here’s a reblog.)

It’s hard, but struggling is the only way to learn anything.

You might not enjoy code as much as Williams does (or I do), but there’s still value in maintaining coding skills as you improve and learn more. You don’t want your skills to atrophy.

As I discuss here, when I do use LLMs for coding-related tasks, I purposely throw as much friction into the process as possible:

To avoid falling into over-reliance on LLM-assisted code help, I add as much friction into my workflow as possible. I only use GitHub Copilot and Claude in the browser, not through the chat sidebar in Positron or Visual Studio Code. I treat the code it generates like random answers from StackOverflow or blog posts and generally rewrite it completely. I disable the inline LLM-based auto complete in text editors. For routine tasks like generating {roxygen2} documentation scaffolding for functions, I use the {chores} package, which requires a bunch of pointing and clicking to use.

Even though I use Positron, I purposely do not use either Positron Assistant or Databot. I have them disabled.

So in the end, for pedagogical reasons, I don’t foresee me incorporating LLMs into this class. I’m pedagogically opposed to it. I’m facing all sorts of external pressure to do it, but I’m resisting.

You’ve got to learn first.

Some closing thoughts for my students this semester on LLMs and learning #rstats datavizf25.classes.andrewheiss.com/news/2025-12...

09.12.2025 20:17 —


I think of the 2019 Nobel as the 2nd wave of the experimental part of the credibility revolution. Ashenfelter, Card, & LaLonde’s work led to major job training RCTs in the US, and Angrist was one of Duflo’s advisors. Ashenfelter has a nice speech on the early history

legacy.iza.org/en/webconten...

05.12.2025 03:43 —


Gentle reminder that a correlation coefficient isn’t a particularly great way to quantify the effect of a dichotomous treatment. See also

www.the100.ci/2025/07/28/w...
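One way to see the problem, as a minimal sketch with made-up data (the helper names and the effect size of 1.0 are assumptions for illustration): the same treatment effect yields very different correlation coefficients depending on how many units happen to be treated, while the mean difference stays put.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation, written out so the sketch needs no extra libraries."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def summarize(n_treated, n_control, effect=1.0):
    """Build a hypothetical study and return (mean difference, correlation)."""
    base = [-1.0, 0.0, 1.0]  # same outcome spread in both arms
    control = [base[i % 3] for i in range(n_control)]
    treated = [base[i % 3] + effect for i in range(n_treated)]
    treatment = [0] * n_control + [1] * n_treated
    outcome = control + treated
    diff = sum(treated) / n_treated - sum(control) / n_control
    return diff, pearson_r(treatment, outcome)


diff_balanced, r_balanced = summarize(n_treated=300, n_control=300)
diff_imbalanced, r_imbalanced = summarize(n_treated=30, n_control=570)

print(diff_balanced, round(r_balanced, 2))
print(diff_imbalanced, round(r_imbalanced, 2))
```

In this toy setup the mean difference is 1.0 in both designs, but the correlation roughly halves when only 5% of units are treated, even though the treatment works exactly as well.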

24.11.2025 07:23 —


from Amrhein, Greenland, & McShane ("Retire statistical significance," Nature, 2019)

"For example, the authors above could have written: ‘Like a previous study, our results suggest a 20% increase in risk of new-onset atrial fibrillation in patients given the anti-inflammatory drugs. Nonetheless, a risk difference ranging from a 3% decrease, a small negative association, to a 48% increase, a substantial positive association, is also reasonably compatible with our data, given our assumptions.’ "

from Amrhein, Greenland, & McShane ("Retire statistical significance," Nature, 2019)

"Whatever the statistics show, it is fine to suggest reasons for your results, but discuss a range of potential explanations, not just favoured ones. Inferences should be scientific, and that goes far beyond the merely statistical. Factors such as background evidence, study design, data quality and understanding of underlying mechanisms are often more important than statistical measures such as P values or intervals."

I like this from @vamrhein.bsky.social et al. I assigned it to my class last semester and tried to explain that p-values measure how compatible (vs. surprising) the data are with the null, given our assumptions. But yeah, tests & CIs are hard to understand!

www.blakemcshane.com/Papers/natur...
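A rough worked example of how the interval and the p-value carry the same information. This is a sketch under assumptions: it reads the quoted percentages as a ratio of 1.20 with a 95% interval of 0.97 to 1.48, and uses a normal approximation on the log scale. The variable names are made up.

```python
from math import log
from statistics import NormalDist

# Assumed inputs, read off the quoted percentages (20% increase, 3% decrease, 48% increase).
ratio, lower, upper = 1.20, 0.97, 1.48

std_normal = NormalDist()
z_crit = std_normal.inv_cdf(0.975)  # about 1.96 for a 95% interval

# Back out the standard error of the log ratio from the interval width.
se_log = (log(upper) - log(lower)) / (2 * z_crit)

# Test statistic and two-sided p-value against a null ratio of 1.
z = log(ratio) / se_log
p_value = 2 * (1 - std_normal.cdf(abs(z)))

print(f"z = {z:.2f}, p = {p_value:.3f}")
```

Under these assumptions the p-value comes out at about 0.09: above the conventional 0.05 cutoff, yet the interval still includes a substantial increase, which is the point of describing the whole range of compatible values.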

22.11.2025 18:31 —


The Only Three Reasons to Do a Ph.D. in the Social Sciences

If none are true, don’t do it.

Are you or one of your students considering doing a Ph.D. in a social science? I've spent a lot of time talking about this w/ students & finally wrote something up.

IMO, there are only 3 good reasons to do it. One of them needs to be true--otherwise, don't.

medium.com/the-quantast...

24.09.2025 16:32 —


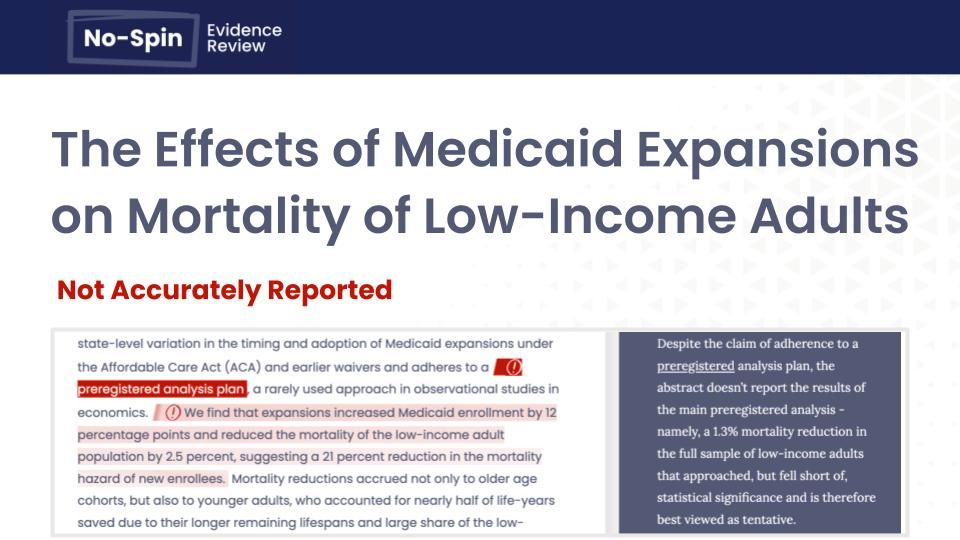

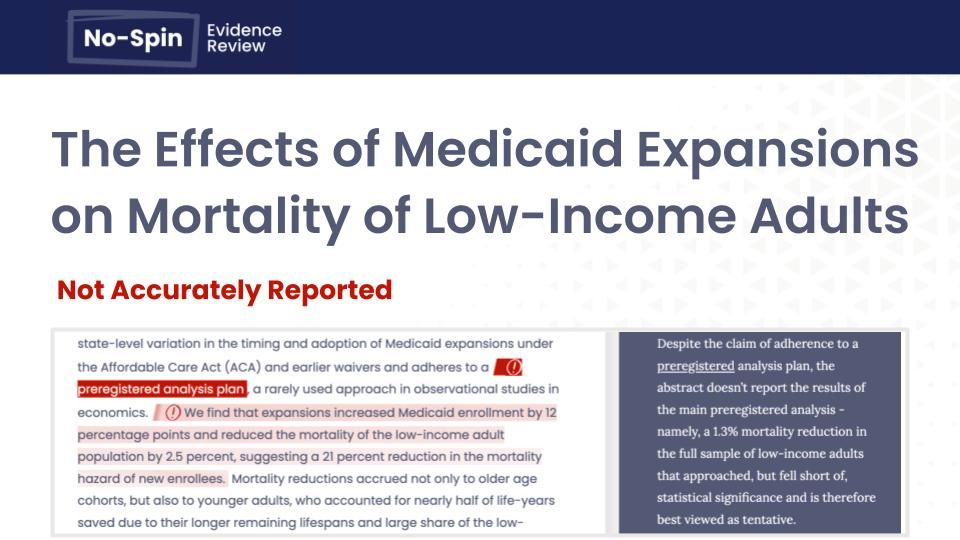

See our No-Spin report on a widely-covered NBER study of Medicaid expansion. In brief: Despite the abstract's claims that expansion reduced adult mortality 2.5%, the study found much smaller effects that fell short of statistical significance in its main preregistered analysis.🧵

11.06.2025 17:38 —


Starting to look like I might not be able to work at Harvard anymore due to recent funding cuts. If you know of any open statistical consulting positions that support remote work or are NYC-based, please reach out! 😅

04.06.2025 19:02 —


In case this is of interest, even ANCOVA I is consistent and asymptotically normal in completely randomized experiments (though II is asymptotically more efficient in imbalanced or multiarm designs)
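A small simulation sketch of that claim, under stated assumptions: "ANCOVA I" is taken to mean OLS of the outcome on treatment and a covariate, "ANCOVA II" adds the treatment-by-centered-covariate interaction, and the data-generating process (20% treated, effect varying with the covariate, average effect of 1) is invented for illustration.

```python
import random
from statistics import mean, pvariance


def arm_summary(xs, ys):
    """Means plus the cross-product and sum of squares needed for an OLS slope."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return mx, my, sxy, sxx


def ancova_estimates(x, y, t):
    """Closed-form treatment coefficients for ANCOVA I and ANCOVA II (one covariate)."""
    x1 = [xi for xi, ti in zip(x, t) if ti == 1]
    y1 = [yi for yi, ti in zip(y, t) if ti == 1]
    x0 = [xi for xi, ti in zip(x, t) if ti == 0]
    y0 = [yi for yi, ti in zip(y, t) if ti == 0]
    mx1, my1, sxy1, sxx1 = arm_summary(x1, y1)
    mx0, my0, sxy0, sxx0 = arm_summary(x0, y0)
    mx = mean(x)
    # ANCOVA I: one common slope, pooled within arms.
    b = (sxy1 + sxy0) / (sxx1 + sxx0)
    tau_1 = (my1 - my0) - b * (mx1 - mx0)
    # ANCOVA II: arm-specific slopes, both arms predicted at the overall covariate mean.
    b1, b0 = sxy1 / sxx1, sxy0 / sxx0
    tau_2 = (my1 + b1 * (mx - mx1)) - (my0 + b0 * (mx - mx0))
    return tau_1, tau_2


def simulate(reps=1000, n=200, n_treated=40, seed=1):
    """Repeat a completely randomized experiment and collect both estimates."""
    rng = random.Random(seed)
    est_1, est_2 = [], []
    for _ in range(reps):
        x = [rng.gauss(0, 1) for _ in range(n)]
        treated = set(rng.sample(range(n), n_treated))
        t = [1 if i in treated else 0 for i in range(n)]
        # Hypothetical outcomes: the effect is 1 + 3x, so it averages 1 when x has mean 0.
        y = [xi + ti * (1 + 3 * xi) + rng.gauss(0, 1) for xi, ti in zip(x, t)]
        tau_1, tau_2 = ancova_estimates(x, y, t)
        est_1.append(tau_1)
        est_2.append(tau_2)
    return est_1, est_2


est_1, est_2 = simulate()
print("ANCOVA I : mean", round(mean(est_1), 2), "variance", round(pvariance(est_1), 3))
print("ANCOVA II: mean", round(mean(est_2), 2), "variance", round(pvariance(est_2), 3))
```

In this imbalanced design with heterogeneous effects, both estimators should center near the average effect of 1, with ANCOVA II showing the smaller variance across replications.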

05.05.2025 19:31 —


Issues with interpreting p-values haunt even AI, which is prone to the same biases as human researchers. ChatGPT, Gemini & Claude all fall prey to "dichotomania" - treating p=0.049 & p=0.051 as categorically different, and paying too much attention to significance. www.cambridge.org/core/journal...

21.04.2025 16:51 —
