8/N Qualitatively, the solution generator's reasoning trace makes meaningful use of references to the abstraction (“cheatsheet”) and of its keywords (highlighted in cyan), showing that abstractions can elicit strategies.

03.10.2025 19:33

7/N We also analyze the abstractions and solutions that RLAD generates. Compared to baselines, RLAD produces solutions with greater semantic diversity across different abstractions (left) and higher adherence to the abstraction (right).

03.10.2025 19:33

6/N The abstraction generator also shows weak-to-strong generalization: when we swap the solution generator for o4-mini (24K token budget), conditioning on abstractions consistently yields higher pass@k accuracy than conditioning on the question alone.
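The post in 6/N does not say how pass@k is computed. A common choice is the unbiased estimator 1 - C(n - c, k) / C(n, k), computed from n samples of which c are correct. Below is a minimal sketch assuming that estimator. The function name `pass_at_k` is hypothetical and not taken from RLAD's code.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Hypothetical helper: unbiased pass@k from n samples, c of them correct.

    Assumes the standard estimator 1 - C(n - c, k) / C(n, k), i.e. the
    probability that at least one of k samples drawn without replacement
    from the n generated samples is correct.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset must contain at least one correct sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# 16 samples with 4 correct gives pass@1 = 0.25.
print(pass_at_k(16, 4, 1))
```

To compare abstraction-conditioned and question-only sampling, compute this per problem for each setting and average across the benchmark.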

03.10.2025 19:33

5/N On AIME 2025, as inference compute grows, it becomes more efficient to spend more of the budget on generating abstractions than on generating solutions, and this holds across all normalization offsets 𝑘₀. Retries can correct local errors, but once retries are exhausted, fresh abstractions help!

03.10.2025 19:33

4/N We evaluate RLAD on math reasoning benchmarks, including AIME 2025, DeepScaleR Hard, and AMC 2023, and see consistent accuracy gains over the base Qwen3-1.7B model and DAPO. We report performance without an abstraction (w/o), with an abstraction (w/), and with the best abstraction among 4 samples.
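The three settings in 4/N can be made concrete with a per-problem summary. This is a sketch under assumptions the post does not confirm. It assumes "w/" means accuracy averaged over the sampled abstractions, and "best" means the accuracy of the single best of the (e.g. 4) sampled abstractions. The `summarize` function and its input layout are hypothetical.

```python
from statistics import mean


def summarize(per_abstraction: list[list[bool]], question_only: list[bool]) -> dict[str, float]:
    """Hypothetical per-problem summary of the three evaluation settings.

    per_abstraction[i] holds the correctness of solutions sampled while
    conditioning on the i-th sampled abstraction; question_only holds the
    correctness of solutions sampled from the question alone.
    """
    # Accuracy of the solutions produced under each sampled abstraction.
    per_abs_acc = [mean([float(ok) for ok in sols]) for sols in per_abstraction]
    return {
        "w/o": mean([float(ok) for ok in question_only]),
        "w/": mean(per_abs_acc),     # assumption: average over sampled abstractions
        "best": max(per_abs_acc),    # assumption: best sampled abstraction (oracle pick)
    }


print(summarize([[True, False], [True, True], [False, False], [True, False]],
                [False, True, False, False]))
```

Benchmark-level numbers would then average each key across problems. The "best" setting is an upper bound, because it picks the abstraction after seeing which one worked.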

03.10.2025 19:33

3/N We instantiate a two-player RL framework:

1. An Abstraction Generator proposes reasoning strategies.

2. A Solution Generator uses that strategy to produce an answer.

The Abstraction Generator is rewarded with the average success rate of the solutions generated from its abstraction, which pushes it to find useful abstractions.
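The two-player loop in 3/N can be sketched as one rollout. This is a minimal sketch that assumes two things: the abstraction reward is the mean 0/1 correctness of `num_solutions` solutions sampled while conditioning on the abstraction, and each solution is rewarded with its own correctness. `propose`, `solve`, `verify`, `two_player_rollout`, and `num_solutions` are hypothetical stand-ins for LLM sampling and answer checking. They are not RLAD's actual code.

```python
from typing import Callable, List, Tuple


def two_player_rollout(
    question: str,
    propose: Callable[[str], str],        # hypothetical: question -> abstraction
    solve: Callable[[str, str], str],     # hypothetical: (question, abstraction) -> answer
    verify: Callable[[str, str], bool],   # hypothetical: (question, answer) -> correct?
    num_solutions: int = 4,
) -> Tuple[str, float, List[Tuple[str, float]]]:
    # Player 1: propose a reasoning strategy for this question.
    abstraction = propose(question)
    # Player 2: sample several solutions conditioned on that strategy.
    answers = [solve(question, abstraction) for _ in range(num_solutions)]
    # Assumption: each solution gets a binary correctness reward.
    solution_rewards = [1.0 if verify(question, a) else 0.0 for a in answers]
    # Abstraction reward = average success rate of the solutions it induced.
    abstraction_reward = sum(solution_rewards) / num_solutions
    return abstraction, abstraction_reward, list(zip(answers, solution_rewards))
```

In training, both rewards would feed a policy-gradient update for their respective models. The sketch stops at computing the rewards.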

03.10.2025 19:33

2/N Reasoning requires going beyond pattern-matching and recall to executing algorithmic procedures. RLVR aims to induce this, but models often underthink, switching logic midstream. Can we instead optimize for “breadth”, training models to explore a wider array of strategies?

03.10.2025 19:33

🚨🚨New Paper: Training LLMs to Discover Abstractions for Solving Reasoning Problems

Introducing RLAD, a two-player RL framework that trains LLMs to discover 'reasoning abstractions': natural-language hints that encode procedural knowledge to structure exploration during reasoning. 🧵⬇️

03.10.2025 19:33

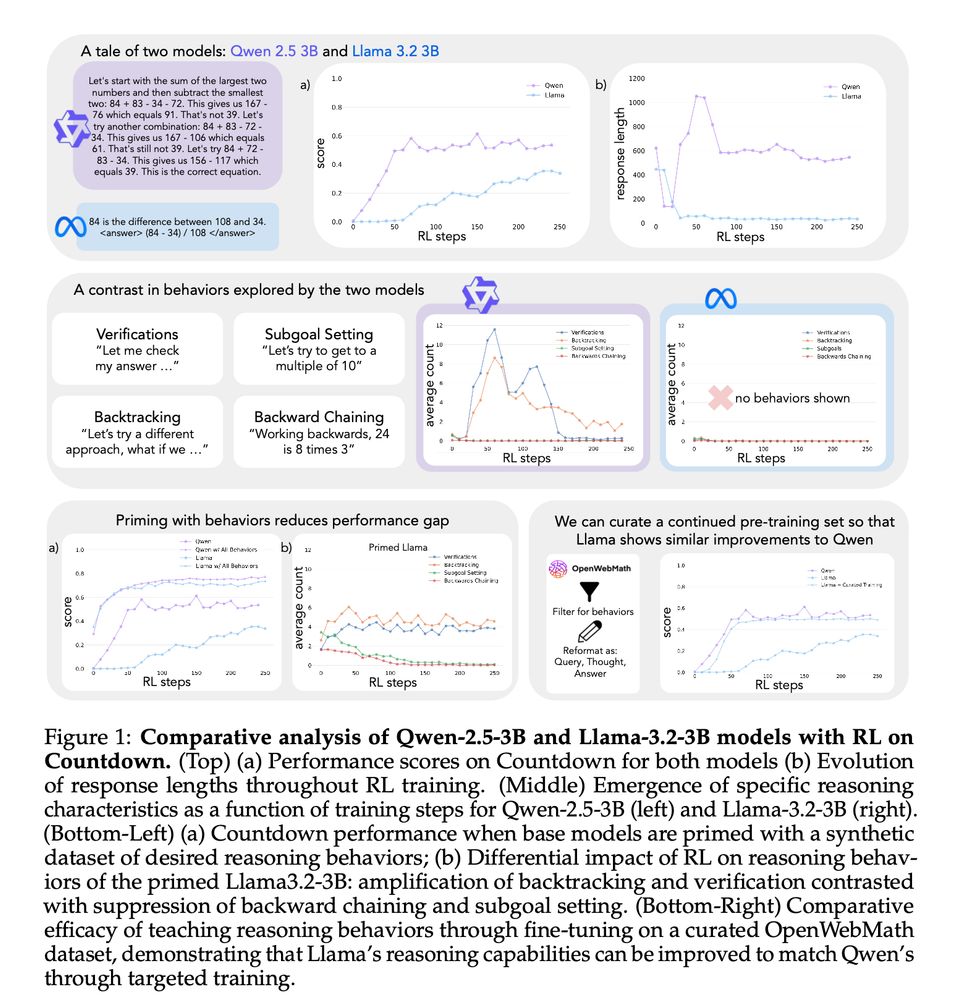

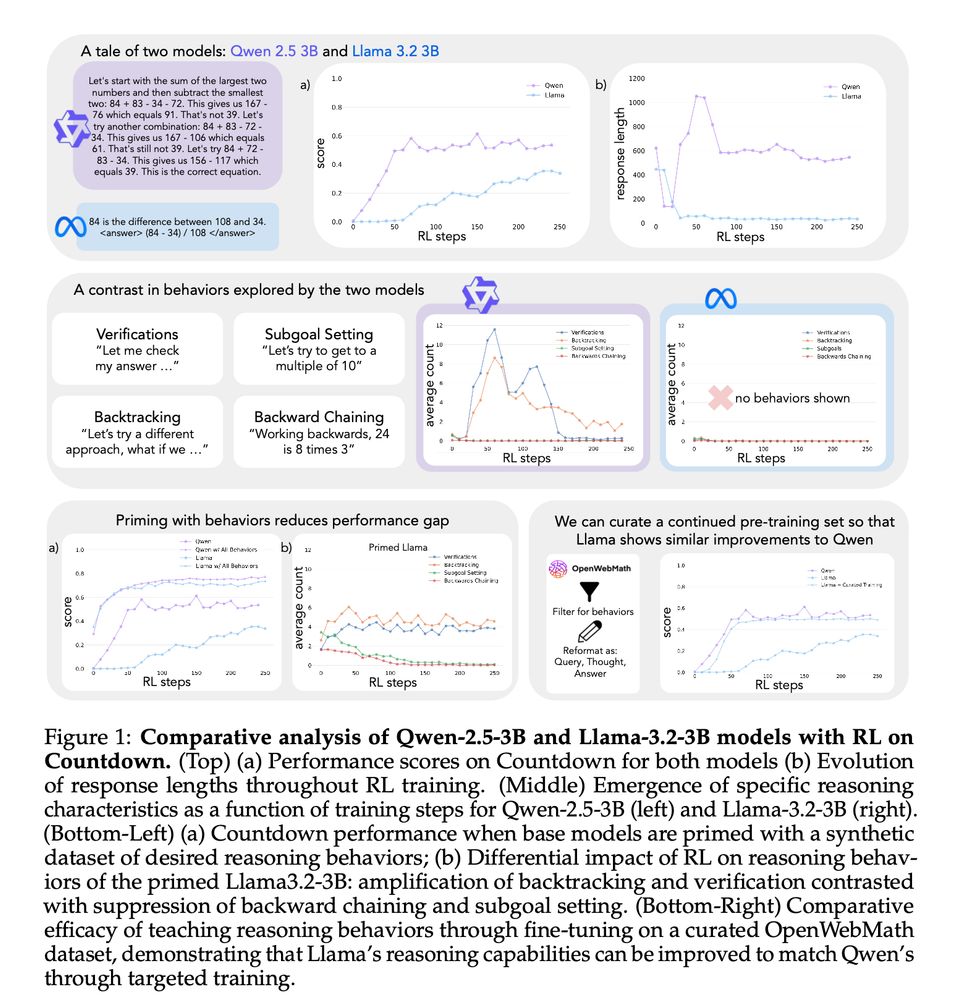

1/13 New Paper!! We try to understand why some LMs self-improve their reasoning while others hit a wall. The key? Cognitive behaviors! Read our paper on how the right cognitive behaviors can make all the difference in a model's ability to improve with RL! 🧵

04.03.2025 18:15

Could you add me too :)

22.11.2024 00:59

Stanford Health Policy: Interdisciplinary innovation, discovery and education to improve health policy here at home and around the world.

2nd year PhD at UCSD w/ @rajammanabrolu.bsky.social

Prev: @ltiatcmu.bsky.social @umich.edu

Research: Agents🤖, Reasoning🧠, Games👾

The official account of the Stanford Institute for Human-Centered AI, advancing AI research, education, policy, and practice to improve the human condition.

Assistant Professor @ Case Western Reserve Univ.

CS Ph.D. @TAMU

ahxt.github.io

CS PhD student @StanfordNLP

https://cs.stanford.edu/~shaoyj/

Assistant Professor at UCLA. Alum @StanfordNLP. NLP, Cognitive Science, Accessibility. https://www.coalas-lab.com/elisakreiss

Stanford Professor of Linguistics and, by courtesy, of Computer Science, and member of @stanfordnlp.bsky.social and The Stanford AI Lab. He/Him/His. https://web.stanford.edu/~cgpotts/

PhD @berkeley_ai; prev SR @GoogleDeepMind. I stare at my computer a lot and make things

PhD Student Stanford w/ Noah Goodman, studying reasoning, discovery, and interaction. Trying to build machines that understand people.

StanfordNLP, Stanford AI Lab

PhD student at Stanford NLP. Working on Social NLP and CSS. Previously at GaTech, Meta AI, Emory.

📍Palo Alto, CA

🔗 calebziems.com

Stanford Linguistics and Computer Science. Director, Stanford AI Lab. Founder of @stanfordnlp.bsky.social . #NLP https://nlp.stanford.edu/~manning/

Professor of Natural and Artificial Intelligence @Stanford. Safety and alignment @GoogleDeepMind.

PhD candidate @ Stanford NLP

https://myracheng.github.io/

asst prof @Stanford linguistics | director of social interaction lab 🌱 | bluskies about computational cognitive science & language

Assistant Professor of Computer Science @JohnsHopkins,

CS Postdoc @Stanford,

PHD @EPFL,

Computational Social Science, NLP, AI & Society

https://kristinagligoric.com/

member of technical staff @stanfordnlp.bsky.social

Modeling Linguistic Variation to expand ownership of NLP tools

Views my own, but affiliations that might influence them:

ML PhD Student under Prof. Diyi Yang

2x RS Intern🦙 Pretraining

Alum NYU Abu Dhabi

Burqueño

he/him

Cognitive science PhD student at Stanford, studying iterated learning and reasoning.