New paper with @herbps10.bsky.social!

25.09.2025 20:25

Alec McClean

@alecmcclean.bsky.social

Postdoc @ NYU Grossman; stats / ML + causal inference https://alecmcclean.github.io/


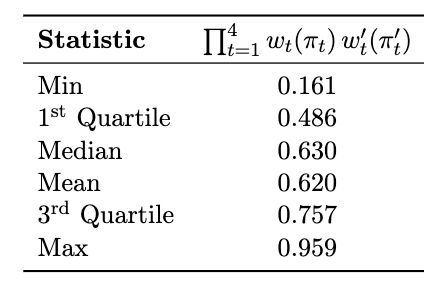

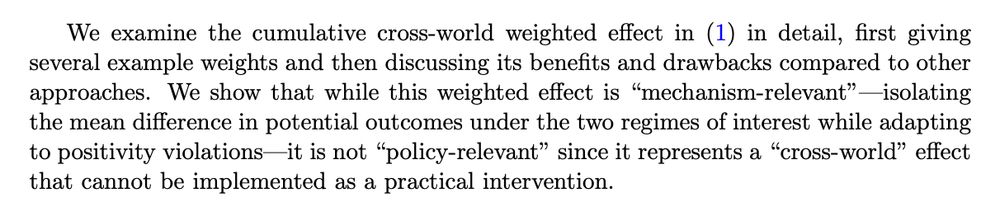

Distribution of cumulative weights (one per subject).

Although the estimator is complex, some nice properties arise from the construction: in particular, we can examine the distribution of cumulative weights across subjects, as in single-timepoint weighting.

16.07.2025 22:36

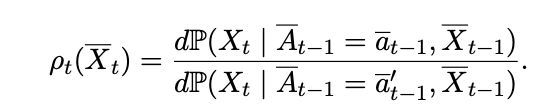

Covariate density ratio across two target regimes

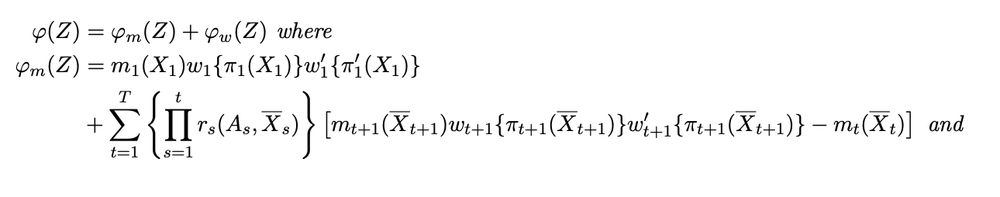

First half of EIF

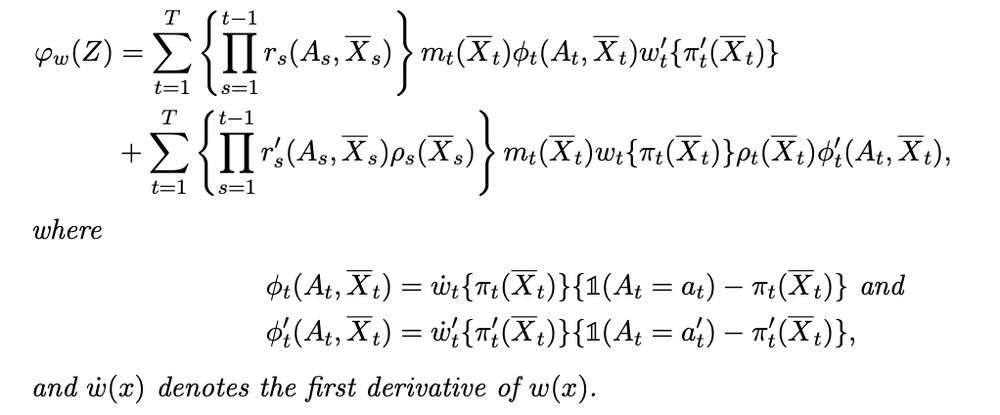

Second half of EIF, w/ additional novel term involving covariate density ratio across regimes

For ID, no positivity is needed. We just need the weights to behave well, which is possible by construction (e.g., overlap weights, trimming).

"Cross-world-ness" --> nuances in identification and estimation:

- ID: need strong seq. rand., but still possible w/out positivity

- Est: the new EIF for the doubly robust estimator involves an additional term w/ the covariate density ratio across the target regimes

These effects are:

- "Cross-world"

- "Mechanism-relevant" (they target mean diff in POs we care about)

- **Not** "policy-relevant" (they're not implementable)

This tradeoff arises elsewhere (mediation, censoring by death). Ours is another example:

What you want to know != what you can implement

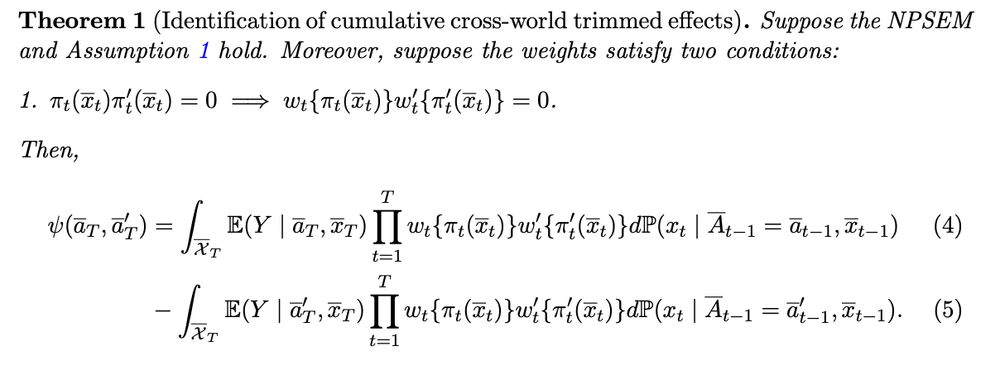

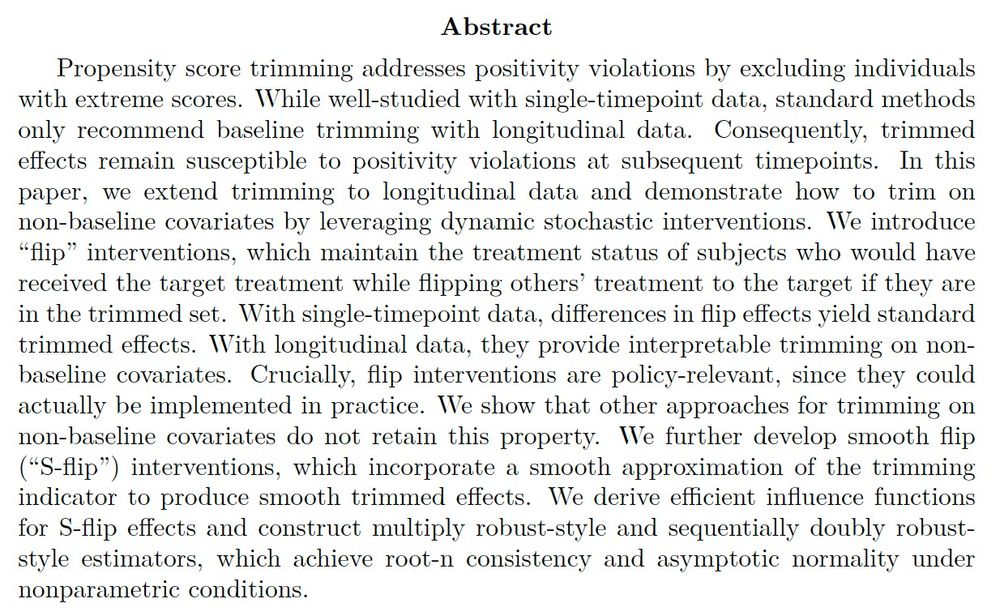

New paper 📜 We construct longitudinal effects tailored to isolate the mean diff between two POs while adapting to positivity violations under both regimes.

Some notes vv

We show that contrasts of flip effects yield WATEs when t=1, and weighting on non-baseline covariates when t>1
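For intuition about the t=1 case: a weighted ATE (WATE) averages the conditional effect mu1(X) - mu0(X) with weights w(X), i.e. E[w(X){mu1(X) - mu0(X)}] / E[w(X)]. Below is a minimal plug-in sketch, assuming known outcome regressions and using the overlap weight w = pi(1 - pi) as one example weight. The propensity score, regressions, and simulated data are hypothetical, and this is not the paper's doubly robust estimator; it only shows what a WATE averages.

```python
import numpy as np

def wate_plugin(x, mu1, mu0, weight):
    """Plug-in weighted ATE: sum_i w(x_i) * tau(x_i) / sum_i w(x_i)."""
    w = weight(x)
    tau = mu1(x) - mu0(x)  # conditional effect mu1(x) - mu0(x)
    return float(np.sum(w * tau) / np.sum(w))

def propensity(x):
    # Hypothetical propensity score; near 0 or 1 in the tails of x
    return 1.0 / (1.0 + np.exp(-3.0 * x))

def overlap_weight(x):
    # Overlap weight w(x) = pi(x) * (1 - pi(x)): small where positivity is weak
    p = propensity(x)
    return p * (1.0 - p)

def mu1(x):
    return 1.0 + x            # hypothetical E[Y(1) | X = x]

def mu0(x):
    return np.zeros_like(x)   # hypothetical E[Y(0) | X = x]

rng = np.random.default_rng(0)
x = rng.normal(size=10_000)
print(wate_plugin(x, mu1, mu0, overlap_weight))
```

With a constant effect, any weight function returns that constant; the choice of weight only matters when effects vary with X.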

We also give some new doubly robust estimation results:

1. typical multiply robust estimator is twice as robust as people had thought

2. new sequentially doubly robust style estimator

Flip ints are built from a target tx and a weight (eg overlap wt, trimming indicator):

1. If subject would take target tx, do nothing

2. O/w flip subject to target with prob equal to the weight

Allows you to target any regime (eg, always treated) while adjusting to pos violations as needed
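The two-step flip rule can be sketched for a single timepoint as below. This is a minimal illustration, assuming a binary treatment, a per-subject weight in [0, 1] (e.g. an overlap weight or a trimming indicator), and a hypothetical function name `flip_intervention`; it is not code from the paper. In longitudinal data the rule would be applied at each timepoint, where the natural treatment value itself depends on the history under the intervention, which this sketch does not model.

```python
import numpy as np

def flip_intervention(a_natural, target, weight, rng):
    """Hypothetical sketch of a flip intervention at one timepoint.

    a_natural: natural (observed) binary treatment, one entry per subject
    target:    target treatment value, e.g. 1 for "always treated"
    weight:    per-subject flip probabilities in [0, 1]
    """
    a_natural = np.asarray(a_natural)
    weight = np.asarray(weight, dtype=float)
    # Step 1: subjects who would take the target treatment are left alone
    on_target = a_natural == target
    # Step 2: everyone else is flipped to the target with probability = weight
    flip = rng.random(a_natural.shape) < weight
    return np.where(on_target | flip, target, a_natural)

rng = np.random.default_rng(1)
a_nat = np.array([1, 0, 0, 1, 0])
w = np.array([0.3, 1.0, 0.0, 0.5, 0.7])  # e.g. overlap weights or 0/1 trimming indicators
print(flip_intervention(a_nat, target=1, weight=w, rng=rng))
```

A weight of 1 always flips and a weight of 0 never flips, so a 0/1 trimming indicator gives a deterministic rule, while fractional weights give a stochastic intervention.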

New paper! Weighting is great for addressing positivity violations, but it's unclear how to do it in longitudinal data. We propose a solution: "flip" interventions. These allow for weighting on non-baseline covariates and give effects robust to arbitrary positivity violations.

Highlights below vv

Excited to present this again at ACIC (Th 1:15pm)!

We realized trimming is a special case of weighting, so we generalized the analysis to longitudinal weighted effects
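Viewed as functions of the propensity score pi(x), a trimming indicator is simply a weight restricted to {0, 1}, which is why an analysis of trimming extends naturally to general weights. A minimal sketch comparing three weight functions; the cutoff `eps = 0.05` and the logistic form of the hypothetical `smooth_trimming_weight` (with its `scale`) are illustrative assumptions, not the definitions used in the paper.

```python
import numpy as np

def trimming_weight(pi, eps=0.05):
    # Trimming indicator: weight 1 if eps <= pi <= 1 - eps, else 0
    return ((pi >= eps) & (pi <= 1 - eps)).astype(float)

def overlap_weight(pi):
    # Overlap weight: shrinks smoothly toward 0 as pi approaches 0 or 1
    return pi * (1 - pi)

def smooth_trimming_weight(pi, eps=0.05, scale=0.01):
    # Hypothetical smooth trimming: product of two logistic ramps centred at
    # eps and 1 - eps; away from the cutoffs it approaches the trimming
    # indicator as scale -> 0
    lower = 1 / (1 + np.exp(-(pi - eps) / scale))
    upper = 1 / (1 + np.exp(-((1 - eps) - pi) / scale))
    return lower * upper

pi = np.array([0.01, 0.2, 0.5, 0.99])
for f in (trimming_weight, overlap_weight, smooth_trimming_weight):
    print(f.__name__, np.round(f(pi), 3))
```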

“Longitudinal weighted and trimmed treatment effects with flip interventions”

Draft:

alecmcclean.github.io/files/long-w...

Excited to present on Thursday @eurocim.bsky.social on new work with @idiaz.bsky.social on (smooth) trimming with longitudinal data!

"Longitudinal trimming and smooth trimming with flip and S-flip interventions"

Prelim draft: alecmcclean.github.io/files/LSTTEs...


Bridging Root-$n$ and Non-standard Asymptotics: Dimension-agnostic Adaptive Inference in M-Estimation (Takatsu, Kuchibhotla) This manuscript studies a general approach to construct confidence sets for the solution of population-level optimization, commonly referred to as M-estimation. Sta

📢📢The 4th Lifetime Data Science Conference will take place May 28–30, 2025, at New York Marriott at the Brooklyn Bridge in Brooklyn, NY, USA. This event will feature keynotes by Drs. Nicholas Jewell and Mei-Ling Lee, short courses, 60+ invited sessions, and a banquet on May 29. Register and join us!

15.01.2025 14:37

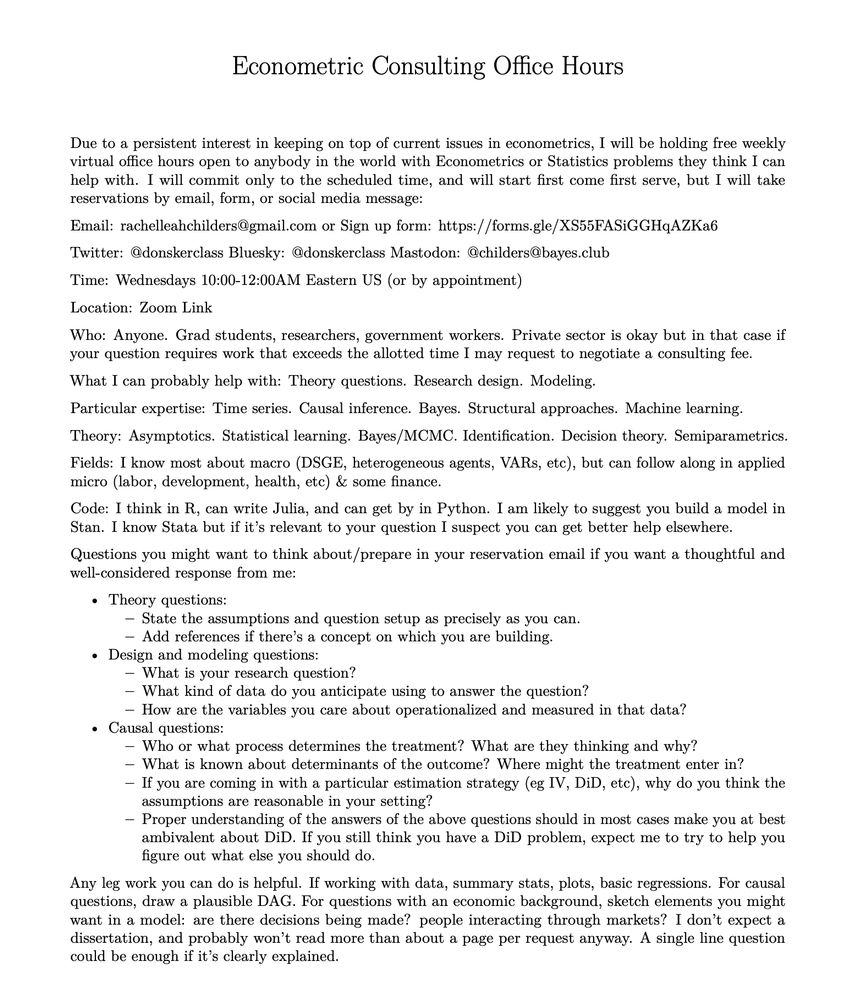

Free Weekly Econometrics Office Hours

Email: rachelleahchilders@gmail.com or sign-up form: https://forms.gle/XS55FASiGGHqAZKa6

Time: Wednesdays 10:00 AM-12:00 PM Eastern US (or by appointment)

Location: Zoom link https://bowdoin.zoom.us/j/96039587180

Who: Anyone. Grad students, researchers, government workers. Private sector is okay, but in that case, if your question requires work that exceeds the allotted time, I may request to negotiate a consulting fee.

What I can probably help with: Theory questions. Research design. Modeling.

Particular expertise: Time series. Causal inference. Bayes. Structural approaches. Machine learning.

Theory: Asymptotics. Statistical learning. Bayes/MCMC. Identification. Decision theory. Semiparametrics.

Fields: I know most about macro (DSGE, heterogeneous agents, VARs, etc.), but can follow along in applied micro (labor, development, health, etc.) and some finance.

Code: I think in R, can write Julia, and can get by in Python. I am likely to suggest you build a model in Stan. I know Stata, but if it's relevant to your question I suspect you can get better help elsewhere.

For the Spring semester, I am restarting my free weekly open office hours for anyone in the world with Econometrics questions. Wednesdays 10 AM-12 PM Eastern or by appointment; sign up and drop by!

Details and sign up at donskerclass.github.io/OfficeHours....

Rebecca Farina, Arun Kumar Kuchibhotla, Eric J. Tchetgen Tchetgen

Doubly Robust and Efficient Calibration of Prediction Sets for Censored Time-to-Event Outcomes

https://arxiv.org/abs/2501.04615

For IV folks: what's a good resource on time-varying 2SLS?

Data = time-varying {covariates, instruments, outcomes}

Asmp: a version of longitudinal 2SLS; ie linear SEM in 1st & 2nd stages, over time

Time-varying data seems to introduce some nuance. Is there a textbook treatment of this?

My traditional end-of-year review: some papers I read and liked in 2024.

donskerclass.github.io/post/papers-...

@idiaz.bsky.social et al. 2020

arxiv.org/pdf/2006.01366

Generalizes to a large class of ints. Also gives a great review of other innovations from the 2010s

Bonus: for identification, it uses an NPSEM -- an alternative to SWIGs. NPSEMs come from do-why lit; great for discussing asmps w/ practitioners

2) Young et al. 2014 pmc.ncbi.nlm.nih.gov/articles/PMC...

Ints that depend on the natural value of trtment. Very easy to read! Appendix B is great on ID.

Further reading: the SWIG papers; primer first (stats.ox.ac.uk/~evans/uai13/Richardson.pdf), and original (R&R '13) when you're feeling brave!

1) Robins et al. 2004 www.jstor.org/stable/pdf/r...

Addresses or foreshadows lots of subsequent work on time-varying data. The data analysis in Section 6 helped me build intuition for earlier parts of the paper.

Related to lit review in an ongoing project: for complex time-varying ints and identification in epi/bio, I think these three papers are great starting points:

www.jstor.org/stable/pdf/r...

pmc.ncbi.nlm.nih.gov/articles/PMC...

arxiv.org/pdf/2006.01366

Details below. What are others' favorites?

What's the best paper you read this year?

27.12.2024 17:02

Not a 2024 paper, but new to me in 2024: www.sciencedirect.com/science/arti...

Cool results about estimation with extreme prop scores; limiting dists and inference even w/out CLT. Nicely resolved some q's I was thinking abt, before I spent too much time thinking about them (the perfect situation!)

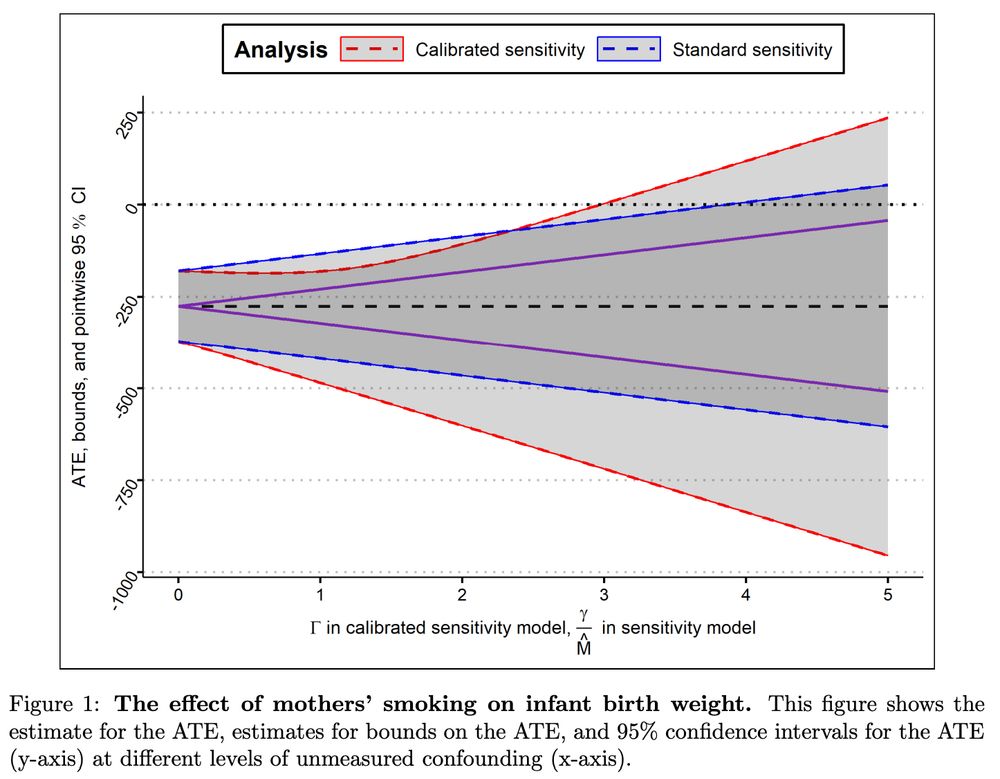

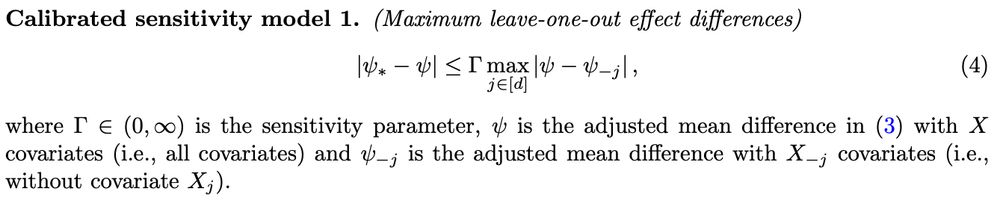

We analyze the effect of mothers’ smoking on infant birthweight, and see that accounting for uncertainty in estimating M alters CIs for ATE.

This was fun work with Edward and Zach Branson (sites.google.com/site/zjbrans...) and was a great project to finish my PhD!

9/9

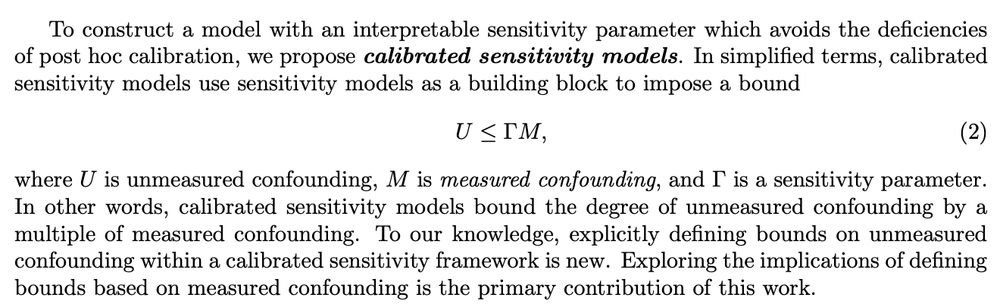

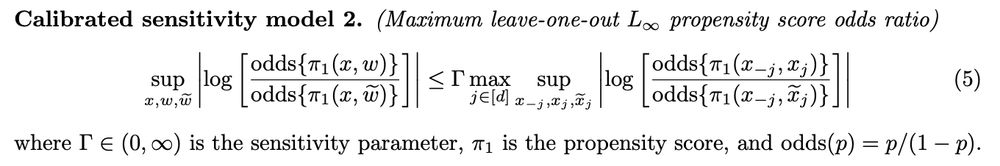

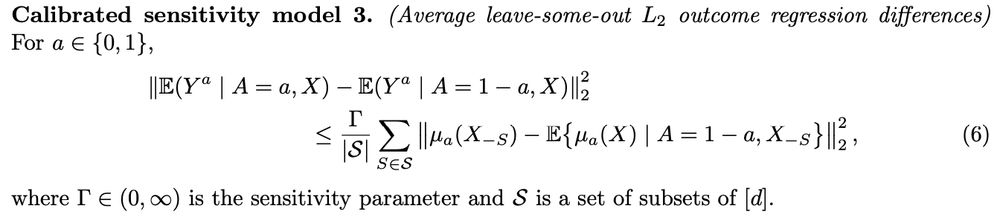

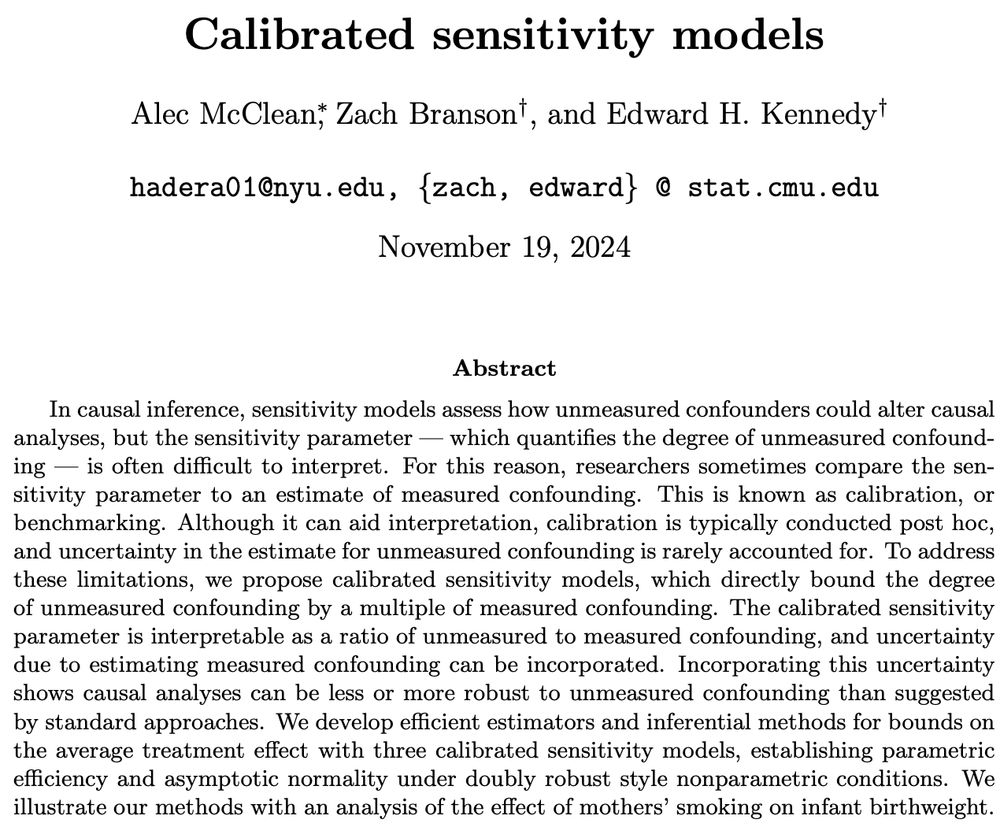

We incorporate M into our “calibrated” sensitivity models. Generically:

U ≤ G · M

where G is a sensitivity parameter.

We outline many choices for U and M and develop three specific models. Then identify bounds on ATE and give estimators that account for uncertainty in estimating M.

8/9

Often calibration is somewhat informal, w/out accounting for uncertainty in M. However, statistical error in estimating M is first order and can alter confidence intervals! Indeed, if calibration is the goal, it's more intuitive to put M directly in the model.

We explore ramifications of this reframing!

7/9

#2 Calibrated sensitivity models arxiv.org/abs/2405.08738

Sensitivity analyses look at how unmeasured confounders (U) alter causal effect estimates (when, eg, trtment is not random). To understand U, we can calibrate by estimating analogous *measured* conf. (M) by leaving out variables from the data

6/9

This was with @edwardhkennedy.bsky.social, Siva Balakrishnan, and Larry Wasserman. It was an amazing learning experience for me!

Also, arxiv.org/abs/2212.14857 is another great paper on this topic. Comprehensive analysis under Hölder smoothness assumptions, with many cool results!

5/9

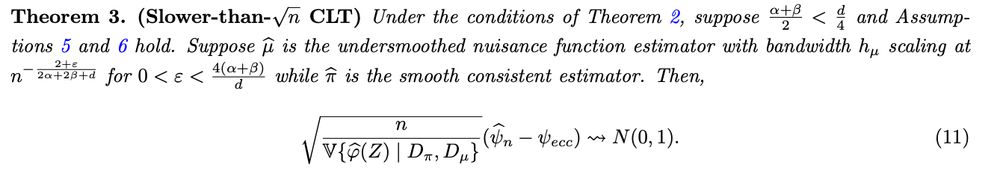

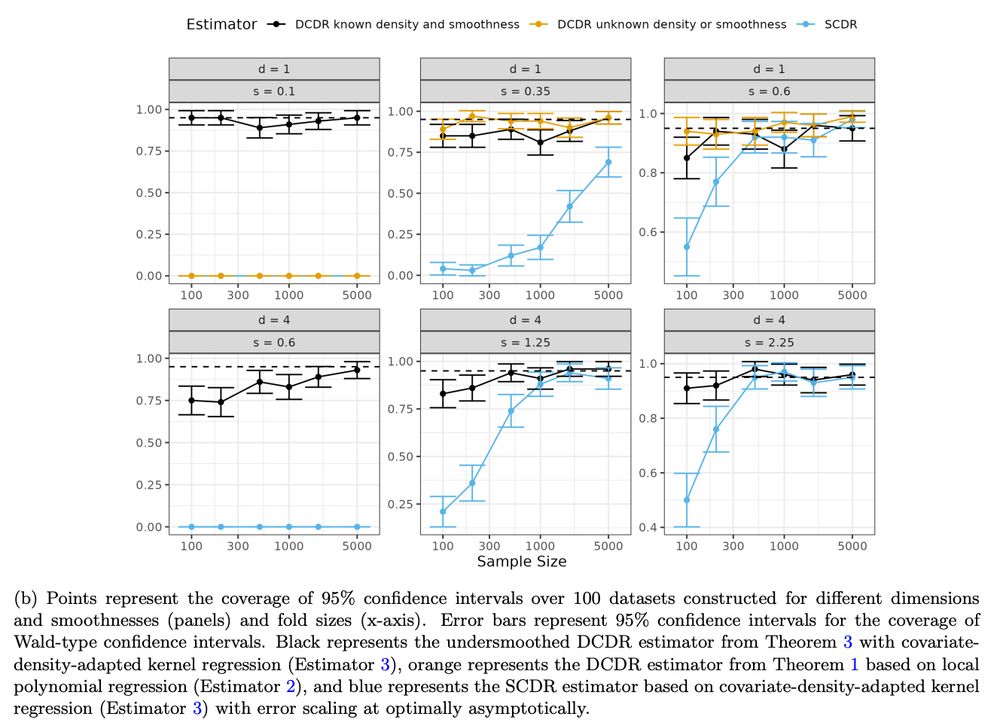

3. A slower-than-root-n CLT w/ non-smooth nfs (some “fun” technical results deep in appendix :D)

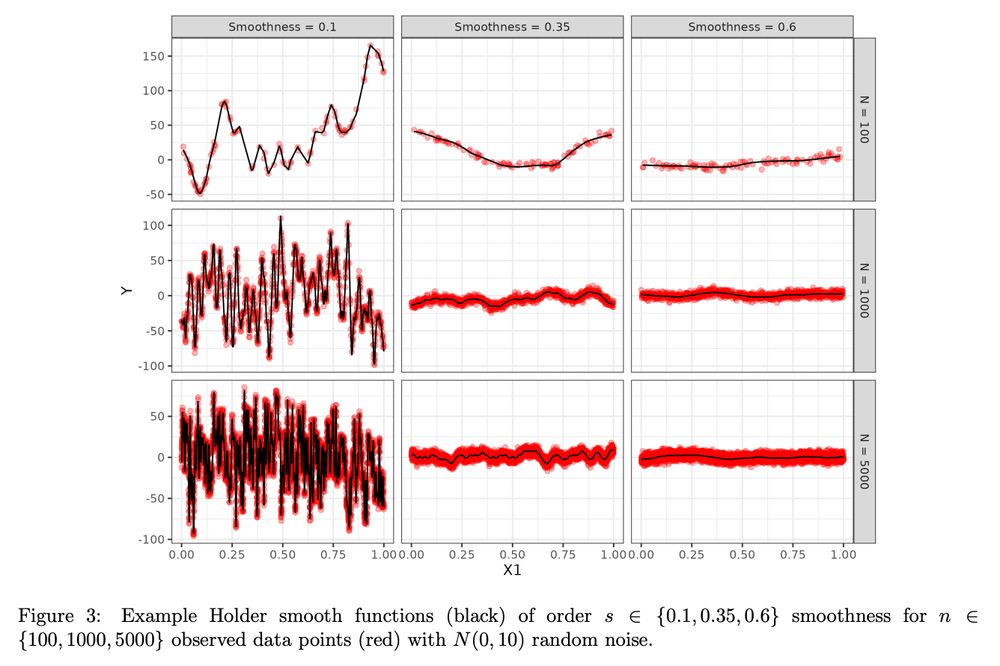

4. Simulations illustrating 1-3. Manufacturing Hölder-smooth fns was an interesting challenge!

4/9

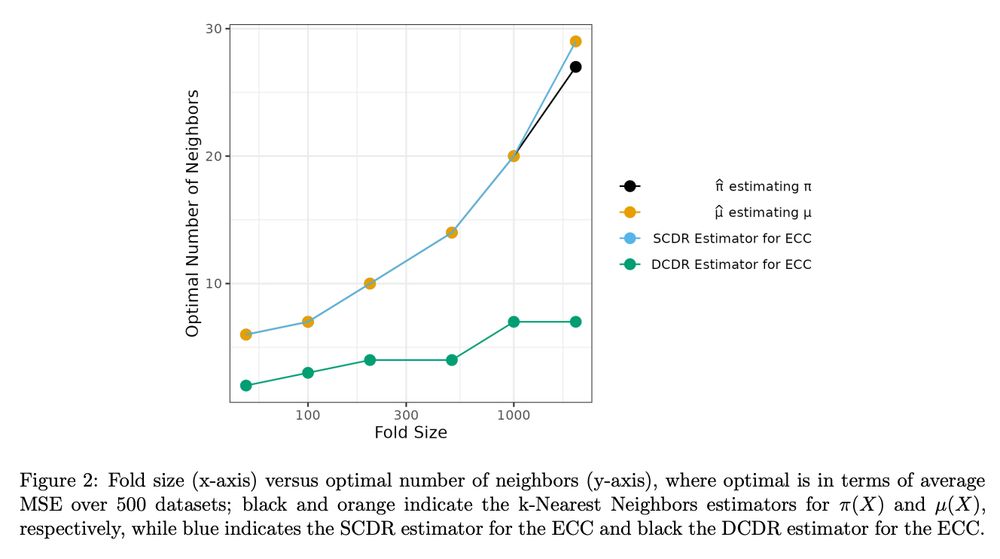

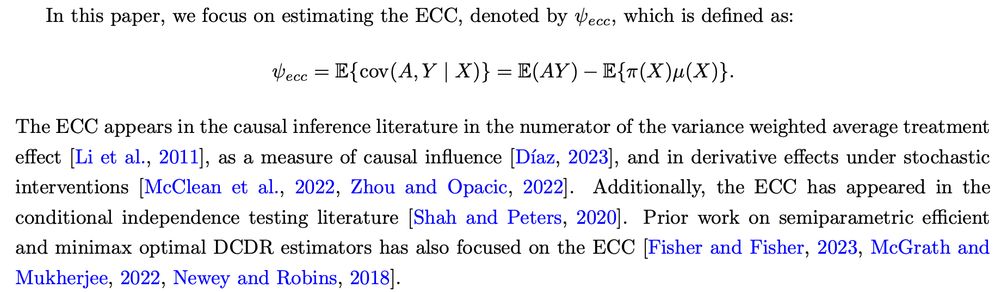

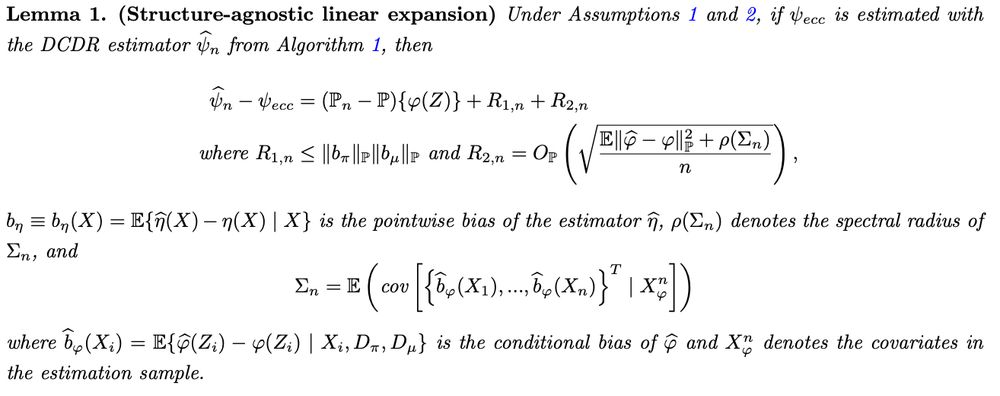

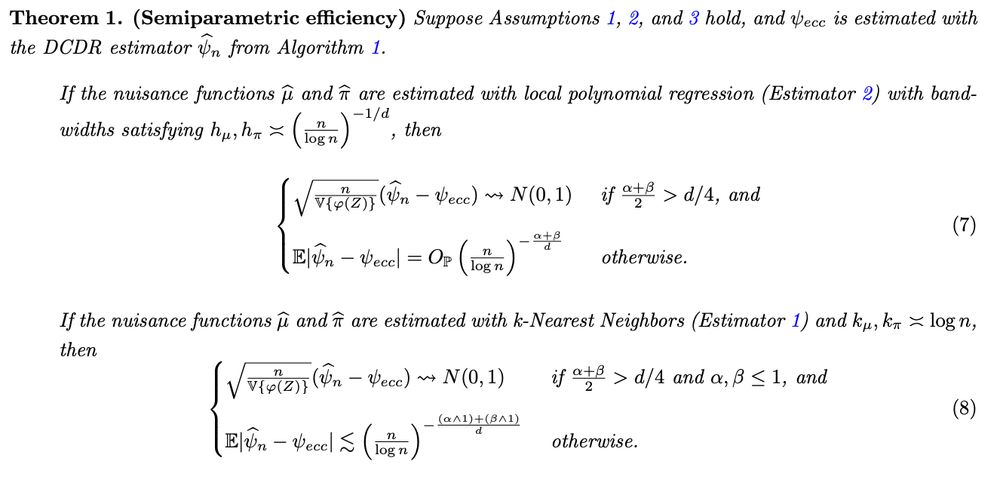

We focus on estimating expected cond. cov. Four main contributions:

1. Structure-agnostic linear expansion for DCDR est. Nuis func est. bias more important than var.

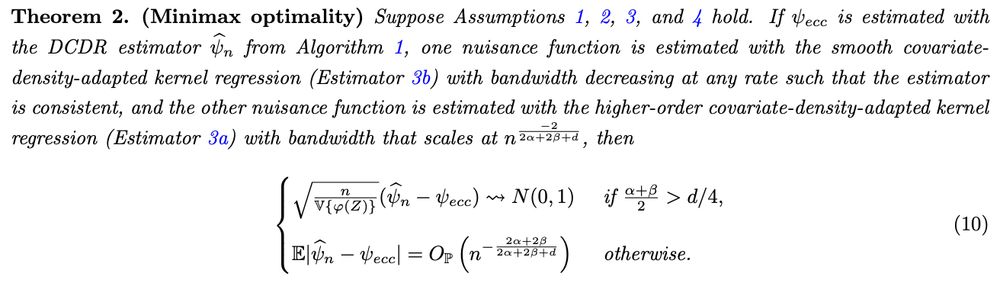

2. Rates with local lin smoothers for nfs under Hölder smoothness. Semiparametric efficiency and minimax optimality possible

3/9

#1 arxiv.org/abs/2403.15175

The DCDR estimator is quite new (2018, arxiv.org/abs/1801.09138). It splits training data and trains nuisance fns on independent folds

It can get faster conv rates than the usual DR estimator, which trains nuis funcs on the same sample.
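A minimal sketch of the double cross-fitting idea for the expected conditional covariance psi = E[cov(A, Y | X)] = E[(A - pi(X)) * (Y - mu(X))], with pi(X) = E[A | X] and mu(X) = E[Y | X]: pi-hat and mu-hat are trained on two different folds, the product of residuals is averaged on a third, and the roles rotate. Cubic polynomial fits via `numpy.polyfit` stand in for the local linear smoothers analyzed in the paper; the helper names and simulated data are hypothetical.

```python
import numpy as np

def fit_poly(x, y, deg=3):
    # Simple nuisance regression (cubic polynomial); a stand-in for local linear smoothers
    coef = np.polyfit(x, y, deg)
    return lambda x_new: np.polyval(coef, x_new)

def dcdr_ecc(x, a, y, n_folds=3, seed=0):
    """Doubly cross-fit estimate of E[cov(A, Y | X)] = E[(A - pi(X)) * (Y - mu(X))]."""
    rng = np.random.default_rng(seed)
    folds = rng.permutation(len(x)) % n_folds  # random fold label per observation
    estimates = []
    for k in range(n_folds):
        # Two other, distinct folds (requires n_folds >= 3)
        j_pi, j_mu = (k + 1) % n_folds, (k + 2) % n_folds
        pi_hat = fit_poly(x[folds == j_pi], a[folds == j_pi])  # E[A | X] on one fold
        mu_hat = fit_poly(x[folds == j_mu], y[folds == j_mu])  # E[Y | X] on another
        ev = folds == k  # evaluation fold
        estimates.append(np.mean((a[ev] - pi_hat(x[ev])) * (y[ev] - mu_hat(x[ev]))))
    return float(np.mean(estimates))  # average over rotations

rng = np.random.default_rng(42)
n = 30_000
x = rng.uniform(-1, 1, n)
a = x**2 + rng.normal(size=n)         # E[A | X] = X^2, Var(A | X) = 1
y = 0.5 * a + x + rng.normal(size=n)  # so cov(A, Y | X) = 0.5
print(dcdr_ecc(x, a, y))
```

Because pi-hat and mu-hat come from different folds, their estimation errors are independent of each other; the usual DR estimator would fit both on the same fold.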

We analyze the DCDR est. in detail!

2/9