Announcing a new special guest edition in Digital Health! Everything on patient-reported outcomes in mHealth, including algorithmic treatment and measurement allocation, N-of-1 trials, preference learning & handling subjective measurements. 📲

Take a look!

journals.sagepub.com/topic/collec...

10.10.2025 14:06

Automated Visualization Makeovers with LLMs

Making a good graphic that accurately and efficiently conveys the desired message to the audience is both an art and a science, typically not taught in the data science curriculum. Visualisation makeo...

📊📉📈 Better data visualizations with AI: can LLMs provide constructive critiques on existing charts? We explore how generative AI can automate #MakeoverMonday -type exercises, suggesting improvements to existing charts.

📄 New preprint + benchmark dataset 💽

arxiv.org/abs/2508.05637

19.08.2025 06:17

GitHub - Selbosh/aaai2026-quarto: Unofficial Quarto template for the AAAI-2026 Conference

Unofficial Quarto template for the AAAI-2026 Conference - Selbosh/aaai2026-quarto

New: unofficial @quarto.org template for the upcoming @realaaai.bsky.social 2026 conference. Write your submission in Markdown with reproducible, inline computations!

github.com/Selbosh/aaai...

29.07.2025 14:54

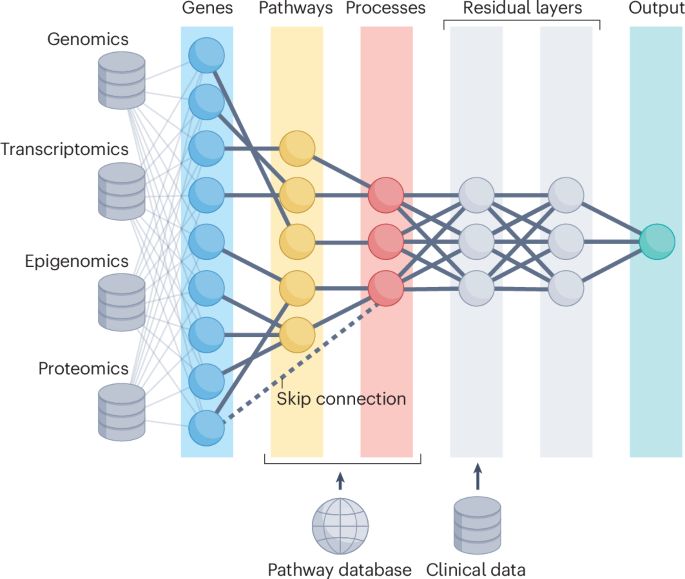

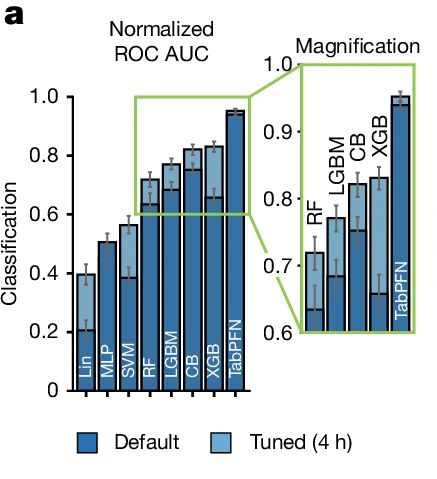

Frontiers | Visible neural networks for multi-omics integration: a critical review

Background: Biomarker discovery and drug response prediction are central to personalized medicine, driving demand for predictive models that also offer biologi...

What is a "Visible Neural Network"? It's a new kind of deep learning model for multi-omics, where prior knowledge and interpretability are baked into the architecture.

📄 We reviewed dozens of models, datasets & applications, and call for better tools/benchmarks:

www.frontiersin.org/journals/art...

21.07.2025 12:36

Health Research From Home Hackathon 2025

7-9 May 2025

This hackathon is being held by Health Research From Home Partnership led by the @OfficialUoM. Register your interest now: health-research-from-home.github.io/DataAnalysis...

06.03.2025 21:47

New blog post: Alternatives to @overleaf.com for #rstats, reproducible writing and collaboration

selbydavid.com/2025/03/04/o...

06.03.2025 18:55

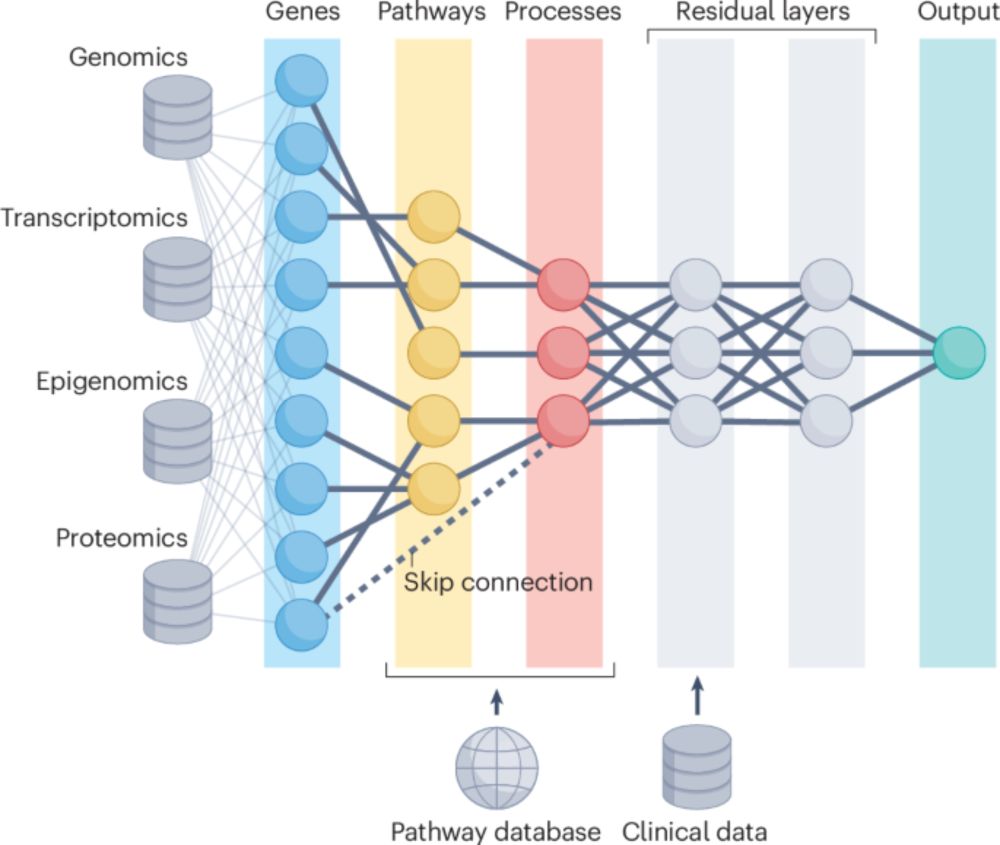

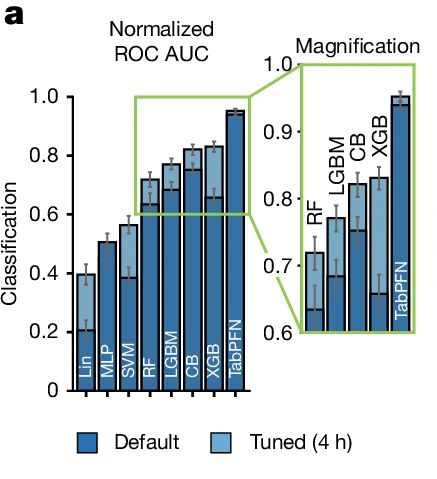

Plot showing stacked bar plots with error bars to visualize normalized ROC AUC of different machine learning models, before and after fine-tuning for four hours. The main insight is that TabPFN, a tabular foundation model, outperforms tree-based methods such as random forests and XGBoost.

How might one redesign this data visualization to avoid the much-maligned 'plunger plots'?

#visualisation

From www.nature.com/articles/s41...

10.01.2025 06:42

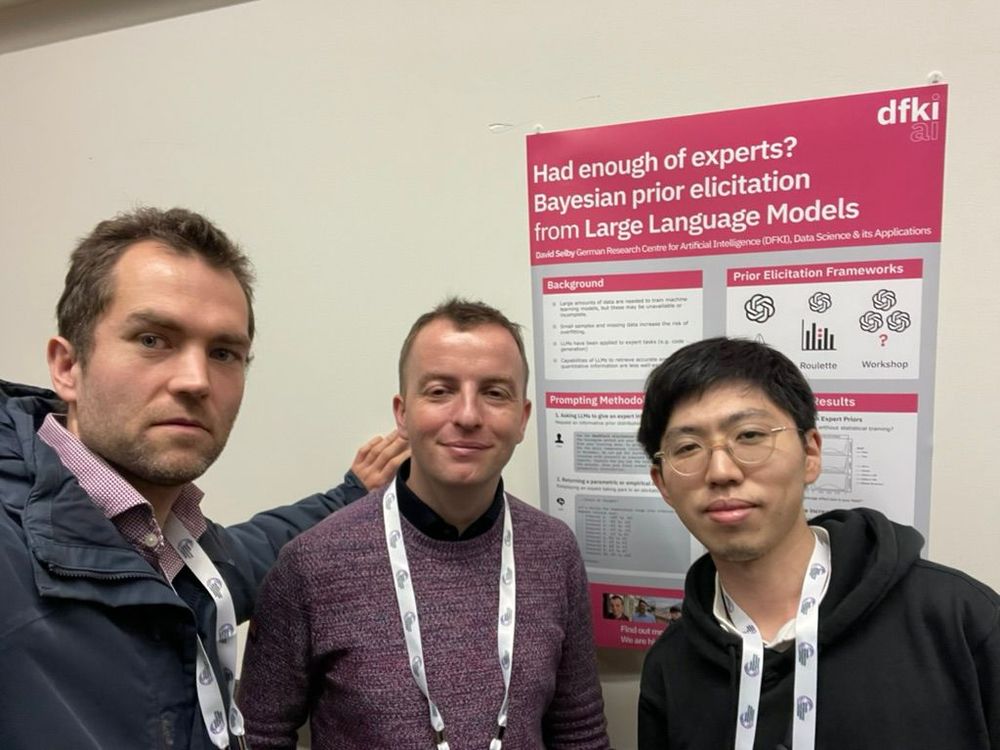

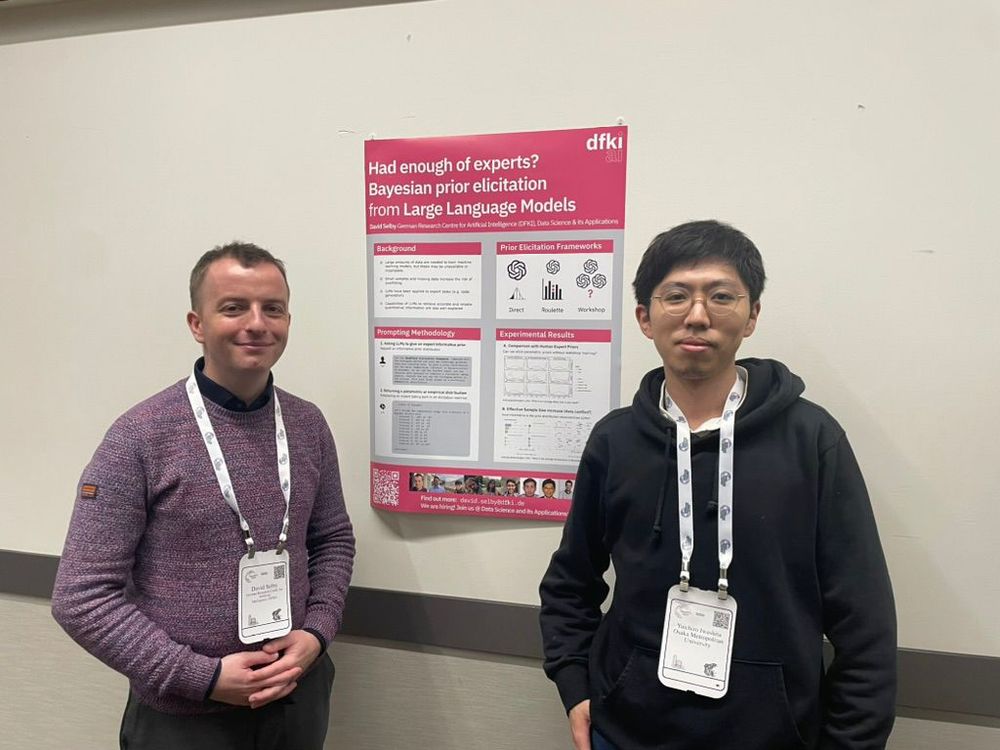

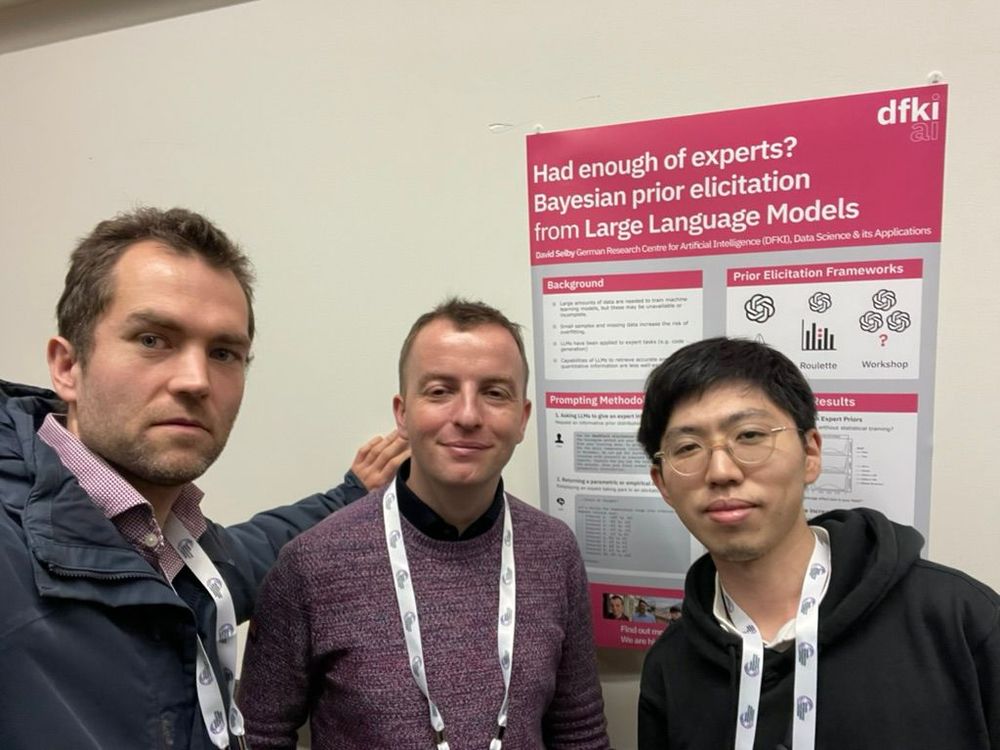

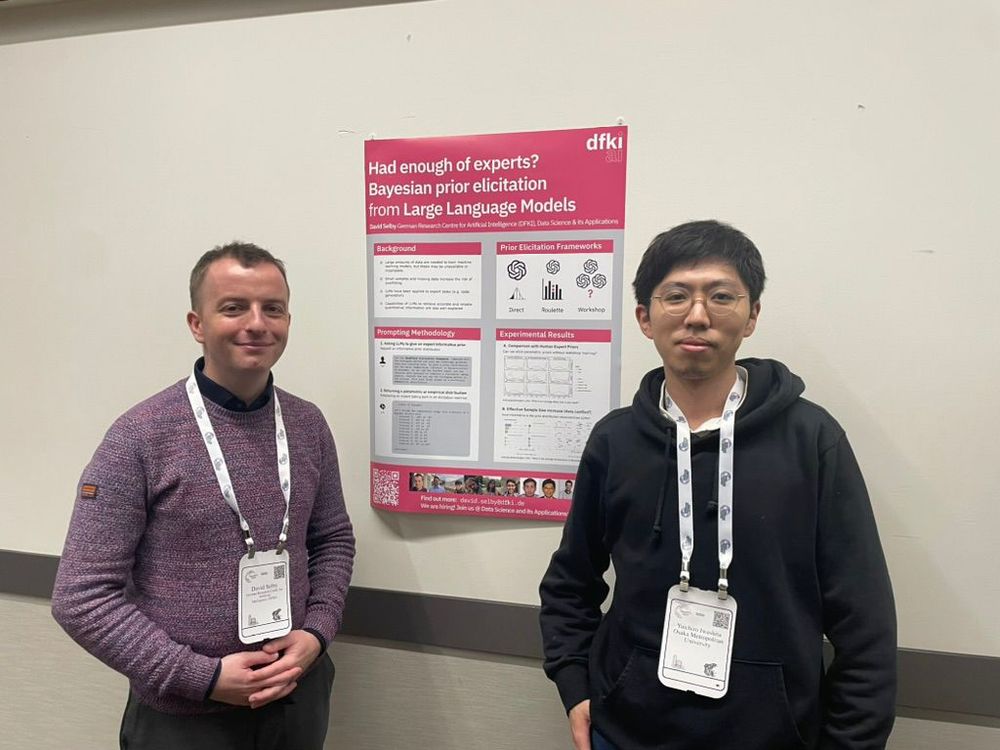

Sebastian Vollmer, David Selby and Yuichiro Iwashita present their poster, "Had enough of experts? Bayesian prior elicitation from Large Language Models" at the NeurIPS Bayesian Decision-making and Uncertainty Workshop 2024 in Vancouver, Canada.

Pleased to present our poster at the #NeurIPS2024 workshop on Bayesian Decision-making and Uncertainty! 🎉 Our work explores using large language models to elicit expert-informed Bayesian priors. Elicited lots of discussion with the ML community too! Check it out: neurips.cc/virtual/2024...

20.12.2024 12:43

Visible neural networks for multi-omics integration: a critical review

Biomarker discovery and drug response prediction are central to personalized medicine, driving demand for predictive models that also offer biological insights. Biologically informed neural networks (B...

Excited to share our new preprint: Visible neural networks for multi-omics integration: a critical review! 🌟 We systematically analyse 86 studies on biologically informed neural networks (BINNs/VNNs), highlighting trends, challenges, interesting ideas & opportunities. www.biorxiv.org/content/10.1...

20.12.2024 12:20

Manchester's quality newspaper. Sign up now - we only publish via email.

Send tips to our reporters ophiraophira.bsky.social and Jack Dulhanty

Book your Good Night Train to: 📍Brussels📍Antwerp📍Rotterdam 📍Amsterdam 📍Berlin 📍Dresden 📍Prague🚆

🚞 europeansleeper.eu

Writer of the new TOXIC AVENGER and TOXIC CRUSADERS comics and co-writer of JUSTICE WARRIORS. Founded The Nib (RIP). Comics editor at In These Times magazine.

https://mattbors.substack.com/

We are the Young Statisticians Section of the Royal Statistical Society👶🔢. The views expressed are our own and do not necessarily represent those of the RSS.

Publishing reviews and commentaries across the fields of genetics and genomics. Part of Springer Nature and Nature Portfolio.

https://www.nature.com/nrg/

Assistant Prof @ImperialCollege. Applied Bayesian inference, spatial stats and deep generative models for epidemiology. Passionate about probabilistic programming: check out my evolving #Numpyro course: https://elizavetasemenova.github.io/prob-epi 🚀

Interested in all things visual and data, like data visualization. ISOTYPE collector, synth dabbler, runner.

Playing with data, visualizing it for humans at flowingdata.com

ASA Fellow; #rstats developer of graphical methods for categorical and multivariate data; #datavis history of data visualization; #historicaldatavis; Milestones project

Web: www.datavis.ca

GitHub: github.com/friendly

Associate professor of statistics and data science at the University of St Thomas. Into data visualization and reproducible research, obsessed with R. pronoun.is/she

website: amelia.mn

mastodon: @vis.social/@amelia

Co-host, The War on Cars podcast. Writer, runner, Disney parks fan. Born at 330.21 ppm. (he/him)

Pre-order "Life After Cars," coming in October from Thesis, an imprint of Penguin Random House.

https://www.lifeaftercars.com

A podcast about the fight against car culture. Hosted by Sarah Goodyear & Doug Gordon.

Pre-order our new book, "Life After Cars," coming Oct 2025 from Thesis / Penguin Random House.

https://www.lifeaftercars.com/

https://www.patreon.com/thewaroncarspod

the once and future city planner // senior legislative director for california YIMBY // AICP // proud kentuckian // #BBN // buy my book ❤

I run a newsletter/podcast called Volts about clean energy & politics. Subscribe & join the community at http://volts.wtf!

Biking news up Seattle’s hills since 2010.

https://www.seattlebikeblog.com/

#SEAbikes #Seattle

"Fietser" (Dutch) 'feet-ser' Meaning: A Person Who Bikes For Transportation; Infrastructure, City Design, E-Cargo Bike Ambassador, Brasil 🇧🇷🔗, Train & Aviation Enjoyer

The only website devoted to covering all the livable streets and public space news you need. Visit us at http://nyc.streetsblog.org. Retweets/posts are NOT endorsements.

Mayor of Paris, host city of the 2024 Olympic and Paralympic Games

All about cycling in the Netherlands

Cycling ambassador with the Dutch Cycling Embassy | Mark Wagenbuur

I like trains and walkable neighborhoods.

https://linktr.ee/thetransitguy