Program - MAIN educational 2025

Website of the educational workshop organized during the Montreal Artificial Intelligence and Neuroscience Conference 2025

Attending the Montreal AI and Neuroscience (MAIN) Conference this week? #MontAIN2025

We have put together some exciting educational workshops on cognitive benchmarking of large models, RL and video games, and dynamical systems! More info and registration here: main-educational.github.io/program/

09.12.2025 15:01 —

👍 10

🔁 1

💬 1

📌 0

Registration closes tonight!

07.12.2025 22:21 —

👍 1

🔁 0

💬 0

📌 0

MAIN educational 2025

Interested in getting hands-on with methods at the intersection of AI and neuroscience? Register for the Educational Workshop of the Montreal AI and Neuroscience (MAIN) Conference 2025! main-educational.github.io

06.12.2025 20:10 —

👍 5

🔁 1

💬 0

📌 1

I've created a @gatsbyucl.bsky.social starter pack!

Let me know if you’d like to be included, or just jump in to see what we're talking about.

Either way, a retweet would be greatly appreciated! 🚀

go.bsky.app/4g6Ro4U go.bsky.app/AErHDon

04.03.2025 13:56 —

👍 10

🔁 4

💬 0

📌 0

When an Audio AI Model Plays 🧠 Dress-Up

This is really about fine-tuning an audio artificial neural network to align its representations with human brain data, and seeing what happens next

CNeuroMod is now on substack, and our first post highlights a new study showing that a tiny 2M-parameter audio model can be meaningfully fine-tuned on an individual brain with benefits for downstream AI tasks. open.substack.com/pub/cneuromo...

21.11.2025 17:37 —

👍 1

🔁 1

💬 0

📌 0

Very much looking forward to trying this.

18.11.2025 16:01 —

👍 1

🔁 0

💬 0

📌 0

The 2nd CogBases Workshop is this 4 & 5 Nov at Institut Pasteur!

We'll discuss the latest in open science methods for analysing brain imaging data. Registration is free but mandatory.

neuroanatomy.github.io/cogbases-2025/

@k4tj4.bsky.social @cmaumet.bsky.social @bthirion.bsky.social @demw.bsky.social

14.10.2025 10:33 —

👍 12

🔁 9

💬 1

📌 1

OpenNeuro @openneuro.bsky.social just hit a huge milestone: 1500 datasets! Congrats to the team on making this project so successful over the last 7 years.

13.10.2025 23:35 —

👍 142

🔁 30

💬 2

📌 1

The Biological Psychiatry family of journals is now officially on Bluesky!

Follow us for the latest research in psychiatric neuroscience, cognitive neuroimaging, and global open science from our three leading journals.

25.09.2025 09:36 —

👍 67

🔁 23

💬 0

📌 0

And to match their spirit of openness, we’ve released the code, containers, and data. Anyone can rerun the entire analysis.

Co-lead authors: @clarken.bsky.social and @surchs.bsky.social

Paper: doi.org/10.1093/giga...

Github: github.com/SIMEXP/autis...

Zenodo archive: doi.org/10.5281/zeno...

End/🧵

08.09.2025 14:04 —

👍 4

🔁 0

💬 0

📌 0

The signature was discovered in a balanced cohort of ~1,000 individuals and replicated in an independent sample (thanks to the wonderful ABIDE I & II participants and the researchers who shared their data 💜💜💜). 5/🧵

08.09.2025 14:03 —

👍 2

🔁 0

💬 1

📌 0

Scatterplot showing individual risk (positive predictive value) versus prevalence in the general population for different autism risk markers. Rare monogenic syndromes (green diamonds) confer very high risk but are extremely rare; common genetic variants (yellow triangles) are widespread but confer very low risk; copy number variants (pink triangles) sit in between. Previous imaging-based models (red dots) achieve modest risk. The new High-Risk Signature (orange circle) replicates across datasets, confers a sevenfold increased risk of autism, and is present in about 1 in 200 people.

A positive result means someone is about seven times more likely to actually have an autism diagnosis. This rivals the best imaging markers, while still being found in about 1 in 200 people in the general population. 4/🧵

08.09.2025 14:03 —

👍 4

🔁 0

💬 1

📌 0

Diagram comparing how different autism risk markers identify individuals. Each circle represents the overlap between people labeled by a marker (grey), people with autism (purple), and those labeled who actually have autism (blue). Monogenic syndromes label very few people but with high accuracy; existing imaging models label many people but with low accuracy; the High-Risk Signature (HRS) approach identifies a small subset with a higher proportion of true autism cases.

We turned the problem on its head. Instead of trying to classify everyone, we built a brain signature that only makes predictions when it’s confident. 3/🧵

08.09.2025 14:01 —

👍 2

🔁 0

💬 1

📌 0

Real life isn’t balanced. Autism affects about 1% of the population. In that setting, a biomarker with 80% balanced accuracy would catch one true case for every twenty false alarms. 2/🧵

08.09.2025 14:00 —

👍 1

🔁 0

💬 1

📌 0
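
The base-rate arithmetic in post 2/🧵 can be checked with a few lines of Python. This is a minimal sketch, not the authors' analysis: it assumes that "80% balanced accuracy" means sensitivity and specificity are both 0.80 (matched, as described in post 1/🧵) and that prevalence is exactly 1%. The function names are hypothetical and chosen for illustration only.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Probability that a positive test is a true case (Bayes' rule)."""
    true_pos = sensitivity * prevalence                    # fraction of population: true positives
    false_pos = (1.0 - specificity) * (1.0 - prevalence)   # fraction of population: false positives
    return true_pos / (true_pos + false_pos)


def false_alarms_per_true_case(sensitivity, specificity, prevalence):
    """Expected number of false positives for each true positive."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return false_pos / true_pos


if __name__ == "__main__":
    # Assumed values: sensitivity = specificity = 0.80, prevalence = 1%.
    sens, spec, prev = 0.80, 0.80, 0.01
    ppv = positive_predictive_value(sens, spec, prev)
    ratio = false_alarms_per_true_case(sens, spec, prev)
    print(f"PPV: {ppv:.3f}")
    print(f"False alarms per true case: {ratio:.2f}")
```

Under these assumed values the ratio comes out to roughly 25 false alarms per true case (a positive predictive value just under 4%), the same order of magnitude as the "one in twenty" quoted in the post. Changing `prev` shows how quickly the ratio improves when cases and controls are split 50/50.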

Many brain imaging “biomarkers” for autism have been proposed. Most aim for balanced accuracy (matching sensitivity/specificity) on datasets where cases and controls are split 50/50. 1/🧵

08.09.2025 13:59 —

👍 12

🔁 8

💬 1

📌 0

"A murder of butterflies" has a nice ring to it.

24.08.2025 12:03 —

👍 0

🔁 0

💬 0

📌 0

This year at #CCN25 we showed the importance of OOD evaluation to adjudicate between brain models. Our results demonstrate these trivial but key facts:

- high encoding accuracy ≠ functional convergence

- human brain ≠ NES console ≠ 4-layer CNN

- videogames are cool

w/ @lune-bellec.bsky.social 🙌

13.08.2025 15:51 —

👍 7

🔁 3

💬 0

📌 1

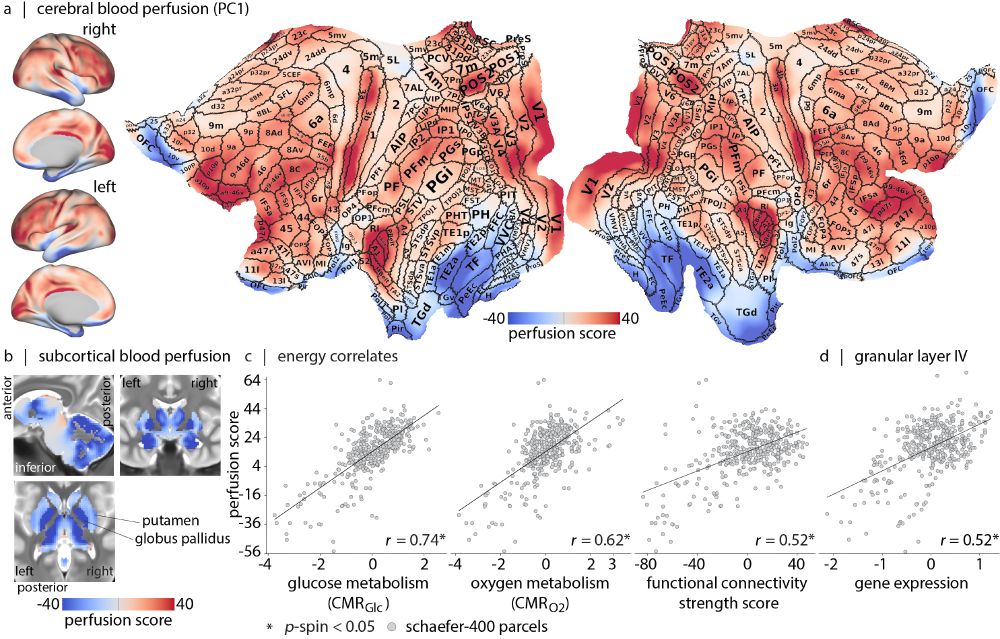

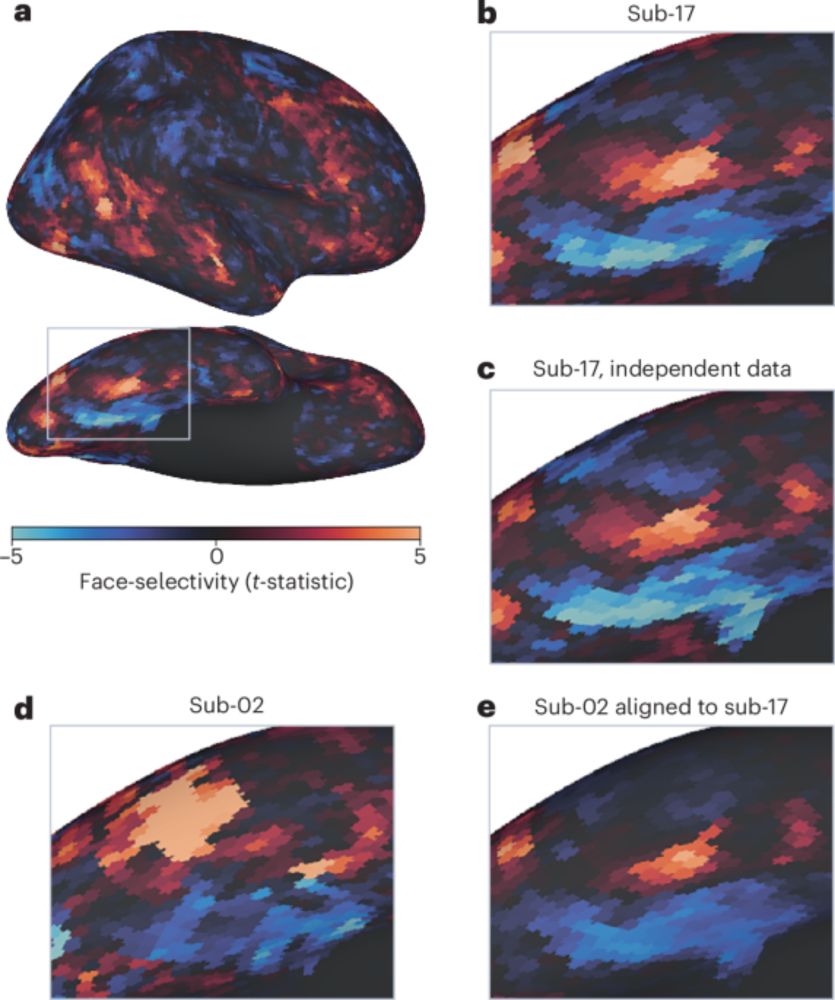

Mapping cerebral blood perfusion and its links to multi-scale brain organization across the human lifespan | doi.org/10.1371/jour...

How does blood perfusion map onto canonical features of brain structure and function? @asafarahani.bsky.social investigates @plosbiology.org ⤵️

08.08.2025 14:24 —

👍 56

🔁 16

💬 1

📌 2

Poster titled "Neuromod: The Courtois Project on Neuronal Modelling" with logos from Université de Montréal and the Centre de recherche de l'Institut universitaire de gériatrie de Montréal.

Large bold text reads:

6 BRAINS – 987H-fMRI – 18 TASKS

Followed by the subtitle:

Naturalistic & Controlled – Multimodal / Perception + Action

Each letter in "18 TASKS" contains thumbnails from various visual tasks.

The central table summarizes 32 datasets grouped by primary domain (Vision, Audition, Language, Memory, Action, Other). For each dataset, the table indicates which stimulus modalities were used (Vision, Speech, Audio, Motion), what responses were collected (Physiology, Eye tracking, Explanations, Actions), and how many sessions and subjects were scanned. The overall visual style is playful and bold, with rainbow colors for modality types and rich iconography indicating data types.

In 2019, the CNeuroMod team and 6 participants began a massive data collection journey: twice-weekly MRI scans for most of 5 years. Data collection is now complete! 1/🧵

07.08.2025 20:30 —

👍 14

🔁 9

💬 1

📌 0

Automated testing with GitHub Actions

Better Code, Better Science: Chapter 4, Part 7

Automated testing with GitHub Actions - the latest in my Better Code, Better Science series russpoldrack.substack.com/p/automated-...

05.08.2025 15:29 —

👍 10

🔁 1

💬 0

📌 0

I find AI coding most useful for commenting on, or suggesting changes to, what I do. Your disastrous experience with code generation matches mine. But as a sidekick it's incredibly positive IMO.

01.08.2025 21:10 —

👍 1

🔁 0

💬 0

📌 0

🥁... we are SO happy to officially announce that registration is now OPEN for our OHBM Virtual Satellite Meeting, taking place September 10-12!

This has been a major goal of the SEA-SIG for a while now and we're so excited to show you what we've been working on!

🌱🌎✨🧠

01.08.2025 15:51 —

👍 6

🔁 2

💬 0

📌 0

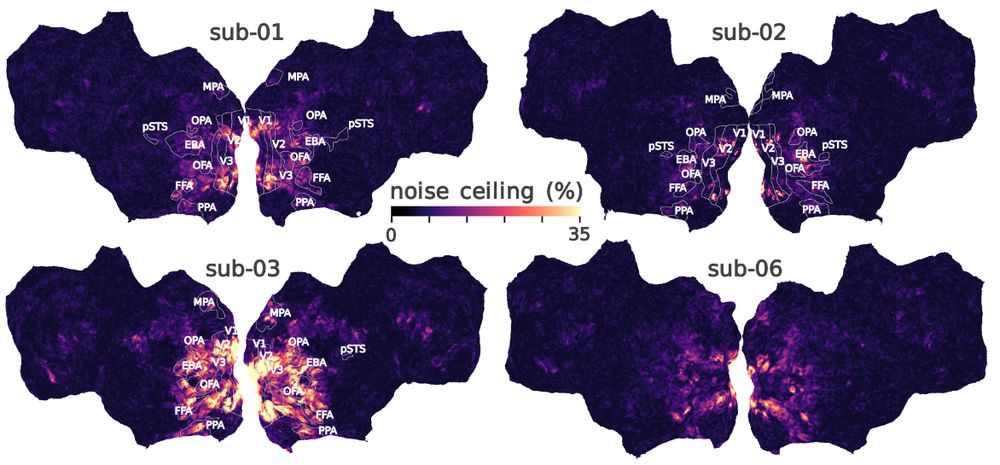

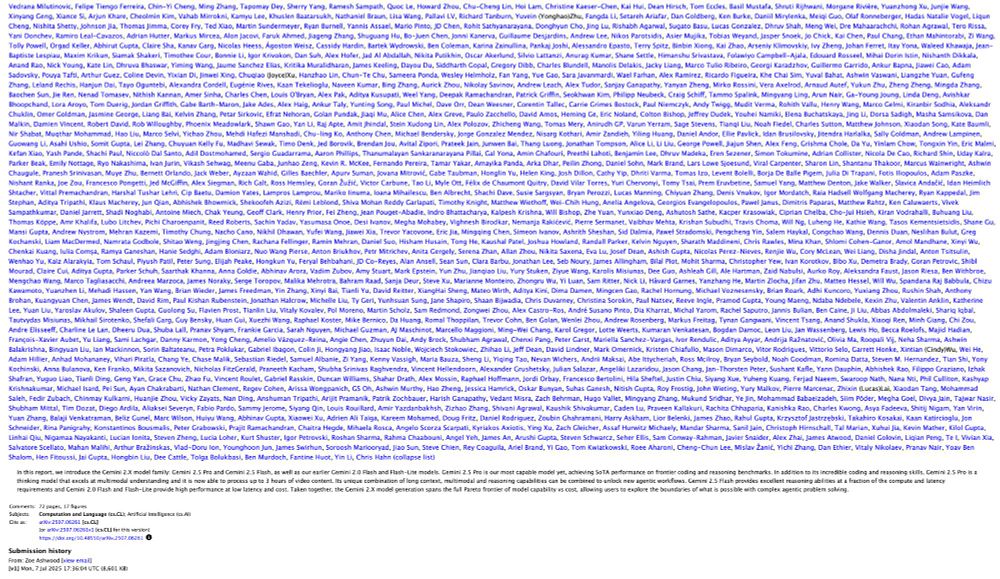

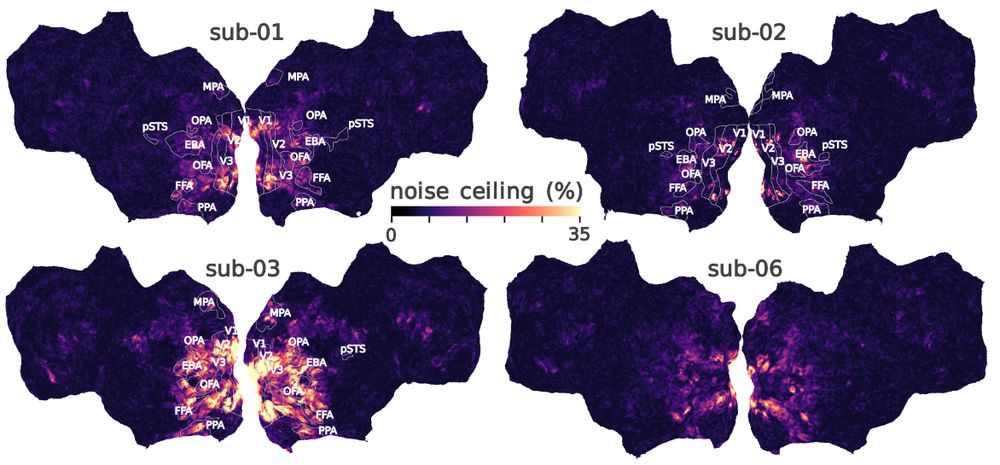

four brain maps showing noise ceiling estimates in response to image presentation

New CNeuroMod-THINGS open-access fMRI dataset: 4 participants · ~4,000 images (720 categories), each shown 3× (12k trials per subject) · individual functional localizers & NSD-inspired QC. Preprint: arxiv.org/abs/2507.09024 Congrats Marie St-Laurent and @martinhebart.bsky.social !!

30.07.2025 01:57 —

👍 35

🔁 17

💬 1

📌 0

Excited to co-organize our NeurIPS 2025 workshop on Foundation Models for the Brain and Body!

We welcome work across ML, neuroscience, and biosignals — from new approaches to large-scale models. Submit your paper or demo! 🧠 🧪 🦾

11.07.2025 19:51 —

👍 8

🔁 1

💬 0

📌 0

This is why I think the platonic rep hypothesis doesn’t apply to brain-ANN alignment, since most existing (functional?) models are implicitly or explicitly trained to mimic humans.

The assumption of PRH is that the networks are trained independently, which doesn’t hold in brain-ANN comparisons.

10.07.2025 15:02 —

👍 4

🔁 1

💬 1

📌 0