My article "Data is not available upon request" was published in Meta-Psychology. Very happy to see this out!

open.lnu.se/index.php/me...

@renebekkers.bsky.social

Can large language models stand in for human participants?

Many social scientists seem to think so, and are already using "silicon samples" in research.

One problem: depending on the analytic decisions made, you can basically get these samples to show any effect you want.

THREAD 🧵

How A Research Transparency Check Facilitates Responsible Assessment of Research Quality

13.09.2025 07:57

The social sciences face a replicability crisis. A key determinant of replication success is statistical power. We assess the power of political science research by collating over 16,000 hypothesis tests from about 2,000 articles in 46 areas of the discipline. Under generous assumptions, we show that quantitative research in political science is greatly underpowered: the median analysis has about 10% power, and only about 1 in 10 tests have at least 80% power to detect the consensus effects reported in the literature. We also find substantial heterogeneity in tests across research areas, with some being characterized by high power but most having very low power. To contextualize our findings, we survey political methodologists to assess their expectations about power levels. Most methodologists greatly overestimate the statistical power of political science research.

The pretty draft is now online.

Link to paper (free): www.journals.uchicago.edu/doi/epdf/10....

Our replication package starts from the raw data and we put real work into making it readable & setting it up so people could poke at it, so please do explore it: dataverse.harvard.edu/dataset.xhtm...

At the FORRT Replication Hub, our mission is to support researchers who want to replicate previous findings. We have now published a big new component with which we want to fulfill this mission: An open access handbook for reproduction and replication studies: forrt.org/replication_...

03.09.2025 06:54

We're bringing together members of the VU community, including research support staff and faculty, who will share their experiences and lessons learned from implementing a campus-wide Open Science program.

𝐑𝐞𝐠𝐢𝐬𝐭𝐞𝐫: cos-io.zoom.us/webin...

𝐃𝐚𝐭𝐞: Sep 10, 2025

𝐓𝐢𝐦𝐞: 9:00 AM ET

The full program for the PMGS Meta Research Symposium 2025 is online: docs.google.com/document/d/1... If you are interested in causal inference, systematic review, hypothesis testing, and preregistration, join us on October 17th in Eindhoven! Attendance is free!

20.08.2025 14:34

Coding errors in data processing are more likely to be ignored if the erroneous result is in line with what we want to see. The theoretical prediction made in this paper is very plausible; testing it empirically is perhaps a bit more challenging. But still, interesting. arxiv.org/pdf/2508.20069

30.08.2025 09:54

Thanks for mentioning it! Indeed, it's a teaching tool. Each entry is a risk factor, but some are worse than others, and their weights will vary from case to case. The website gives some meta-science insights for each issue and recommends a fix.

16.08.2025 13:33

A 25-year-old successful replication of a well-known finding in social psychology about the overestimation of self-interest in attitudes.

15.08.2025 11:39

A good systematic review/meta-analysis will evaluate the quality of each study and not just review the conclusions/data.

If the methodology doesn't include evaluation of quality, it's not a good source. Reviewing methodology is part of the vetting process.

The Center For Scientific Integrity, our parent nonprofit, is hiring! Two new positions:

-- Editor, Medical Evidence Project

-- Staff reporter, Retraction Watch

and we're still recruiting for:

-- Assistant researcher, Retraction Watch Database

#ai #llm #integrity #reliability #transparency

02.07.2025 06:00

As the wave of LLM-generated research swells, how can you tell whether it is legit? Introducing the high five: a checklist for the evaluation of knowledge claims by LLMs, other generative AI, and science in general.

01.07.2025 13:49

Very important evidence from Sweden showing that #business and #economics students become less #prosocial during their studies, while #law students do not: onlinelibrary.wiley.com/doi/full/10....

23.02.2025 15:12

Yes!

20.02.2025 09:13

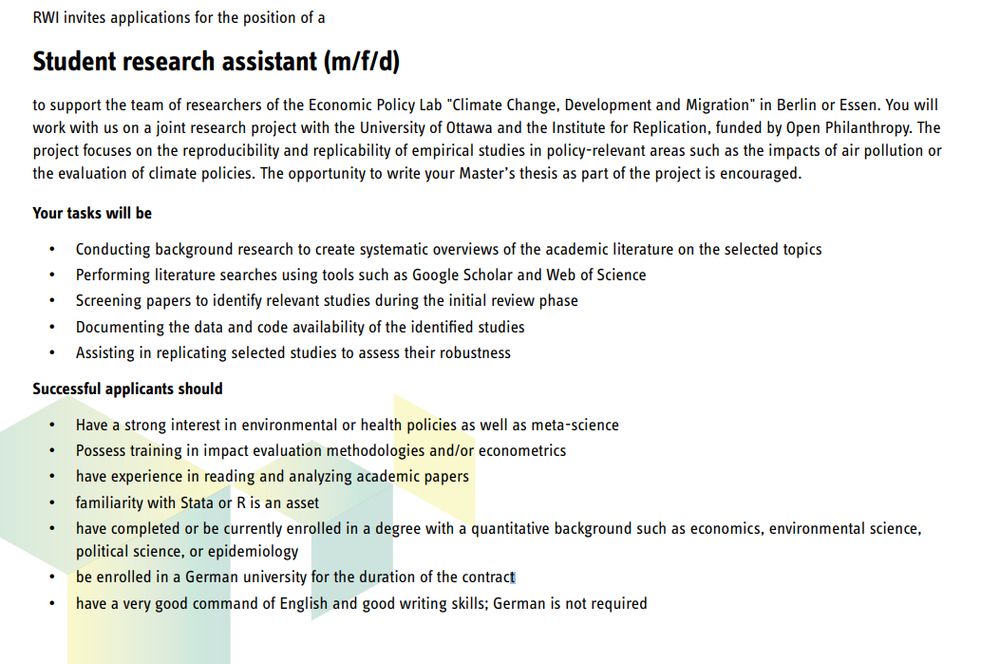

We are looking for RAs to work with us on a new meta-science & replication project, joint with @i4replication.bsky.social, now with focus on environmental (economics) topics such as air pollution & carbon pricing. RAs can work remotely but must be located in Germany www.rwi-essen.de/fileadmin/us...

30.01.2025 10:54

@ap.brid.gy @renebekkers.mastodon.social.ap.brid.gy

20.02.2025 07:39

@ap.brid.gy @renebekkers@mastodon.social

20.02.2025 07:37

@ap.brid.gy

20.02.2025 07:36