🎉 Congrats @peterbhase.bsky.social + best wishes for the @schmidtsciences.bsky.social scientist + @stanfordnlp.bsky.social visiting researcher roles! 🙂

Looking forward to your continued exciting research contributions in AI safety & interpretability, and your new grant-making contributions 🔥

15.07.2025 01:15 —

👍 2

🔁 0

💬 0

📌 0

Overdue job update — I am now:

- A Visiting Scientist at @schmidtsciences.bsky.social, supporting AI safety & interpretability

- A Visiting Researcher at Stanford NLP Group, working with @cgpotts.bsky.social

So grateful to keep working in this fascinating area—and to start supporting others too :)

14.07.2025 17:06 —

👍 5

🔁 1

💬 3

📌 0

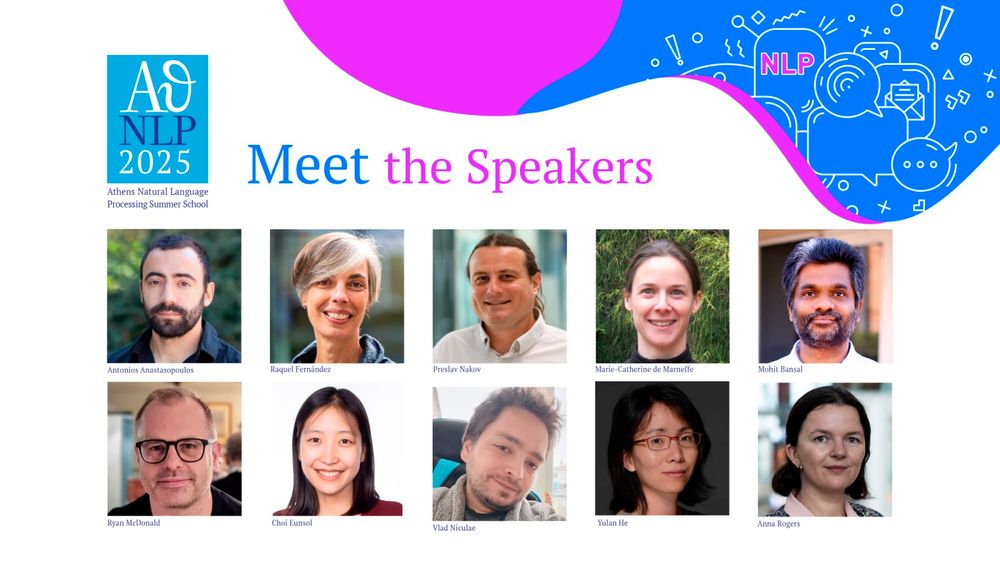

📢 Speakers Announcement #AthNLP2025

We’re thrilled to welcome a new lineup of brilliant minds to the ATHNLP stage!🚀

Meet our new NLP speakers shaping the future.

📅 Dates: 4-10 September 2025 athnlp.github.io/2025/speaker...

#ATHNLP #NLP #AI #MachineLearning #Athens

27.06.2025 14:49 —

👍 7

🔁 1

💬 1

📌 0

Looking forward to this year's edition! With great speakers: Ryan McDonald, Yulan He, @vn-ml.bsky.social, @antonisa.bsky.social, Raquel Fernandez, @annarogers.bsky.social, Preslav Nakov, @mohitbansal.bsky.social, @eunsol.bsky.social, Marie-Catherine de Marneffe!

06.06.2025 09:10 —

👍 6

🔁 3

💬 0

📌 0

the journey has been a great pleasure for me too @jmincho.bsky.social 🤗, and looking forward to your exciting work in the future! 💙

21.05.2025 12:18 —

👍 1

🔁 0

💬 0

📌 0

🔥 Huge CONGRATS to Jaemin + @jhucompsci.bsky.social! 🎉

Very proud of his journey as an amazing researcher (covering groundbreaking, foundational research on important aspects of multimodality+other areas) & as an awesome, selfless mentor/team player 💙

-- Apply to his group & grab him for gap year!

20.05.2025 18:18 —

👍 6

🔁 1

💬 1

📌 0

🚨 Introducing our @tmlrorg.bsky.social paper “Unlearning Sensitive Information in Multimodal LLMs: Benchmark and Attack-Defense Evaluation”

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

07.05.2025 18:54 —

👍 10

🔁 8

💬 1

📌 0

aww thanks for the kind words @esteng.bsky.social -- completely my pleasure 🤗💙

06.05.2025 00:04 —

👍 1

🔁 0

💬 0

📌 0

🔥 BIG CONGRATS to Elias (and UT Austin)! Really proud of you -- it has been a complete pleasure to work with Elias and see him grow into a strong PI on *all* axes 🤗

Make sure to apply for your PhD with him -- he is an amazing advisor and person! 💙

05.05.2025 22:00 —

👍 12

🔁 4

💬 1

📌 0

🌵 I'm going to be presenting PBT at #NAACL2025 today at 2PM! Come by poster session 2 if you want to hear about:

-- balancing positive and negative persuasion

-- improving LLM teamwork/debate

-- training models on simulated dialogues

With @mohitbansal.bsky.social and @peterbhase.bsky.social

30.04.2025 15:04 —

👍 8

🔁 3

💬 0

📌 0

I will be presenting ✨Reverse Thinking Makes LLMs Stronger Reasoners✨at #NAACL2025!

In this work, we show

- Improvements across 12 datasets

- Outperforms SFT with 10x more data

- Strong generalization to OOD datasets

📅4/30 2:00-3:30 Hall 3

Let's chat about LLM reasoning and its future directions!

29.04.2025 23:21 —

👍 5

🔁 3

💬 1

📌 0

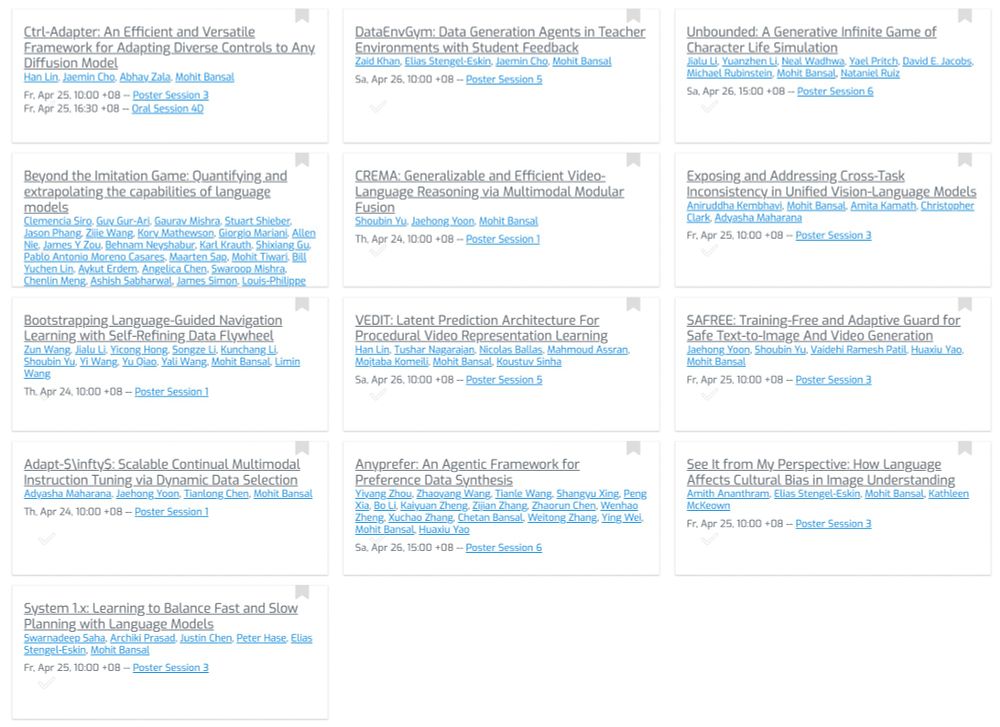

✈️ Heading to #NAACL2025 to present 3 main conf. papers, covering training LLMs to balance accepting and rejecting persuasion, multi-agent refinement for more faithful generation, and adaptively addressing varying knowledge conflict.

Reach out if you want to chat!

29.04.2025 17:52 —

👍 15

🔁 5

💬 1

📌 0

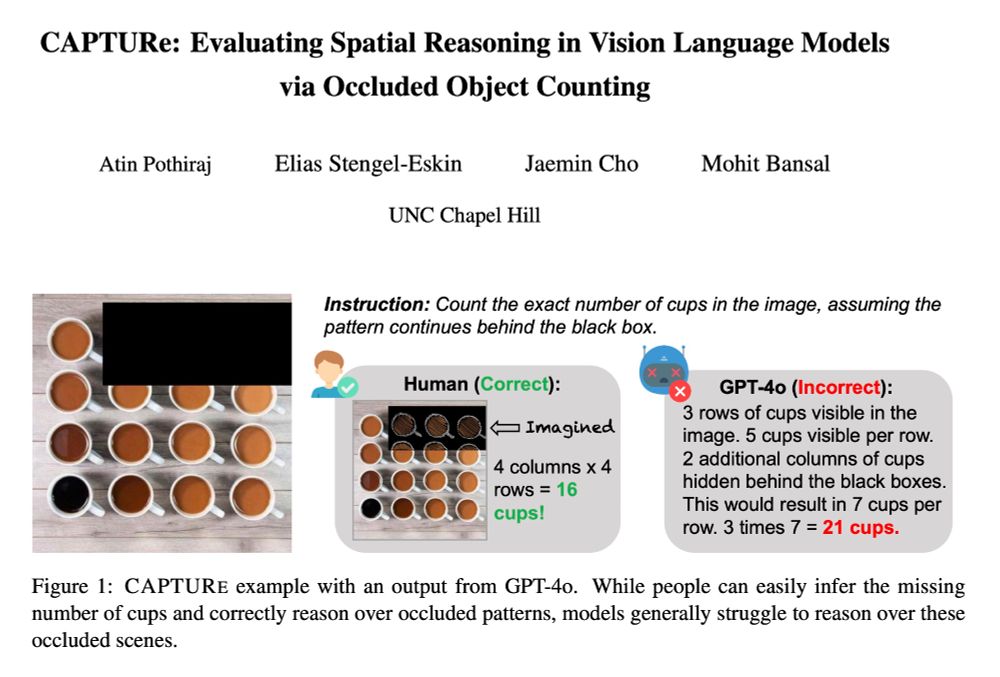

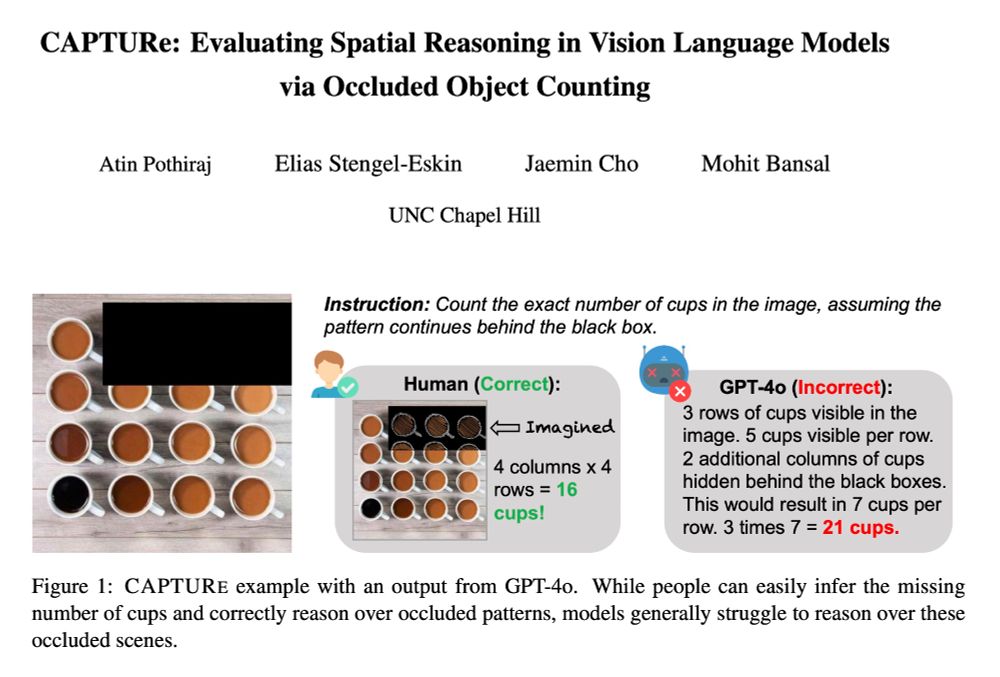

Check out 🚨CAPTURe🚨 -- a new benchmark testing spatial reasoning by making VLMs count objects under occlusion.

SOTA VLMs (GPT-4o, Qwen2-VL, Intern-VL2) have high error rates on CAPTURe (but humans have low error ✅) and models struggle to reason about occluded objects.

arxiv.org/abs/2504.15485

🧵👇

24.04.2025 15:14 —

👍 5

🔁 4

💬 1

📌 0

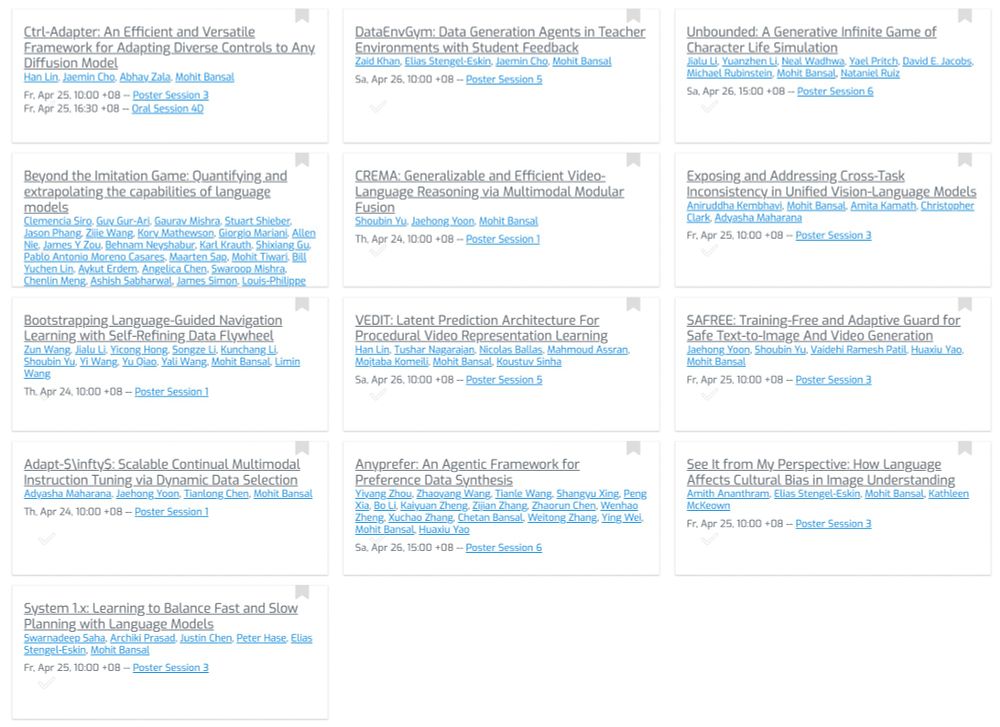

PS. and here are the presentation time slots/details of all the @iclr-conf.bsky.social and @tmlrorg.bsky.social papers 👇

21.04.2025 21:07 —

👍 2

🔁 0

💬 0

📌 0

In Singapore for #ICLR2025 this week to present papers + keynotes 👇, and looking forward to seeing everyone -- happy to chat about research, or faculty+postdoc+phd positions, or simply hanging out (feel free to ping)! 🙂

Also meet our awesome students/postdocs/collaborators presenting their work.

21.04.2025 16:49 —

👍 19

🔁 4

💬 1

📌 1

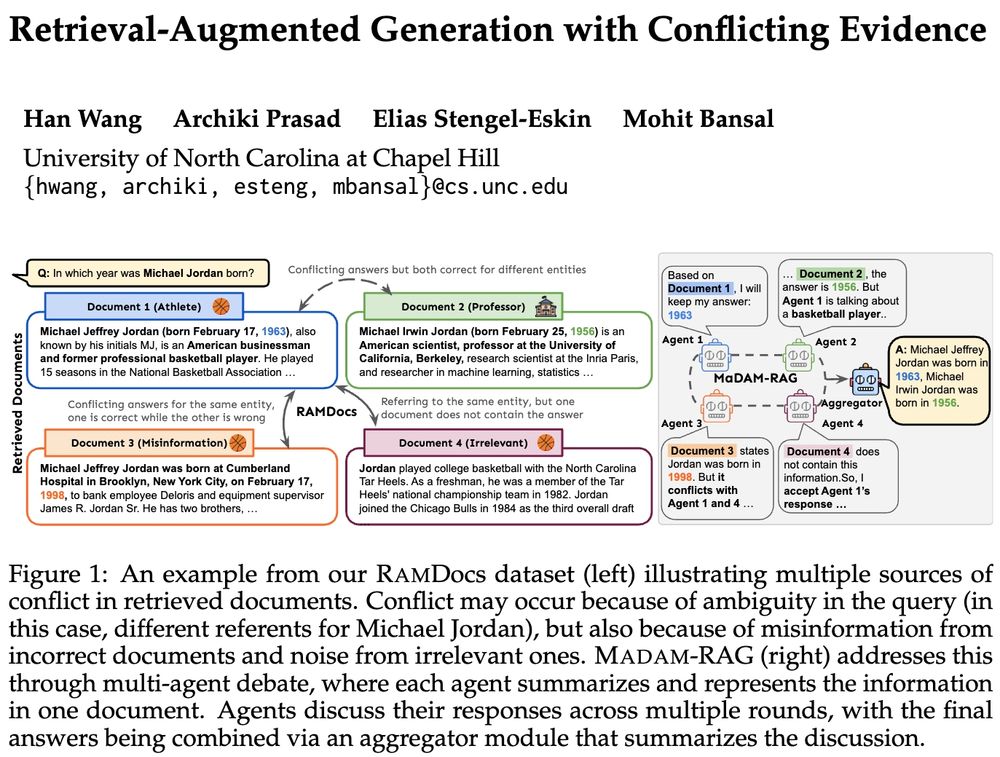

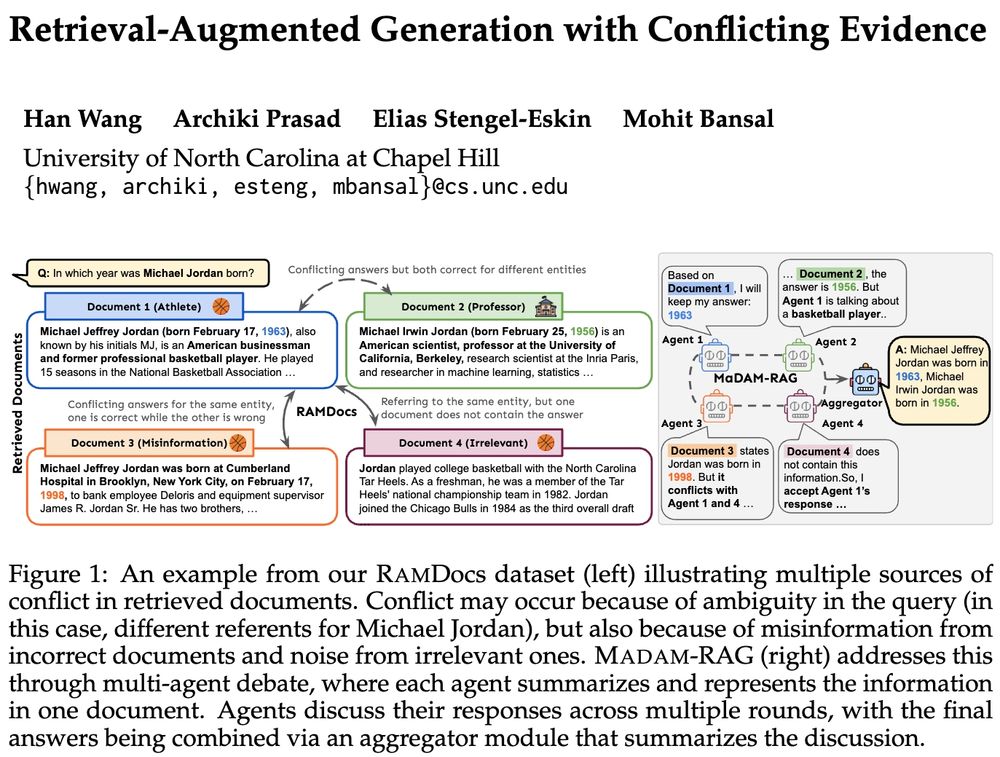

🚨Real-world retrieval is messy: queries are ambiguous or docs conflict & have incorrect/irrelevant info. How can we jointly address these problems?

➡️RAMDocs: challenging dataset w/ ambiguity, misinformation & noise

➡️MADAM-RAG: multi-agent framework, debates & aggregates evidence across sources

🧵⬇️

18.04.2025 17:05 —

👍 14

🔁 7

💬 3

📌 0

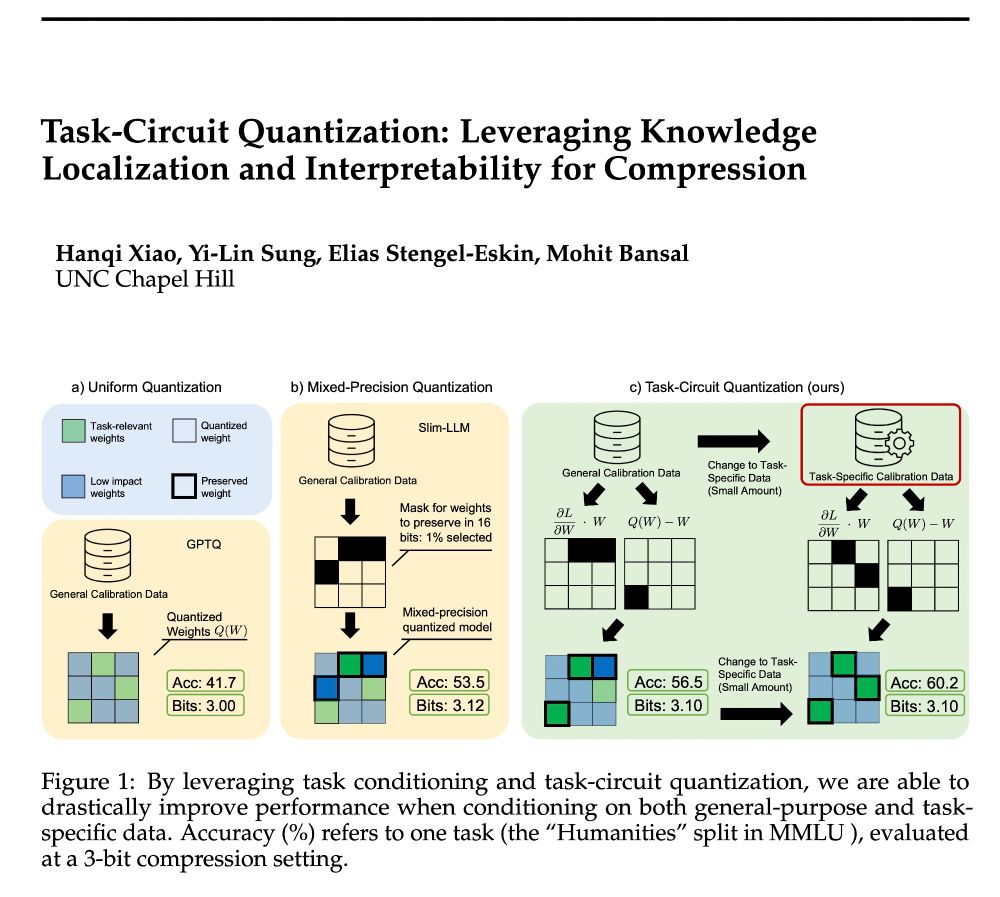

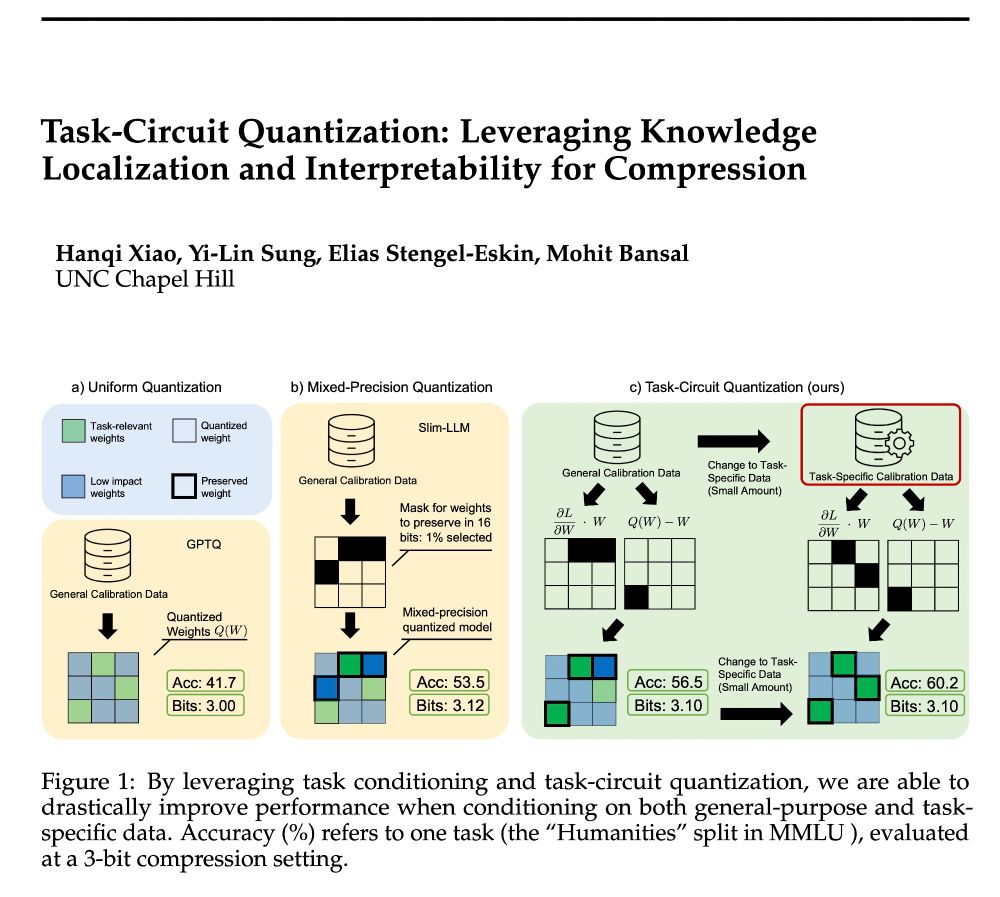

Excited to share my first paper as first author: "Task-Circuit Quantization" 🎉

I led this work to explore how interpretability insights can drive smarter model compression. Big thank you to @esteng.bsky.social, Yi-Lin Sung, and @mohitbansal.bsky.social for mentorship and collaboration. More to come

16.04.2025 16:19 —

👍 5

🔁 2

💬 0

📌 0

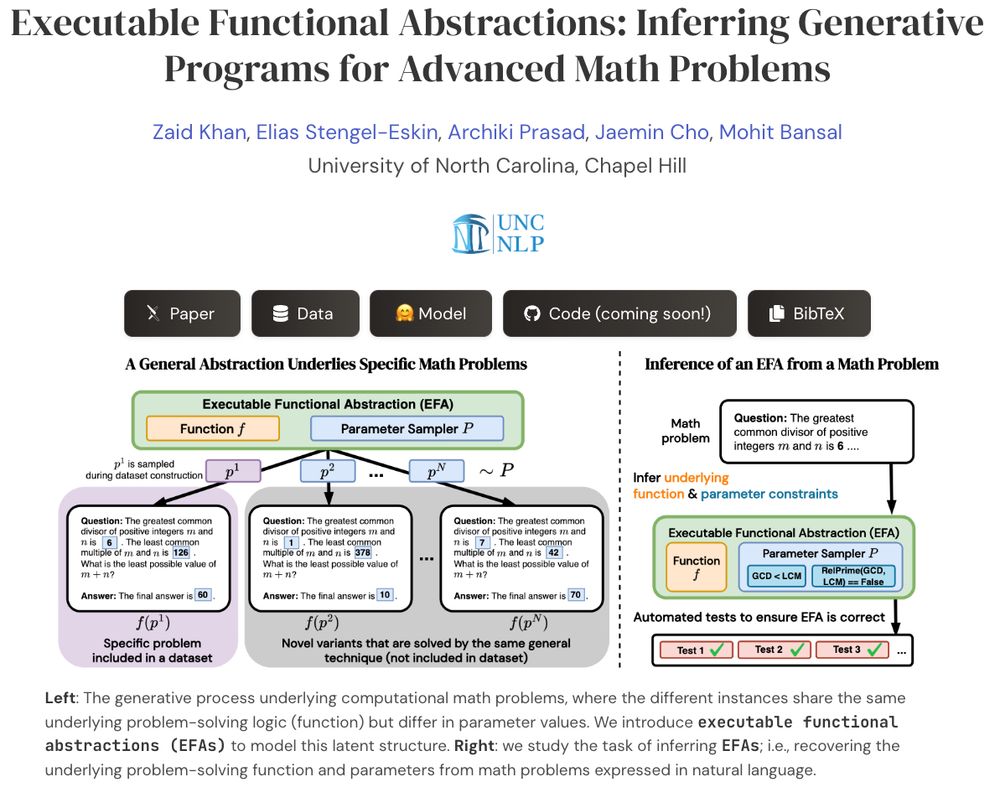

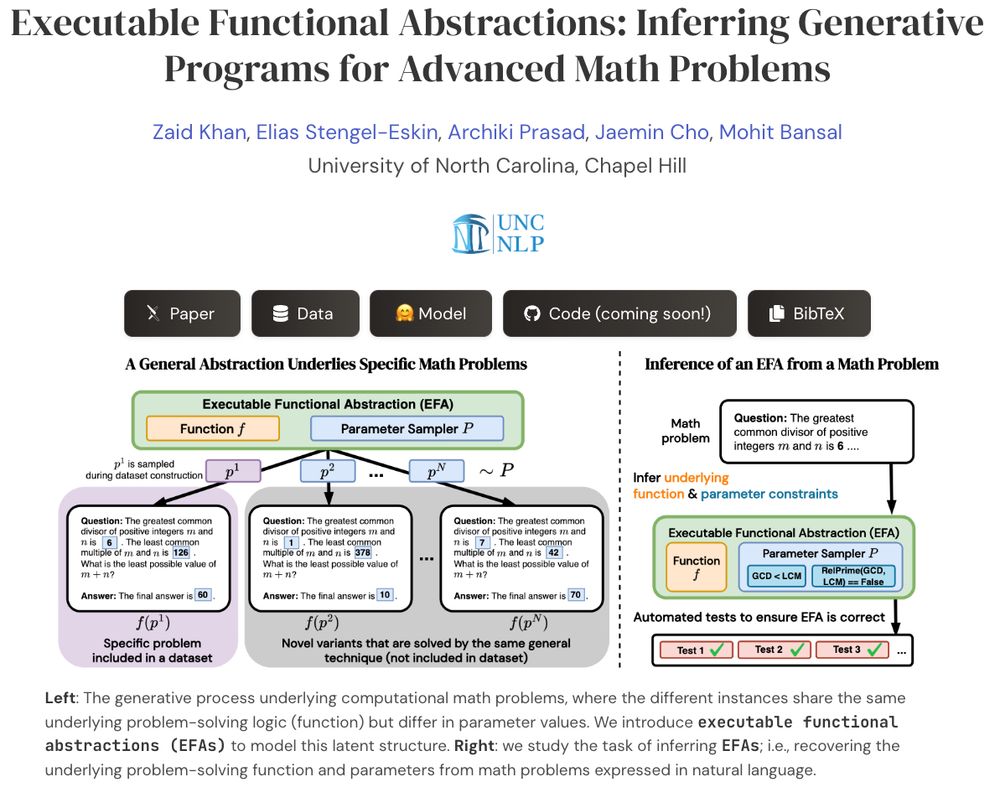

What if we could transform advanced math problems into abstract programs that can generate endless, verifiable problem variants?

Presenting EFAGen, which automatically transforms static advanced math problems into their corresponding executable functional abstractions (EFAs).

🧵👇

15.04.2025 19:37 —

👍 15

🔁 5

💬 1

📌 1

🚨Announcing TaCQ 🚨 a new mixed-precision quantization method that identifies critical weights to preserve. We integrate key ideas from circuit discovery, model editing, and input attribution to improve low-bit quant., w/ 96% 16-bit acc. at 3.1 avg bits (~6x compression)

📃 arxiv.org/abs/2504.07389

12.04.2025 14:19 —

👍 15

🔁 7

💬 1

📌 1

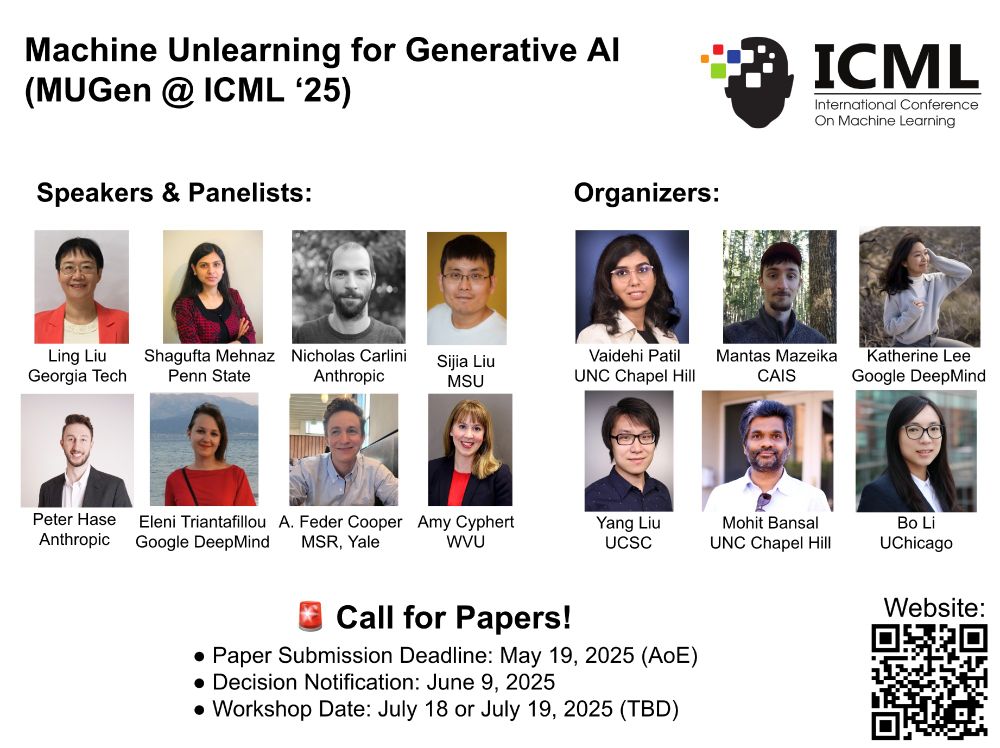

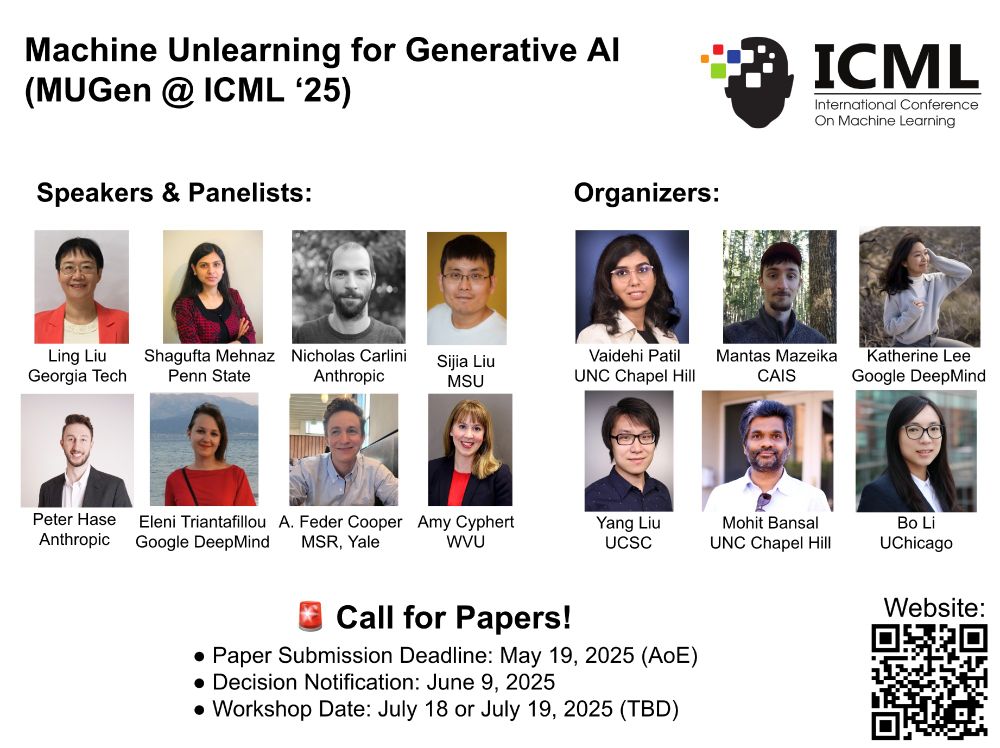

🚨Exciting @icmlconf.bsky.social workshop alert 🚨

We’re thrilled to announce the #ICML2025 Workshop on Machine Unlearning for Generative AI (MUGen)!

⚡Join us in Vancouver this July to dive into cutting-edge research on unlearning in generative AI with top speakers and panelists! ⚡

02.04.2025 15:59 —

👍 4

🔁 1

💬 1

📌 1

🎉🎉 Big congrats to @archiki.bsky.social on being awarded the @Apple AI/ML PhD Fellowship, for her extensive contributions in evaluating+improving reasoning in language/reward models and their applications to new domains (ReCEval, RepARe, System-1.x, ADaPT, ReGAL, ScPO, UTGen, GrIPS)! #ProudAdvisor

27.03.2025 19:41 —

👍 3

🔁 1

💬 1

📌 0

🚨UPCORE is our new method for balancing unlearning/forgetting with maintaining model performance.

Best part is it works by selecting a coreset from the data rather than changing the model, so it is compatible with any unlearning method, with consistent gains for 3 methods + 2 tasks!

25.02.2025 02:33 —

👍 4

🔁 2

💬 0

📌 0

🚨 Introducing UPCORE, to balance deleting info from LLMs with keeping their other capabilities intact.

UPCORE selects a coreset of forget data, leading to a better trade-off across 2 datasets and 3 unlearning methods.

🧵👇

25.02.2025 02:23 —

👍 12

🔁 5

💬 2

📌 1

Next, UTDebug enlarges UTGen’s sweet-spot with two key innovations:

1⃣ Boosts unit test accuracy through test-time scaling.

2⃣ Validates code by accepting edits only if the pass rate improves—and backtracking otherwise.

4/4

05.02.2025 18:53 —

👍 3

🔁 0

💬 0

📌 0
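The accept-or-backtrack rule from the UTDebug post above (keep an edit only if the unit-test pass rate improves) can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the names `pass_rate` and `debug_with_backtracking`, the `(input, expected_output)` test format, and the idea of representing edits as plain Python functions (standing in for LLM-proposed edits) are all assumptions made for the example.

```python
from typing import Callable, List, Tuple

# Hypothetical representations, chosen only for this sketch.
Program = Callable[[int], int]
UnitTest = Tuple[int, int]            # (input, expected output)
Edit = Callable[[Program], Program]   # stands in for an LLM-proposed edit


def pass_rate(program: Program, tests: List[UnitTest]) -> float:
    """Fraction of unit tests the program passes; exceptions count as failures."""
    if not tests:
        return 0.0
    passed = 0
    for test_input, expected in tests:
        try:
            if program(test_input) == expected:
                passed += 1
        except Exception:
            pass
    return passed / len(tests)


def debug_with_backtracking(
    program: Program, edits: List[Edit], tests: List[UnitTest]
) -> Tuple[Program, float]:
    """Apply edits one by one, keeping an edit only if the pass rate strictly improves."""
    best, best_rate = program, pass_rate(program, tests)
    for edit in edits:
        candidate = edit(best)
        rate = pass_rate(candidate, tests)
        if rate > best_rate:
            best, best_rate = candidate, rate  # accept the edit
        # otherwise: backtrack by discarding the candidate and keeping `best`
    return best, best_rate


# Toy usage: the intended behaviour is "double the input".
tests = [(0, 0), (1, 2), (3, 6)]
buggy = lambda x: x + 1
edits = [
    lambda p: (lambda x: x - 1),  # makes things worse, so it is rejected
    lambda p: (lambda x: x * 2),  # fixes the bug, so it is accepted
]
fixed, final_rate = debug_with_backtracking(buggy, edits, tests)
```

In this toy run the first edit lowers the pass rate and is discarded, while the second raises it and is kept. In practice the tests themselves would be model-generated and possibly noisy, which is why the thread pairs this rule with improving unit test accuracy.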

Key finding --> trade-off in UT generation: if UTs are made too easy, they catch only trivial errors or raise no errors at all; but if UTs are made too hard, the generator can’t predict the correct output! UTGen finds the sweet-spot between these two, where the model generates tests that help in debugging.

3/4

05.02.2025 18:53 —

👍 3

🔁 0

💬 1

📌 0

Generating good unit tests is challenging (even for humans) and requires:

1⃣ Error uncovering → Unit tests must target edge cases to catch subtle bugs.

2⃣ Code understanding → Knowing the correct output corresponding to a UT input.

2/4

05.02.2025 18:53 —

👍 3

🔁 0

💬 1

📌 0

🚨 Check out "UTGen & UTDebug" for learning to automatically generate unit tests (i.e., discovering inputs which break your code) and then applying them to debug code with LLMs, with strong gains (>12% pass@1) across multiple models/datasets! (see details in 🧵👇)

1/4

05.02.2025 18:53 —

👍 7

🔁 4

💬 1

📌 0

🚨 Excited to announce UTGen and UTDebug, where we first learn to generate unit tests and then apply them to debugging generated code with LLMs, with strong gains (+12% pass@1) on LLM-based debugging across multiple models/datasets via inf.-time scaling and cross-validation+backtracking!

🧵👇

04.02.2025 19:13 —

👍 8

🔁 5

💬 0

📌 0