I will write a blog post about my whole denoiser pipeline in a while, I hope.

14.02.2026 22:48

Ipotrick

@ipotrick.bsky.social


Mooore denoising tricks 😃!!

I tested variance as well as ray-length guided spatial filtering.

Variance guided filtering is good at preserving lighting silhouettes, while ray-length guiding adds a lot of contact hardening sharpness!
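A minimal sketch of the two guiding signals, under loud assumptions: variance guiding is modeled as an SVGF-style luminance edge-stopping weight, and ray-length guiding as a hypothetical mapping from ray hit distance to blur radius. `variance_weight`, `ray_length_radius` and all default parameters are made up for illustration; this is not the actual denoiser code.

```python
import math


def variance_weight(lum_center, lum_sample, variance, sigma_l=4.0, eps=1e-4):
    """SVGF-style luminance edge-stopping weight (an assumption, not the original code).

    The luminance difference is normalized by the local standard deviation:
    in noisy (high-variance) regions differing samples are still accepted,
    while at clean lighting edges (low variance) they are rejected, which
    keeps lighting silhouettes intact.
    """
    sigma = sigma_l * math.sqrt(max(variance, 0.0)) + eps
    return math.exp(-abs(lum_center - lum_sample) / sigma)


def ray_length_radius(hit_distance, max_radius_px=32.0, distance_scale=2.0, min_radius_px=1.0):
    """Blur radius that shrinks for short ray hit distances (hypothetical mapping).

    Short hits mean the indirect light comes from nearby occluders, as in
    contact shadows, so a small kernel keeps them sharp; long hits get a
    wide kernel.
    """
    t = hit_distance / (hit_distance + distance_scale)  # 0 at contact, approaches 1 far away
    return max(min_radius_px, max_radius_px * t)
```

In a spatial pass, each tap would be weighted by `variance_weight`, while `ray_length_radius` of the center pixel decides how far the taps reach.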

1. no guiding 2. variance guiding 3. ray-length guiding

More images of the improved denoiser.

This showcases how much smarter the prefilter is now.

It's able to keep much more lighting energy while being more temporally stable.

This is a better comparison than in my last post.

I found a few tricks to make the denoiser able to handle a much sparser signal while also increasing temporal stability.

With these tricks applied, indirect lighting now looks much better in dark areas. Small bright areas can now illuminate distant pixels much more accurately.

Before vs After:

I found a bunch of new tricks for denoising.

Using them, I re-architected the whole denoising pipeline.

Old: prefilter -> stochastic blur -> upscale -> temporal

New: prefilter -> stochastic blur -> temporal -> smooth blur -> upscale.

Perf and quality are much better with the new architecture.
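A rough way to see why moving the temporal pass before the upscale can pay off, assuming (this is not stated in the post) that the denoiser runs at half resolution per axis and only the upscale writes full resolution. Counting written pixels is a crude cost proxy; the `Pass` type and all scales are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pass:
    name: str
    scale: float  # resolution per axis relative to the output (assumed values below)


def pixels_processed(passes, width, height):
    """Sum of pixels each pass writes: a crude stand-in for GPU cost."""
    return sum(int(width * p.scale) * int(height * p.scale) for p in passes)


# Assumption: the denoiser works at half resolution and "upscale" outputs full resolution.
OLD = [Pass("prefilter", 0.5), Pass("stochastic blur", 0.5),
       Pass("upscale", 1.0), Pass("temporal", 1.0)]
NEW = [Pass("prefilter", 0.5), Pass("stochastic blur", 0.5),
       Pass("temporal", 0.5), Pass("smooth blur", 0.5), Pass("upscale", 1.0)]

if __name__ == "__main__":
    for label, passes in (("old", OLD), ("new", NEW)):
        print(label, " -> ".join(p.name for p in passes), pixels_processed(passes, 1920, 1080))
```

At 1920x1080 this reports 5184000 pixels for the old order and 4147200 for the new one: the new order has an extra pass but still touches fewer pixels, because the temporal pass no longer runs at full resolution.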

I think most raytracing effects and denoisers don't really consider the needs of fast games like shooters.

For my RTGI denoiser, I put a lot of care into getting an immediately readable and stable image from the first frame after disocclusion.

It's very satisfying.

The firefly filter is very aggressive. It makes the image much too dark. But its temporal stability improvements are massive.

It is worth the tradeoff for me.

The crude blur here is a mipmap blur, similar to the mipmap-based "history reconstruction" blur of NVIDIA ReBLUR.

The thin-ness factor is calculated with the firefly filter. It estimates how many valid spatial blur samples each pixel probably gets by running plane-distance tests against the surrounding pixels.
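The plane-distance test can be sketched like this, assuming world-space positions and normals per pixel. The threshold, the "fraction of rejected neighbors" formulation and the thinness-to-mip mapping for the crude blur are all hypothetical.

```python
def plane_distance(center_pos, center_normal, sample_pos):
    """Distance of a neighbor's position from the center pixel's tangent plane."""
    return abs(sum(n * (s - c) for n, s, c in zip(center_normal, sample_pos, center_pos)))


def thinness(center_pos, center_normal, neighbor_positions, max_plane_dist=0.05):
    """Fraction of neighbors that fail the plane test (hypothetical formulation).

    0.0: every neighbor lies on the center's plane, so the spatial blur gets
    all its samples. 1.0: no neighbor is usable, as on a thin pole or wire.
    """
    if not neighbor_positions:
        return 1.0
    valid = sum(1 for p in neighbor_positions
                if plane_distance(center_pos, center_normal, p) <= max_plane_dist)
    return 1.0 - valid / len(neighbor_positions)


def crude_blur_mip(thin, max_mip=4.0):
    """Hypothetical mapping: thinner pixels read a coarser (blurrier) mip."""
    return thin * max_mip
```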

My RTGI denoiser had temporal stability issues on thin geometry.

While tinkering, I found two tricks that improve this significantly:

1. Based on a per-pixel "thin-ness" factor, a crude blur is applied.

2. A geometric-mean-based firefly filter does the rest.

Works much better than I expected.
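One plausible reading of a geometric-mean-based firefly filter, as a sketch: clamp each pixel's luminance to a multiple of its neighborhood's geometric mean. The clamp factor `k`, the `eps` guard and the function names are assumptions.

```python
import math


def geometric_mean(values, eps=1e-6):
    """exp(mean(log v)); eps guards log(0) for black pixels."""
    return math.exp(sum(math.log(max(v, eps)) for v in values) / len(values))


def firefly_clamp(center, neighborhood, k=2.0):
    """Clamp the center luminance to k times the neighborhood's geometric mean (hypothetical).

    A single very bright sample raises the arithmetic mean a lot but the
    geometric mean only a little, so the threshold stays near the typical
    value. Clamping throws energy away, which also darkens the image.
    """
    return min(center, k * geometric_mean(neighborhood))
```

With a neighborhood of four 1.0 values and one 100.0 firefly, the arithmetic mean is 20.8 but the geometric mean is only about 2.5, so the firefly gets clamped to about 5.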

Those precious two hours late in the evening, when nobody wants anything from you anymore and nothing is left to get done, when you can do what you really want. Whole days, weeks, years, lives ought to be like that.

12.01.2026 22:16

It's quite wobbly and unstable. It could be made more stable, but since it's used for the RTGI at the moment, it has to adapt fast.

11.01.2026 20:46

I would like to show off the denoiser's improvements in motion, but video compression removes almost all of the noise, so I can't really show the difference :/

09.01.2026 01:59

I believe others don't do this because it requires quite a high probe resolution to work properly without losing too much quality.

Luckily, last year I developed a self-adapting, sparse, ray-traced probe volume that can reach densities high enough to capture the world in enough detail :)

This is how the world looks when projecting the closest probe's radiance onto the ray hit position.

With my RTGI, I am also doing something that I believe is a novel technique; I have not seen it done before.

While probes are often used in RTGI for the indirect diffuse part of hit shading, I use them to replace all RTGI hit shading.

On each hit, I project the closest probe's radiance onto the hit position.
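Projecting the closest probe's radiance onto a hit boils down to looking up the probe's stored radiance along the direction from the probe to the hit. A sketch, assuming the probe stores radiance in an octahedral map (the mapping is the standard one; the texel layout and function names are hypothetical), with nearest-texel lookup for brevity.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def oct_encode(d):
    """Standard octahedral mapping of a unit direction to [0, 1]^2."""
    x, y, z = d
    s = abs(x) + abs(y) + abs(z)
    x, y, z = x / s, y / s, z / s
    if z < 0.0:
        # Fold the lower hemisphere outward over the square's diagonals.
        x, y = (1.0 - abs(y)) * math.copysign(1.0, x), (1.0 - abs(x)) * math.copysign(1.0, y)
    return x * 0.5 + 0.5, y * 0.5 + 0.5


def closest_probe_radiance(probe_pos, radiance_texels, hit_pos):
    """Project the closest probe's stored radiance onto a ray hit (hypothetical layout).

    radiance_texels is an N x N octahedral map (list of rows) of the radiance
    the probe saw in each direction. Looking it up along probe -> hit returns
    what the probe saw at the hit point. Assumes hit_pos != probe_pos.
    """
    n = len(radiance_texels)
    u, v = oct_encode(normalize(tuple(h - p for h, p in zip(hit_pos, probe_pos))))
    i = min(int(u * n), n - 1)
    j = min(int(v * n), n - 1)
    return radiance_texels[j][i]
```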

Unfortunately, image compression already removes quite a lot of the noise.

The noise is much worse in reality :)

More denoiser before and after screenshots :)

09.01.2026 01:50

dark scene before denoise

dark scene after denoise

daylight scene before denoise

daylight scene after denoise

I have been working on a denoiser for ray-traced diffuse global illumination in my hobby engine. I am now quite happy with it. It can create high-quality output with only 0.25 rays per pixel.

I am particularly proud of how stable it is in movement and on disocclusions.
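0.25 rays per pixel means one ray per 2x2 pixel quad each frame. A sketch of one way to distribute those rays; the rotating quad pattern and the names below are assumptions, since the post does not say how rays are assigned.

```python
# One pixel per 2x2 quad traces per frame; the slot rotates so every pixel
# gets a fresh ray every 4 frames (hypothetical pattern).
QUAD_ORDER = [(0, 0), (1, 1), (1, 0), (0, 1)]


def traces_this_frame(x, y, frame):
    return (x % 2, y % 2) == QUAD_ORDER[frame % 4]


def rays_per_pixel(width, height, frame):
    rays = sum(traces_this_frame(x, y, frame) for y in range(height) for x in range(width))
    return rays / (width * height)
```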

It's surprisingly detailed considering the low probe density.

It's also practically free, as it's trivial to calculate the radiance in the same shader as the irradiance per texel.

The radiance cache works by storing another layer of texels on each probe. The new texels store the raw radiance arriving from their direction, with no cosine convolution.
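A sketch of why the radiance layer is nearly free: both layers can be accumulated from the same batch of probe rays in one loop. The irradiance texel uses a cosine-weighted average (irradiance up to a factor of π), the radiance texel a plain average of the rays inside a small cone around its direction. The cone test, `cone_cos` and `update_texel` are assumptions, not the engine's code.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def update_texel(texel_dir, ray_dirs, ray_radiance, cone_cos=0.9):
    """Accumulate one probe texel's irradiance and radiance from the same rays (hypothetical)."""
    irr_sum = irr_w = 0.0
    rad_sum = rad_w = 0.0
    for d, radiance in zip(ray_dirs, ray_radiance):
        c = dot(texel_dir, d)
        if c > 0.0:
            # Cosine-weighted: the irradiance layer (cos convolve).
            irr_sum += radiance * c
            irr_w += c
        if c >= cone_cos:
            # Only rays close to the texel direction, no cosine: the radiance layer.
            rad_sum += radiance
            rad_w += 1.0
    irradiance = irr_sum / irr_w if irr_w > 0.0 else 0.0
    radiance_out = rad_sum / rad_w if rad_w > 0.0 else 0.0
    return irradiance, radiance_out
```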

When shading RTGI ray hits, I sample the radiance of the 8 closest probes by projecting it back onto the hit's world-space position.
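The 8-probe lookup can be sketched as a trilinear blend over the probe grid cell containing the hit, with each probe queried along its own probe-to-hit direction. This assumes a regular grid (the actual probe volume is sparse and self-adapting, which this ignores) and leaves out the visibility or normal weighting real probe systems usually add; `probe_radiance` is a hypothetical callback.

```python
import math
from itertools import product


def sample_8_probes(hit_pos, grid_origin, spacing, probe_radiance):
    """Trilinearly blend the projected radiance of the 8 probes around hit_pos (hypothetical).

    probe_radiance(ijk, direction) is assumed to return the radiance probe ijk
    stored for a world-space direction. Each probe is queried along the
    direction from itself to the hit, projecting its radiance onto the hit.
    """
    local = [(h - o) / spacing for h, o in zip(hit_pos, grid_origin)]
    base = [math.floor(c) for c in local]
    frac = [c - b for c, b in zip(local, base)]
    total = 0.0
    for offset in product((0, 1), repeat=3):
        weight = 1.0
        for f, o in zip(frac, offset):
            weight *= f if o else 1.0 - f
        if weight == 0.0:
            continue
        ijk = tuple(b + o for b, o in zip(base, offset))
        probe_pos = [g + i * spacing for g, i in zip(grid_origin, ijk)]
        d = [h - p for h, p in zip(hit_pos, probe_pos)]
        length = math.sqrt(sum(c * c for c in d))
        # Arbitrary direction if the hit sits exactly on the probe.
        direction = tuple(c / length for c in d) if length > 0.0 else (0.0, 0.0, 1.0)
        total += weight * probe_radiance(ijk, direction)
    return total
```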

Experimenting more with hybrid probe and per-pixel traced GI.

I now use a radiance cache to shade per-pixel ray-traced hits.

It saves a lot of performance and the quality is very close to proper hit shading.

This saves roughly 30-70% of the RTGI cost (local lights make this verrry worthwhile).

Perf is not too great right now: probes take around 500 µs to update and 600 µs to evaluate screen irradiance.

RTGI replaces the screen irradiance evaluation but currently takes around 2-3 ms.

The goal is to shoot short traces from pixels (up to maybe 15 meters).

On a miss, the ray would read the probe volume's radiance in the ray's direction.

The shorter ray length improves performance immensely.
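A sketch of the planned short-ray scheme, with every callback hypothetical: trace up to `max_dist` (15 m, from the post), shade real hits, and on a miss read the probe volume along the ray's direction. Where exactly the probe volume is sampled on a miss is not stated; the ray's end point is an assumption here.

```python
def shade_short_ray(origin, direction, trace, shade_hit, probe_radiance, max_dist=15.0):
    """Short per-pixel ray with a probe-volume fallback on miss (sketch; all callbacks hypothetical).

    trace(origin, direction, max_dist) returns the hit distance or None.
    shade_hit(hit_pos, direction) shades a surface hit.
    probe_radiance(pos, direction) reads the probe volume's radiance.
    """
    t = trace(origin, direction, max_dist)
    if t is None:
        # Nothing within max_dist: take the far-field lighting from the probes,
        # queried in the ray's direction at the ray's end point (an assumption).
        end = tuple(o + d * max_dist for o, d in zip(origin, direction))
        return probe_radiance(end, direction)
    hit = tuple(o + d * t for o, d in zip(origin, direction))
    return shade_hit(hit, direction)
```

Shorter rays generally traverse less of the acceleration structure, which is where the performance win would come from.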

This hybrid RTGI/probe GI is something that i believe could be very performant and pretty.

Started to experiment with ray-traced diffuse GI.

My probe GI works well, but it just cannot represent fine details with good performance/memory usage.

AO helps it a lot, but I want even more.

Most of the work on RTGI is denoising, very interesting work.

Here is the current state, probes only vs RTGI:

Great stuff 😍

11.08.2025 13:20

Yoo, timberdoodle is in there. Great projects all around :)

11.08.2025 13:12

An American Woodcock - a mostly tan/buff colored bird with a potato-like body, very long slender beak, and large eyes - posing among the leaf litter and green ivy on the ground in Bryant Park. The bird is mostly facing the camera, head turned ever so slightly to the left.

An American Woodcock walking among the leaf litter and green ivy on the ground in Bryant Park. The skyline is reflected in their left eye.

It’s Timberdoodle time 💛🪶🥔

American Woodcock in Bryant Park, NYC, March 2025

The tech and graphical advancements in Assassin's Creed Shadows are mighty impressive: Micropolygon geometry, fluid wind simulations, ray traced global illumination and so much more. How do they all work and compare to AC games of the past? Find out in my video below!

youtu.be/nhFkw5CqMN0

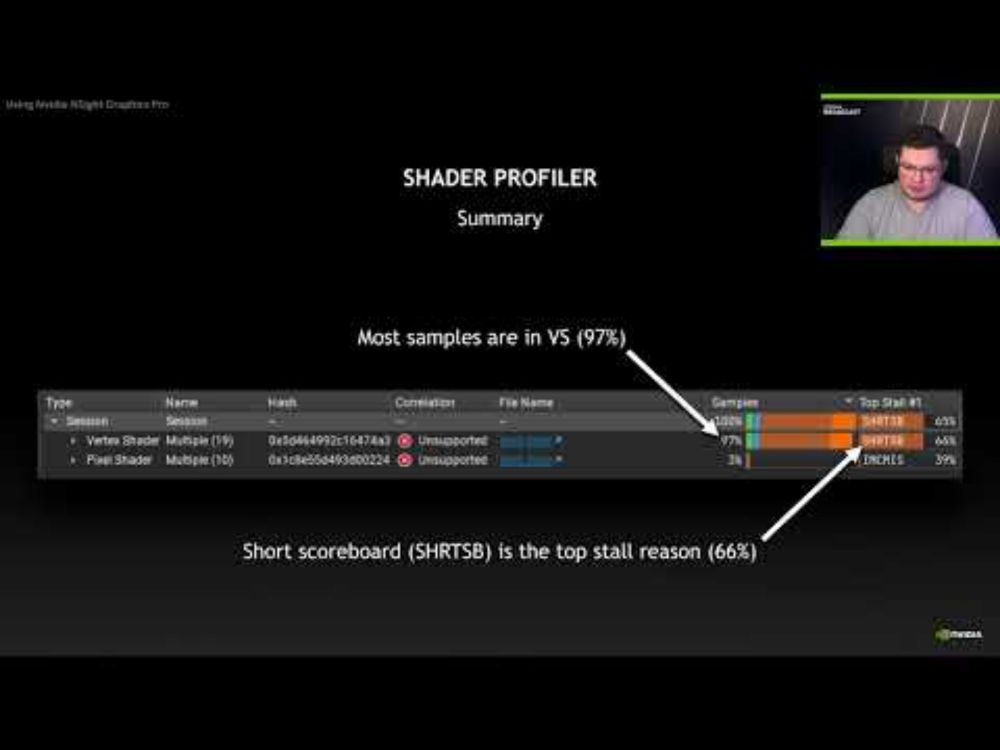

Thread divergence during constant buffer reads can have a big perf impact. This presentation discusses how to detect it on NVIDIA GPUs using GPU Trace and the Shader Profiler. It also shows how useful access to the ISA is for understanding what the shader does under the hood. www.youtube.com/watch?v=HSsP...

10.12.2024 17:44

While watching all the trailers from the Sony State of Play I could not help but notice that 90% of the games shown are Unreal Engine games... with each new scene/effect/character shown it is hard not to think "that will probably stutter on PC".

14.02.2025 07:30