You find the minimum and maximum values for each color channel in a 4x4x4 block. Then, for each voxel, you store 3 bits that tell you how to interpolate between those two endpoints. This is lossy compression, so you’ll need to keep the original content around in case you want to make edits.
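A minimal Python sketch of the scheme described above. It assumes 8-bit RGB tuples, endpoints built from the per-channel minimum and maximum, and a brute-force search for the best of the 8 interpolation steps per voxel. The search strategy and all function names are assumptions; the post doesn't say how indices are chosen.

```python
# Hypothetical sketch of min/max endpoint block compression for one 4x4x4 voxel block.
# Assumptions: colors are 8-bit RGB tuples, the endpoints are the per-channel min and
# max colors, and one 3-bit index (0..7) per voxel picks a point between them.

BLOCK_VOXELS = 4 * 4 * 4  # 64 voxels per block
INDEX_LEVELS = 8          # 3 bits per voxel -> 8 interpolation steps


def interpolate(lo, hi, i):
    """Linearly interpolate between the endpoints: i = 0 gives lo, i = 7 gives hi."""
    return tuple(round(lo[ch] + (hi[ch] - lo[ch]) * i / (INDEX_LEVELS - 1)) for ch in range(3))


def compress_block(colors):
    """Return (lo, hi, indices) for a list of 64 (r, g, b) tuples."""
    assert len(colors) == BLOCK_VOXELS
    lo = tuple(min(c[ch] for c in colors) for ch in range(3))
    hi = tuple(max(c[ch] for c in colors) for ch in range(3))
    indices = []
    for c in colors:
        # Try every interpolation step and keep the one with the lowest squared error.
        best = min(
            range(INDEX_LEVELS),
            key=lambda i: sum((interpolate(lo, hi, i)[ch] - c[ch]) ** 2 for ch in range(3)),
        )
        indices.append(best)
    return lo, hi, indices


def decompress_block(lo, hi, indices):
    """Rebuild the 64 approximate colors from the endpoints and 3-bit indices."""
    return [interpolate(lo, hi, i) for i in indices]
```

Because the endpoints come from per-channel extremes, a block whose channels vary in opposite directions (say red rising while blue falls) has colors that lie far from the line between the endpoints, so it compresses worse than a block with correlated channels.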

21.01.2026 10:54 — 👍 0 🔁 0 💬 0 📌 0

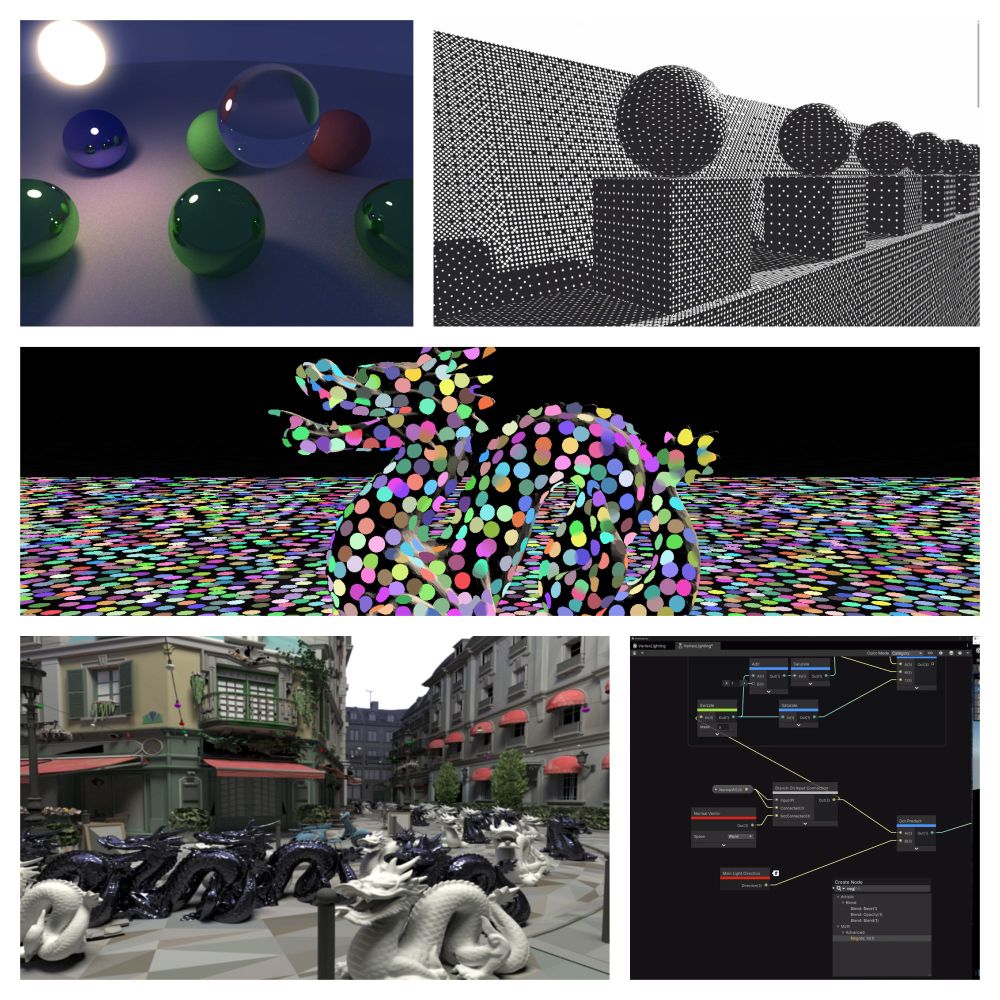

Uncompressed 64tree

Compressed 64tree

Did some experimenting with 4x4x4 voxel block compression today. I'm using a 64tree with block compression applied at the leaf nodes, with 3 interpolation bits per voxel. You can definitely see some compression artifacts. Both trees in this comparison store 8-bit RGB colors.
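A rough size estimate for one leaf block. This assumes the two endpoints are stored as 8-bit RGB colors and ignores any per-block header or tree overhead; neither detail is stated in the post:

$$
\begin{aligned}
\text{uncompressed} &= 64 \times 24\ \text{bits} = 1536\ \text{bits} = 192\ \text{bytes} \\
\text{compressed} &= \underbrace{2 \times 24\ \text{bits}}_{\text{endpoints}} + \underbrace{64 \times 3\ \text{bits}}_{\text{indices}} = 240\ \text{bits} = 30\ \text{bytes}
\end{aligned}
$$

Under those assumptions, that is a 6.4:1 reduction per block (192 / 30). In exchange, each voxel can only take one of 8 colors on the line between the endpoints.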

16.01.2026 22:47 — 👍 5 🔁 0 💬 1 📌 0

I could do something similar on the GPU: each lane finds its ray octant, then wave intrinsics check whether all lanes are in the same octant. If they are, they all go down the same templated code path. Otherwise, they all take the normal code path I already have, which avoids divergence.
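A CPU-side Python sketch of the idea, assuming a wave is modeled as a list of ray directions, one per lane. On the GPU, the uniformity test would be a wave intrinsic such as HLSL's `WaveActiveAllEqual`; here it is `all(...)`. The per-octant closures are hypothetical stand-ins for template instantiations, and all names are illustrative.

```python
# Hypothetical CPU emulation of a wave-uniform octant check.
# Each "lane" is one ray direction; a list of them stands in for a wave.


def ray_octant(direction):
    """Pack the sign bits of (dx, dy, dz) into a 3-bit octant index 0..7."""
    dx, dy, dz = direction
    return int(dx < 0) | (int(dy < 0) << 1) | (int(dz < 0) << 2)


def make_specialized_path(octant):
    # Stand-in for a template instantiation such as Traverse<Octant>: the per-axis
    # step signs are fixed once per octant instead of being checked per ray.
    signs = tuple(-1 if octant & (1 << axis) else 1 for axis in range(3))

    def traverse(direction):
        # A real kernel would walk the tree using `signs` for child ordering.
        return ("specialized", octant, signs)

    return traverse


SPECIALIZED = [make_specialized_path(o) for o in range(8)]


def traverse_generic(direction):
    # Generic path: signs are derived per ray at runtime.
    signs = tuple(-1 if d < 0 else 1 for d in direction)
    return ("generic", ray_octant(direction), signs)


def trace_wave(directions):
    octants = [ray_octant(d) for d in directions]
    # Equivalent of WaveActiveAllEqual(octant): true only if every lane agrees.
    if all(o == octants[0] for o in octants):
        path = SPECIALIZED[octants[0]]
    else:
        path = traverse_generic
    # Every lane takes the same path, so the wave never diverges on this branch.
    return [path(d) for d in directions]
```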

30.12.2025 21:38 — 👍 2 🔁 0 💬 0 📌 0

I did solve the depth values! They are correct now :) However, you can still sometimes see things through other objects in the video. It seems to happen where a world-space coordinate is 0; I haven't been able to find out why.

30.12.2025 19:19 — 👍 4 🔁 0 💬 0 📌 0

Oh, I see. No, I don't do that at the moment, and I personally haven't seen anyone else do it either.

30.12.2025 19:17 — 👍 2 🔁 0 💬 1 📌 0

Yes! I always enter the nearest bounding box first and push the other one onto the stack (if it was also a hit)
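A minimal Python sketch of nearest-first BVH2 traversal. It assumes nodes are dicts with an AABB (`lo`, `hi`) and either `left`/`right` children or a `prim` leaf id, and that no ray direction component is exactly zero. It only records the order in which leaves are reached. A real tracer would also shrink `t_max` on primitive hits and discard stack entries that lie beyond it.

```python
import math


def intersect_aabb(origin, inv_dir, lo, hi, t_max):
    """Slab test: return the entry distance along the ray, or None on a miss."""
    t0, t1 = 0.0, t_max
    for a in range(3):
        ta = (lo[a] - origin[a]) * inv_dir[a]
        tb = (hi[a] - origin[a]) * inv_dir[a]
        t0 = max(t0, min(ta, tb))
        t1 = min(t1, max(ta, tb))
    return t0 if t0 <= t1 else None


def traverse(root, origin, direction, t_max=math.inf):
    """Return leaf ids in the order a nearest-first traversal reaches them."""
    inv_dir = tuple(1.0 / d for d in direction)  # assumes no zero components
    order = []
    if intersect_aabb(origin, inv_dir, root["lo"], root["hi"], t_max) is None:
        return order
    node, stack = root, []
    while node is not None:
        if "prim" in node:
            order.append(node["prim"])
            node = stack.pop() if stack else None
            continue
        hits = []
        for child in (node["left"], node["right"]):
            t = intersect_aabb(origin, inv_dir, child["lo"], child["hi"], t_max)
            if t is not None:
                hits.append((t, child))
        hits.sort(key=lambda h: h[0])
        if len(hits) == 2:
            stack.append(hits[1][1])  # the farther child waits on the stack
        if hits:
            node = hits[0][1]  # enter the nearest child immediately
        else:
            node = stack.pop() if stack else None
    return order
```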

30.12.2025 18:48 — 👍 2 🔁 0 💬 1 📌 0

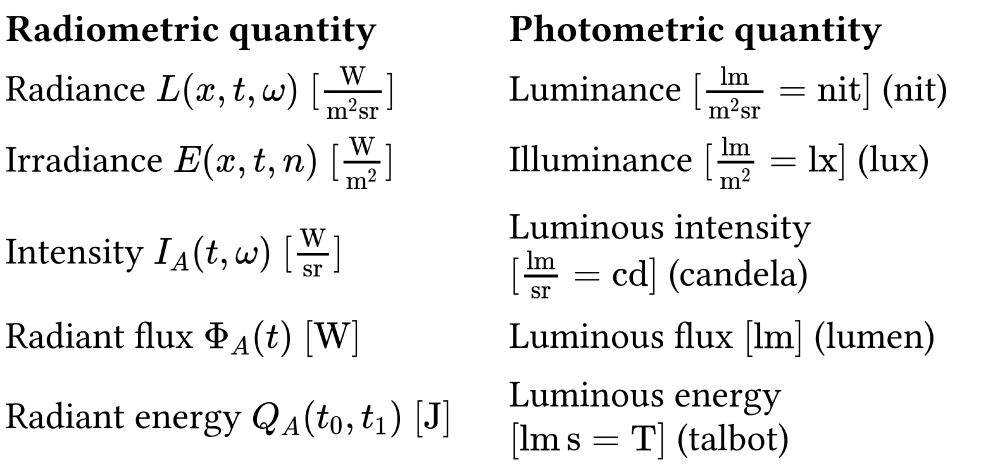

Been working on voxel ray tracing again lately, this time using a BVH2 TLAS and SVT64 (64tree) BLASes. The heat gradient in the video shows the step count, from 0 to 128. This scene contains 256 voxel dragons, each model being 256^3 voxels :)
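A hypothetical Python sketch of how a 64tree (SVT64) node can address its children. It assumes each node stores a 64-bit occupancy mask plus the index of its first child, with occupied children packed contiguously and slot `x + 4*y + 16*z` for child `(x, y, z)`. The layout and names are illustrative, not taken from the project.

```python
from dataclasses import dataclass


@dataclass
class Node64:
    child_mask: int   # bit s set -> a child exists in slot s (64 slots, 4x4x4)
    first_child: int  # index of the first stored child in a flat node array


def slot_index(x, y, z):
    """Assumed slot ordering inside the parent's 4x4x4 grid."""
    assert 0 <= x < 4 and 0 <= y < 4 and 0 <= z < 4
    return x + 4 * y + 16 * z


def child_index(node, x, y, z):
    """Return the array index of child (x, y, z), or None if that slot is empty."""
    bit = 1 << slot_index(x, y, z)
    if not node.child_mask & bit:
        return None
    # Count occupied slots below this one: that is the child's offset in the packed array.
    below = node.child_mask & (bit - 1)
    return node.first_child + bin(below).count("1")


def depth_for_resolution(resolution):
    """Each 64tree level splits every axis by 4, so depth = log4(resolution)."""
    depth, size = 0, 1
    while size < resolution:
        size *= 4
        depth += 1
    return depth
```

With this layout, a 256^3 model like the dragons above fits in 4 levels of 4x4x4 subdivision, since 4^4 = 256.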

30.12.2025 14:43 — 👍 21 🔁 3 💬 2 📌 0

At the time I wasn’t aware of FLIP for image comparisons, so I didn’t do a FLIP comparison back then :p

26.12.2025 14:36 — 👍 2 🔁 0 💬 0 📌 0

Thank you! :)

Yes, we are fortunate to have access to PS5 development through our Uni.

03.07.2025 18:45 — 👍 1 🔁 0 💬 0 📌 0

A first pass determines which 8x8x8 chunks need to be updated; a second, indirectly dispatched pass then re-voxelizes only those chunks.
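A CPU-side Python sketch of the two passes. It assumes change regions arrive as inclusive voxel-space bounding boxes and that pass 2 runs one thread group per dirty chunk. On the GPU, pass 1 would typically append chunk ids through an atomic counter and write the group count into an indirect-argument buffer, which is then consumed by a call such as Vulkan's `vkCmdDispatchIndirect` or D3D12's `ExecuteIndirect`. The function names here are hypothetical.

```python
CHUNK = 8  # chunk edge length in voxels


def mark_dirty_chunks(changed_bounds, grid_chunks):
    """Pass 1: return sorted linear ids of chunks overlapped by any changed box.

    changed_bounds: list of ((x0, y0, z0), (x1, y1, z1)) inclusive voxel bounds.
    grid_chunks: number of chunks along each axis.
    """
    cx, cy, _ = grid_chunks
    dirty = set()
    for lo, hi in changed_bounds:
        # Convert voxel bounds to chunk bounds, clamped to the grid.
        c_lo = [max(0, lo[a] // CHUNK) for a in range(3)]
        c_hi = [min(grid_chunks[a] - 1, hi[a] // CHUNK) for a in range(3)]
        for z in range(c_lo[2], c_hi[2] + 1):
            for y in range(c_lo[1], c_hi[1] + 1):
                for x in range(c_lo[0], c_hi[0] + 1):
                    dirty.add(x + cx * (y + cy * z))
    return sorted(dirty)


def build_indirect_args(dirty_chunks):
    """Group counts for the indirect dispatch: one group per dirty chunk along X."""
    return (len(dirty_chunks), 1, 1)
```

When nothing changed, the group count is zero, so the second pass costs almost nothing.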

A big optimization I worked on after my last post was making our voxelizer lazy, so it only updates the parts of the scene that changed. This made a huge difference to our performance and allowed us to push for larger levels!

Below is a high-level drawing of how it works:

02.07.2025 19:02 — 👍 3 🔁 0 💬 0 📌 0

A picture showing the render graph interface, which uses a builder pattern.

The engine supports both PC and PS5 through a platform-agnostic render graph I wrote.

It was a big gamble: I had written it as a prototype in 2 weeks. But it really paid off in the end, saving us a ton of time and keeping our renderer clean.

Below is a code snippet showcasing the interface :)
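As a hypothetical illustration of a builder-style render graph in general (the names below are not the engine's actual interface), each pass can declare the resources it reads and writes, and the graph can cull passes that don't contribute to the requested outputs. This sketch assumes passes are declared in a valid producer-before-consumer order and omits resource allocation and barrier placement.

```python
class PassBuilder:
    """Collects one pass's declared reads/writes, then registers it with the graph."""

    def __init__(self, graph, name):
        self.graph = graph
        self.name = name
        self.reads = []
        self.writes = []
        self.execute_fn = None

    def read(self, resource):
        self.reads.append(resource)
        return self  # returning self enables method chaining

    def write(self, resource):
        self.writes.append(resource)
        return self

    def execute(self, fn):
        self.execute_fn = fn
        self.graph.passes.append(self)
        return self.graph


class RenderGraph:
    def __init__(self):
        self.passes = []

    def add_pass(self, name):
        return PassBuilder(self, name)

    def compile(self, outputs):
        """Keep only passes that (transitively) contribute to `outputs`."""
        live = set(outputs)
        kept = []
        # Walk backwards so consumers are visited before their producers.
        for p in reversed(self.passes):
            if live.intersection(p.writes):
                kept.append(p)
                live.update(p.reads)
        return list(reversed(kept))

    def run(self, outputs):
        for p in self.compile(outputs):
            p.execute_fn()
```

Usage might look like `g.add_pass("lighting").read("albedo").read("depth").write("hdr").execute(draw_lighting)`. A debug pass whose output nobody requests is then skipped automatically.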

02.07.2025 19:02 — 👍 5 🔁 0 💬 2 📌 0

YouTube video by Sven

Kudzu Engine - Showcase

We've used the #voxel game engine we built as a team of students to make a little diorama puzzle game :)

This has been an incredibly fun experience, building this from the ground up, working together with designers and artists!

Engine: youtu.be/uvLZn1X_R0Q

Game: buas.itch.io/zentera

02.07.2025 19:02 — 👍 20 🔁 6 💬 1 📌 0

I'm assuming that by cluster you're referring to a voxel brick in this context :) (correct me if I'm wrong)

If each brick has its own transform, couldn't that result in gaps between the bricks? Do you do anything to combat that, or is it not an issue?

04.06.2025 13:44 — 👍 1 🔁 0 💬 1 📌 0

YouTube video by IGN

The Witcher 4 - UE 5.6 Tech Demo | State of Unreal 2025

Excited to finally show off Nanite Foliage www.youtube.com/watch?v=FJtF...

03.06.2025 18:19 — 👍 209 🔁 44 💬 14 📌 2

Awesome work, the demo looks amazing! :)

I'm curious: what is the voxel size difference between LODs?

Does one brick in LOD1 cover 4x4x4 bricks in LOD0?

Or does it cover 2x2x2 bricks in LOD0?

03.06.2025 20:09 — 👍 3 🔁 0 💬 1 📌 0

Hey, sorry for my late response! I’m happy you enjoyed reading my blog post :)

I don’t currently have an RSS feed; I always share the posts on socials instead.

But I might look into RSS sometime.

09.05.2025 14:16 — 👍 1 🔁 0 💬 0 📌 0

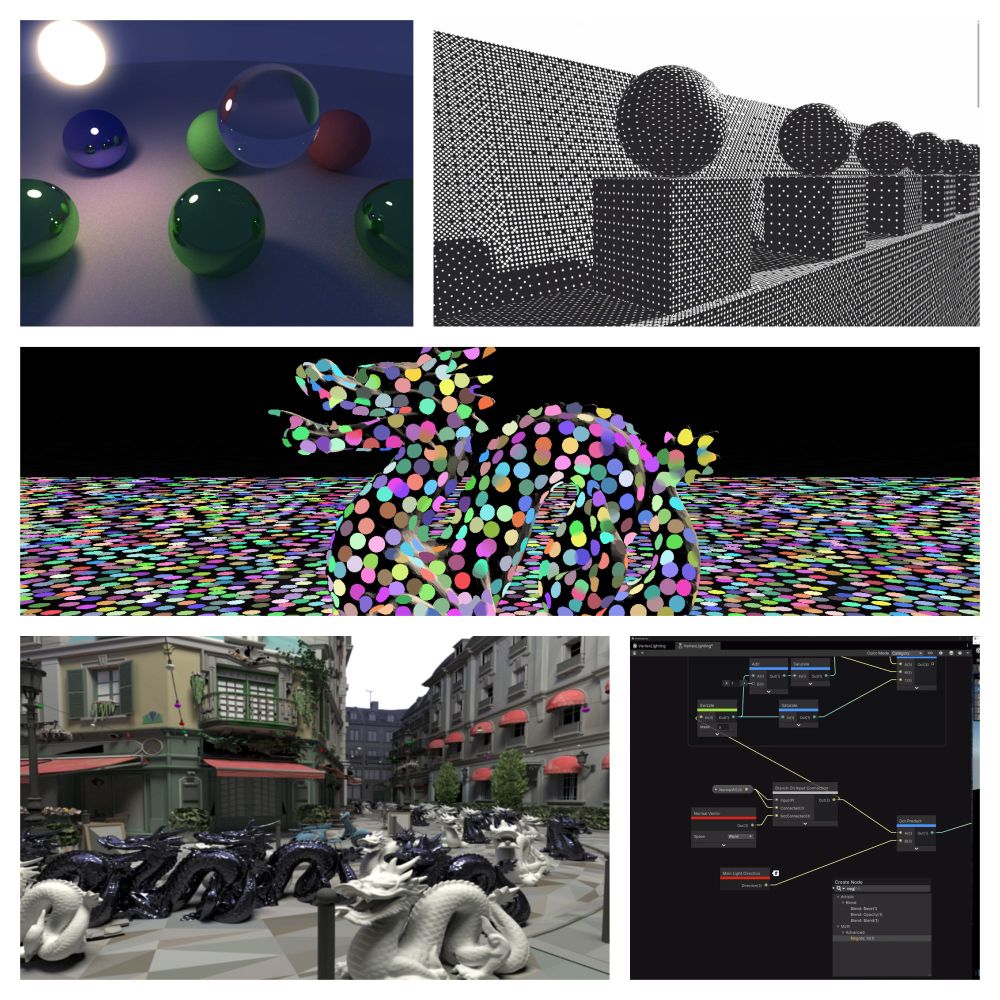

Here's a video of the demo we created with the engine, showing that it can be used to make actual games! :D

16.04.2025 14:57 — 👍 9 🔁 0 💬 0 📌 0

Cone traced reflections.

Cone traced soft shadows.

Cone traced soft shadows & ambient occlusion.

For the past 8 weeks, I've been working with a team of fellow students on a voxel game engine. I've mainly worked on the graphics: creating a cross-platform render graph for us, and working together with @scarak.bsky.social on our cone-traced lighting and various other graphics features! :)
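A minimal Python sketch of the core loop behind cone-traced effects like the ones above. It assumes a hypothetical `sample(pos, mip)` that returns `(rgb, alpha)` from a prefiltered, mipmapped voxel volume. The step size and stopping threshold are illustrative choices, not the engine's.

```python
import math


def cone_trace(sample, origin, direction, aperture, voxel_size, max_dist):
    """Accumulate color and opacity along a cone; returns (rgb, alpha).

    aperture: full cone angle in radians. Mip 0 is assumed to have voxel size `voxel_size`.
    """
    color = [0.0, 0.0, 0.0]
    alpha = 0.0
    t = voxel_size  # start one voxel out to avoid sampling the surface itself
    tan_half = math.tan(aperture * 0.5)
    while t < max_dist and alpha < 0.99:
        # The cone footprint grows linearly with distance; pick the matching mip level.
        diameter = max(voxel_size, 2.0 * t * tan_half)
        mip = math.log2(diameter / voxel_size)
        pos = tuple(o + d * t for o, d in zip(origin, direction))
        rgb, a = sample(pos, mip)
        # Front-to-back "over" compositing: nearer samples occlude farther ones.
        w = (1.0 - alpha) * a
        color = [c + w * s for c, s in zip(color, rgb)]
        alpha += w
        t += diameter * 0.5  # step proportional to the cone width
    return tuple(color), alpha
```

For soft shadows or ambient occlusion, `1 - alpha` can serve as visibility along the cone; reflections use the accumulated color instead.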

16.04.2025 14:57 — 👍 20 🔁 1 💬 1 📌 0

GitHub - mxcop/src-dgi: Surfel Radiance Cascades Diffuse Global Illumination

Surfel Radiance Cascades Diffuse Global Illumination - mxcop/src-dgi

I decided to open source my implementation of Surfel Radiance Cascades Diffuse Global Illumination, since I'm no longer actively working on it. Hopefully the code can serve as a guide to others who can push this idea further :)

github.com/mxcop/src-dgi

12.03.2025 08:21 — 👍 117 🔁 20 💬 0 📌 0

It’s interesting to see the CWBVH performing worse here. If I remember correctly, it usually outperforms the others, right?

03.03.2025 11:20 — 👍 0 🔁 0 💬 1 📌 0

Graphics Programming weekly - Issue 376 - January 26th, 2025 www.jendrikillner.com/post/graphic...

27.01.2025 14:02 — 👍 79 🔁 23 💬 0 📌 0

MΛX - Surfel Maintenance for Global Illumination

A comprehensive explanation of my implementation of Surfel probe maintenance.

I wrote a blog post on my implementation of the Surfel maintenance pipeline from my Surfel Radiance Cascades project. Most of what I learned came from "SIGGRAPH 2021: Global Illumination Based on Surfels", a great presentation from EA SEED :)

m4xc.dev/blog/surfel-...

25.01.2025 15:45 — 👍 118 🔁 32 💬 1 📌 0

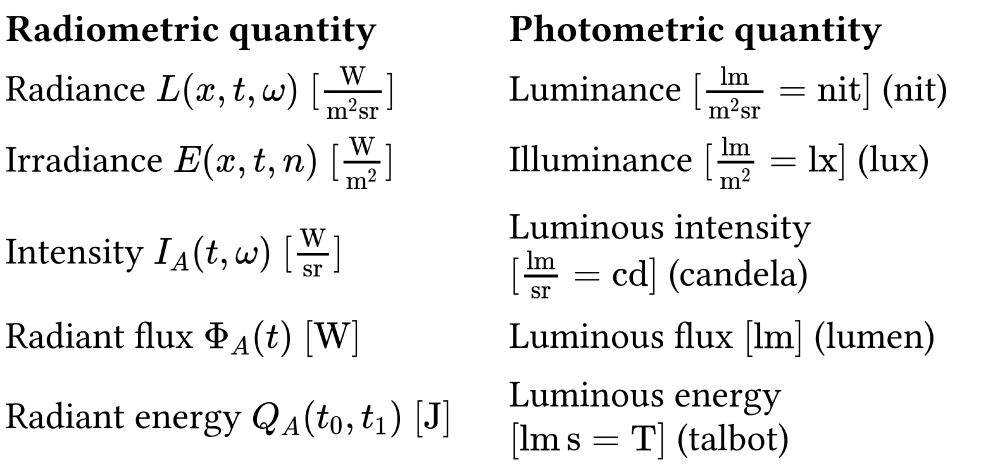

My new blog post explains spectral radiometric quantities, photometry and the basics of spectral rendering. This is part 2/2 in a series on radiometry. Learn what it means when a light bulb has 800 lumens and how your renderer can account for that.
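For reference, here is the standard photometric definition behind a lumen rating (the linked post may present it differently). Luminous flux weights the spectral radiant flux by the CIE photopic luminosity function $V(\lambda)$, which peaks at 1 at 555 nm, and scales it by the maximum luminous efficacy $K_m \approx 683\ \text{lm/W}$:

$$
\Phi_v = K_m \int V(\lambda)\, \Phi_{e,\lambda}(\lambda)\, d\lambda
$$

A hypothetical monochromatic 555 nm source would therefore need roughly $800 / 683 \approx 1.17$ W of radiant flux to emit 800 lm. Any other spectrum has $V(\lambda) < 1$ somewhere and needs more.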

momentsingraphics.de/Radiometry2P...

19.01.2025 08:29 — 👍 45 🔁 10 💬 1 📌 0

Join the Radiance Cascades Discord Server!

Check out the Radiance Cascades community on Discord – hang out with 652 other members and enjoy free voice and text chat.

With RC, we store intervals for every probe (an interval is a ray segment with a minimum and maximum time).

Each interval is represented as HDR RGB radiance plus a visibility term that is either 0 or 1.
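A minimal Python sketch of how two such intervals can be merged front to back. It assumes visibility 1 means the interval is unoccluded and 0 means it hit something. In a full radiance cascades implementation, the far interval would come from the next cascade and is usually interpolated from several probes; that part is omitted here.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    radiance: tuple    # HDR RGB radiance gathered along [t_min, t_max)
    visibility: float  # 1.0 = nothing hit (see through), 0.0 = blocked


def merge(near, far):
    """Extend a near interval with the interval directly behind it."""
    # Radiance from the far interval only gets through if the near one is unoccluded.
    rgb = tuple(n + near.visibility * f for n, f in zip(near.radiance, far.radiance))
    return Interval(rgb, near.visibility * far.visibility)
```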

Here’s the discord link :)

discord.gg/6sUYQjMj

16.01.2025 23:48 — 👍 1 🔁 0 💬 0 📌 0

That’s correct :)

GT stands for Ground Truth: it’s the brute-force, correct result that I want to achieve.

16.01.2025 11:48 — 👍 2 🔁 0 💬 0 📌 0

Now I'm working on integrating a multi-level hash grid for the Surfels, based on NVIDIA's SHARC and @h3r2tic.bsky.social's fork of kajiya. Here's a sneak peek of the heatmap debug view :)
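A rough Python sketch of a camera-relative multi-level hash grid, in the spirit of SHARC-style grids. The log2-of-distance level selection, the base cell size, and the use of a Python dict instead of a fixed-size GPU hash table with probing are all assumptions made for illustration.

```python
import math

BASE_CELL = 0.25  # hypothetical cell size at level 0 (world units)
MAX_LEVEL = 8     # hypothetical coarsest level


def grid_level(pos, camera):
    """One level per doubling of distance beyond the base cell size (clamped)."""
    dist = math.dist(pos, camera)
    level = int(math.floor(math.log2(max(dist / BASE_CELL, 1.0))))
    return min(level, MAX_LEVEL)


def cell_key(pos, camera):
    """Key = (level, cell coords); a GPU version would hash this to a 32-bit slot."""
    level = grid_level(pos, camera)
    size = BASE_CELL * (1 << level)  # cells double in size per level
    return (level,) + tuple(math.floor(p / size) for p in pos)


class HashGrid:
    def __init__(self):
        self.cells = {}  # key -> list of surfel ids

    def insert(self, surfel_id, pos, camera):
        self.cells.setdefault(cell_key(pos, camera), []).append(surfel_id)

    def query(self, pos, camera):
        return self.cells.get(cell_key(pos, camera), [])
```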

15.01.2025 18:28 — 👍 10 🔁 0 💬 0 📌 0

I've been validating the results of my Surfel Radiance Cascades by comparing them to ground truth and using the white furnace test. I think I'm really close to GT now. Attached are 2 images: one is GT, the other is SRC :)
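The white furnace test works because the answer is known analytically. Under a uniform environment of radiance L_env, a Lambertian surface with albedo ρ reflects exactly ρ·L_env. Here is a minimal single-bounce Monte Carlo version of that check in Python, using uniform hemisphere sampling (an illustrative choice, not the project's estimator).

```python
import math
import random


def furnace_estimate(albedo, env_radiance, samples, seed=1):
    """Estimate reflected radiance of a Lambertian surface under a uniform environment."""
    rng = random.Random(seed)
    brdf = albedo / math.pi       # Lambertian BRDF
    pdf = 1.0 / (2.0 * math.pi)   # uniform hemisphere pdf (per steradian)
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()  # for uniform hemisphere sampling, cos(theta) ~ U[0, 1)
        total += brdf * env_radiance * cos_theta / pdf
    return total / samples
```

Any systematic deviation from `albedo * env_radiance` in a full renderer points to energy being lost or gained somewhere in the pipeline.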

15.01.2025 18:28 — 👍 31 🔁 1 💬 3 📌 0

I've been improving the Surfel Radiance Cascades since my last post, ironing out bugs one by one. It's starting to get difficult to see the difference between my ground truth renderer and the SRC one :)

(in this rather low-frequency scene; more high-frequency tests coming soon)

08.01.2025 18:13 — 👍 13 🔁 0 💬 1 📌 0

After many weeks of effort, I finally have radiance on screen! I’ve still got issues, mostly related to my Surfel acceleration structure, but I’d say the results already look quite promising. It has a kind of hand-painted look to it now :)

03.01.2025 09:38 — 👍 39 🔁 4 💬 0 📌 0

Engineering Fellow at Epic Games. Author of Nanite.

Freelance French pixel/digital artist who likes drawing silly things!

Working on @rappenems-reforge.bsky.social ✨

Join my Discord💙 http://tinyurl.com/2fpn5k9k

Invented spatiotemporal blue noise, created Gigi rapid graphics prototyping & dev platform.

https://github.com/electronicarts/gigi

20+ year game dev, and graphics researcher. Currently at EA SEED.

Tech blog:

https://blog.demofox.org/

Hi I'm Marl and I make digital things!

Any pronouns. DMs always open.

Technical Art / Game Audio person looking for work! Huge game technology dork. Big robot sometimes. Cimbrian menace.

📍~Hamburg, DE | 🏳️🌈

Graphics researcher at TU Delft. Formerly Intel, KIT, NVIDIA, Uni Bonn. Known for moment shadow maps, MBOIT, blue noise, spectra, light sampling. Opinions are my own.

https://MomentsInGraphics.de

Making pretty pictures with GPUs

https://blog.danielschroeder.me/

Ray Tracing Radical, Turok Technophile, Crysis Cultist, Motion Blur Menace and PC Police Officer for @Digitalfoundry. Also called Hermann.

I love Vulkan

Rendering Engineer at Tuxedo Labs

Senior Graphics Programmer at Larian Studios

Guitarist 🎸, pianist 🎹, trying drums 🥁

🖤 Coding, science, cats, horror

"For those who come after" - Gustave

Aka Lady Bloom. ☀️🇫🇷

Principal Product Manager on Substance 3D Painter. Stay up at night to fiddle with shader stuff.

- https://www.froyok.fr/

- https://mastodon.gamedev.place/@froyok

Principal Software Engineer at Electronic Arts

Doing research at Adobe in Computer Graphics/Vision/ML on appearance & content authoring and generation. I also like photography, and baking, but I try to keep it under control!

https://valentin.deschaintre.fr

Empowering real-time graphics developers with advanced language features that enhance portability and productivity in GPU shader development.

Graphics Programmer @ Triumph

Interests Include - Games, Cars, Tech and Art

Sharing stuff about the abovementioned!

A year 2 student game programming at BUAS

24 yo Game Developer, Dutch

Junior Graphics Programmer @ Massive Entertainment

4th Year Games Student @ BUas

Passionate about Game Development and Computer Graphics, currently investigating Terrain rendering & Volumetrics with DX12

Rendering Engineer at Traverse Research